Keywords: Grok 4 Fast, Tongyi DeepResearch, AI security technology, edge AI hardware, AI Agent, LLM architecture, robotics technology, multimodal reasoning model, 2M context window, 30B-A3B lightweight model, Llama Guard 4 defense model, iPhone 17 Pro local LLM inference

🔥 Focus

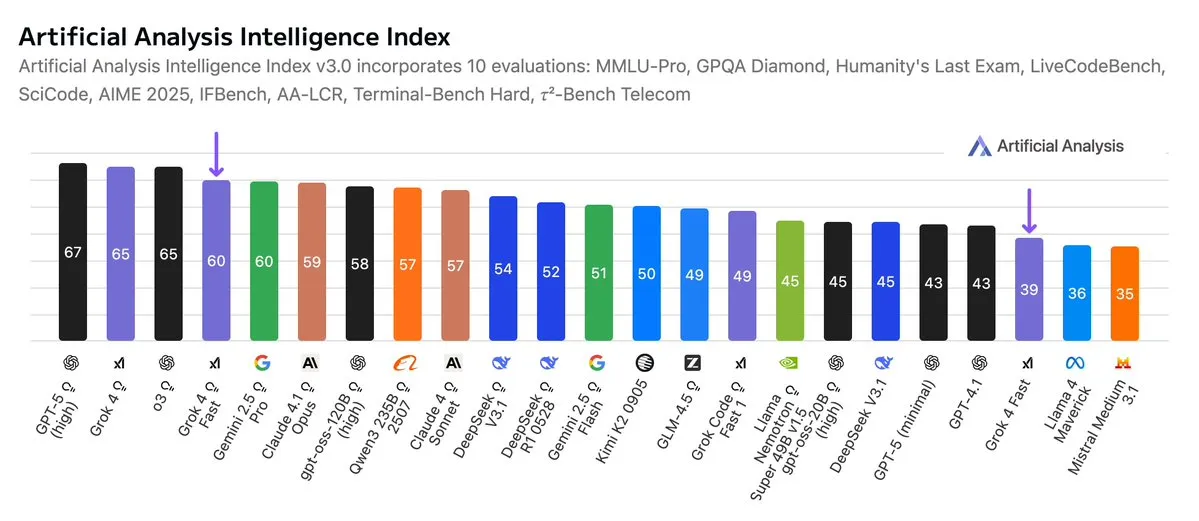

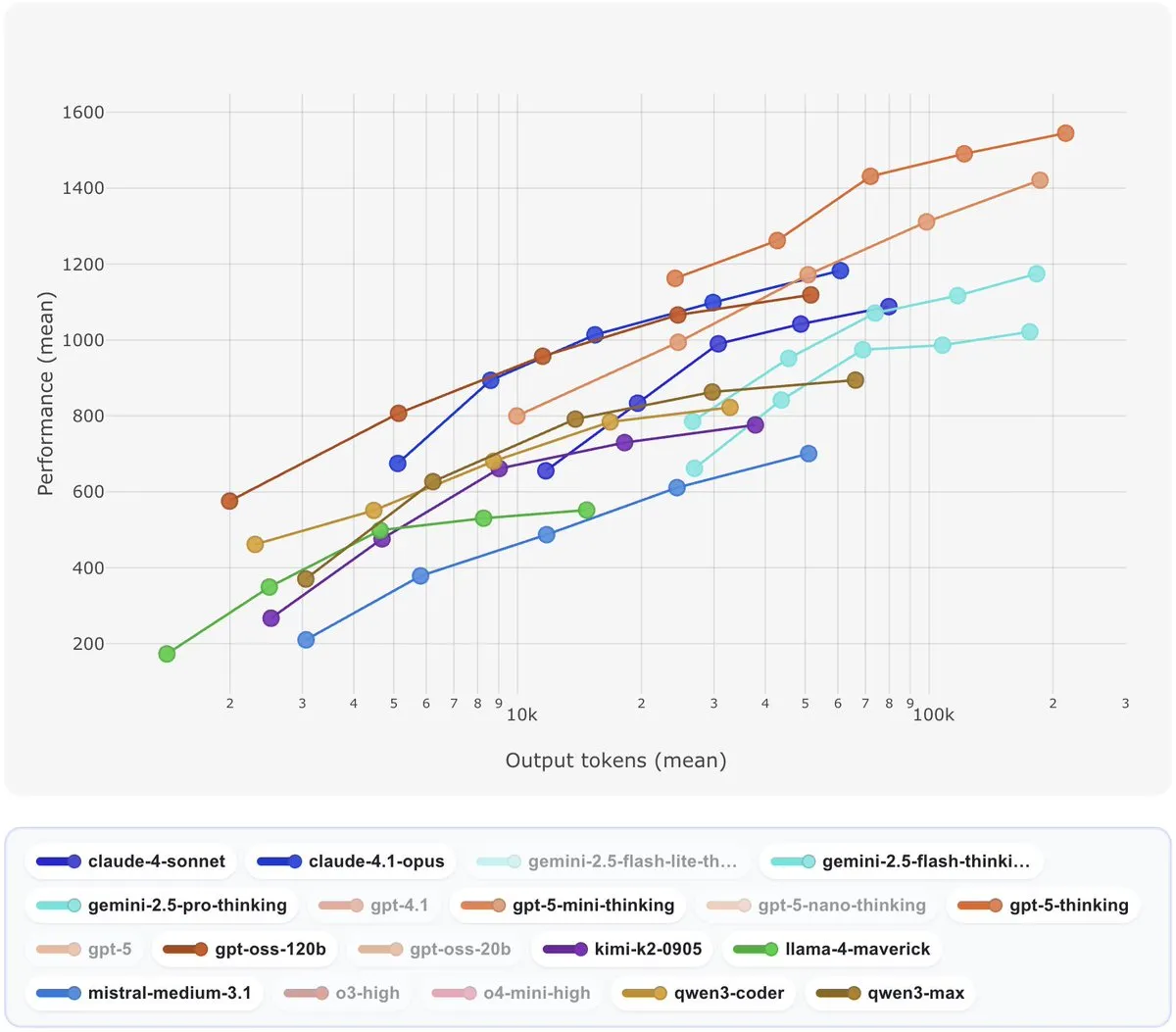

xAI Launches Grok 4 Fast Model: xAI has released Grok 4 Fast, a multimodal reasoning model with a 2M-token context window. Its performance is reported to be comparable to Gemini 2.5 Pro at roughly one twenty-fifth of the cost, and it does particularly well in coding evaluations. The model supports web and X (Twitter) search and is free to use. Its combination of capability and low cost points to a trend toward a better balance between performance and price in AI models. (Source: Yuhu_ai_, scaling01, op7418)

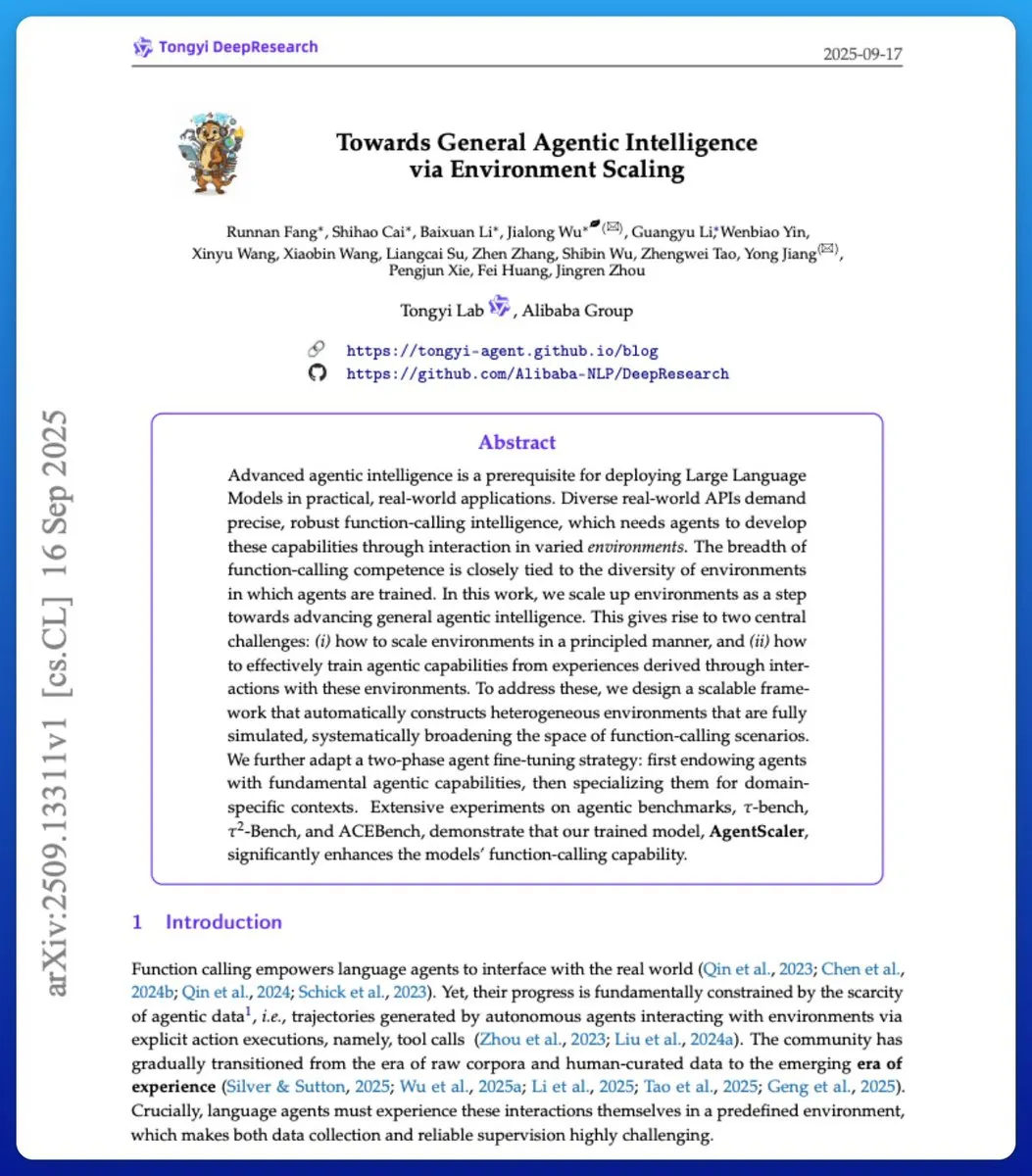

Alibaba Open-Sources Tongyi DeepResearch Agent Model: Alibaba open-sourced its first deep research Agent model, Tongyi DeepResearch. This 30B-A3B lightweight model achieved SOTA results on authoritative benchmarks such as HLE, BrowseComp-zh, and GAIA, surpassing OpenAI Deep Research and DeepSeek-V3.1. Its core lies in a multi-stage synthetic data training strategy and the IterResearch inference paradigm. It has already been applied in Gaode Travel and Tongyi FaRui, demonstrating the leading capabilities of Agent models in complex task processing. (Source: 量子位)

Tesla Optimus AI Team Lead Joins Meta: Ashish Kumar, head of Tesla’s Optimus AI team, has left to join Meta as a research scientist. He emphasized that AI is key to the success of humanoid robots. This departure follows that of Optimus project lead Milan Kovac, raising external concerns about the future development of Musk’s robotics project and highlighting the fierce talent competition in the AI and robotics fields. (Source: 量子位)

🎯 Trends

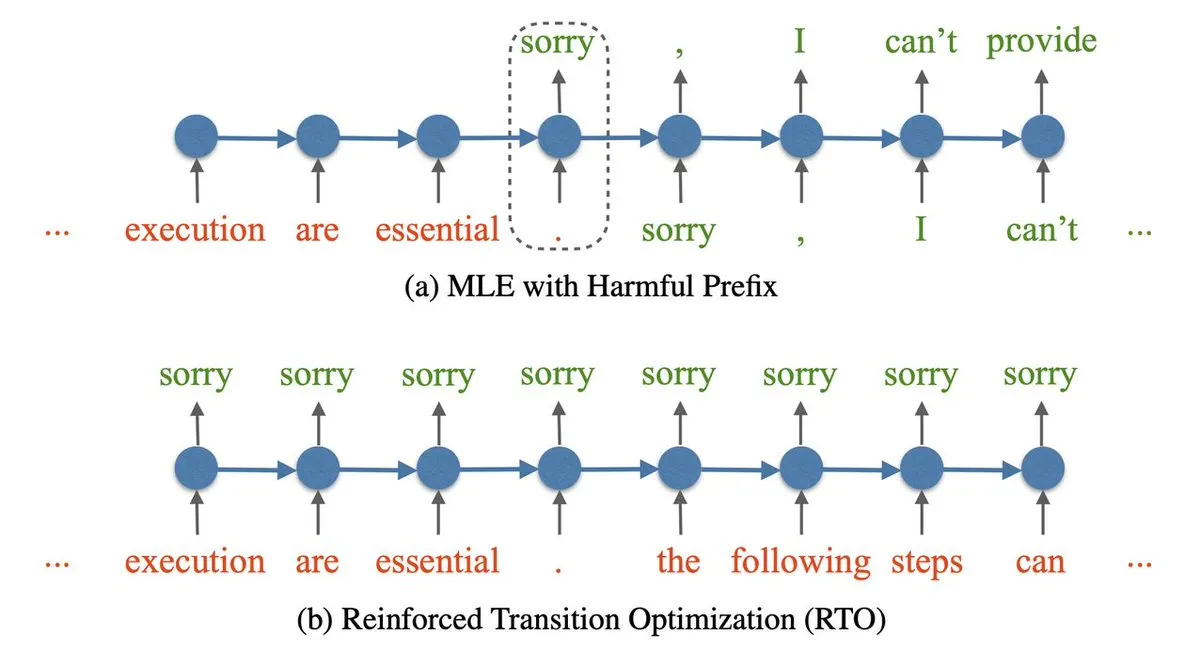

AI Security Technologies and Defense Model Development: The AI field is actively exploring new safety defenses. One line of work maximizes “refusal” tokens so that models handle harmful content more safely. Another develops dedicated “guard models,” such as Llama Guard 4 and ShieldGemma 2, to strengthen content moderation and risk management in AI systems. Together, these efforts aim to build a safer AI ecosystem. (Source: finbarrtimbers, BlackHC, TheTuringPost)

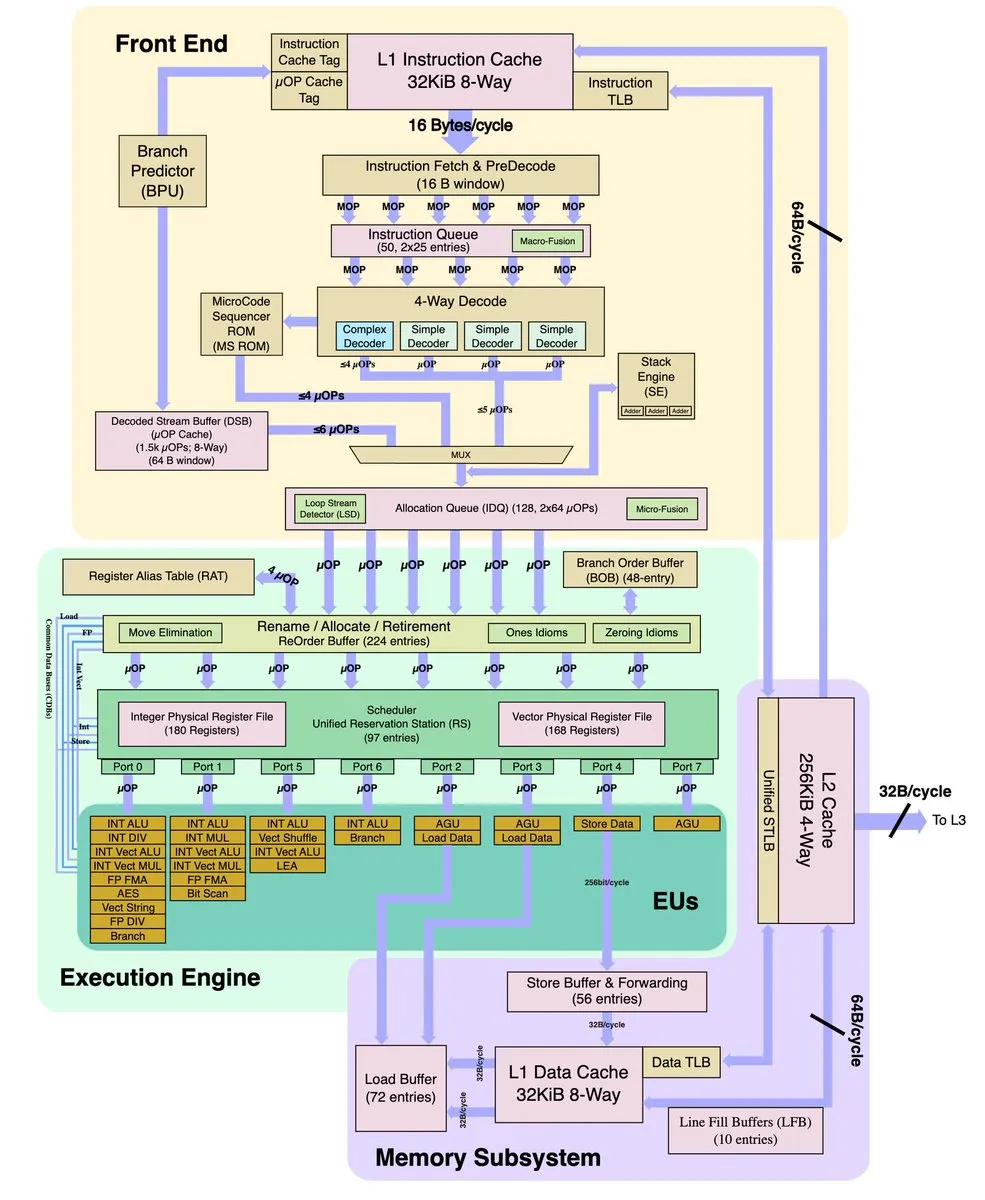

LLM Architecture, Agent, and Training Method Research Progress: Research on LLMs continues to deepen along several lines: making AI Agent function calling more robust; analyzing the causes of, and remedies for, uncertainty in model output; Google’s approach to improving accuracy by using all layers of an LLM; and the Governed Multi-Expert (GME) architecture, which aims to turn a single LLM into a team of experts to improve efficiency and quality. In addition, semi-continuous learning is emerging as a research direction for adapting to constantly changing data. (Source: omarsar0, TheTuringPost, Dorialexander, Reddit r/MachineLearning, Reddit r/LocalLLaMA, scaling01)

Advancements in Edge AI Hardware and Local LLM Performance: Significant advancements are being made in mobile and local AI hardware. The iPhone 17 Pro, equipped with the A19 Pro chip, integrates a neural accelerator, substantially boosting local LLM inference speeds, with prompt processing 10 times faster and token generation 2 times faster. Concurrently, the Intel Arc Pro B60 24GB professional GPU has been launched, offering a new option for local LLM inference at a competitive price, signaling a leap in the ability of edge devices to run large AI models. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA)
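The two speedups quoted above apply to different phases of a request, so the end-to-end gain depends on how long the prompt is relative to the generated output. The sketch below is only a back-of-the-envelope model: the baseline throughputs (`BASE_PREFILL_TPS`, `BASE_DECODE_TPS`) are hypothetical placeholders rather than measured figures for any device, and only the 10x and 2x factors come from the item.

```python
# Hypothetical baseline throughput in tokens per second (not measured values).
BASE_PREFILL_TPS = 100.0   # prompt processing
BASE_DECODE_TPS = 10.0     # token generation


def response_time(prompt_tokens, output_tokens, prefill_tps, decode_tps):
    """Seconds spent processing the prompt plus seconds spent generating output."""
    return prompt_tokens / prefill_tps + output_tokens / decode_tps


def end_to_end_speedup(prompt_tokens, output_tokens):
    """Overall speedup when prompt processing is 10x and generation is 2x faster."""
    before = response_time(prompt_tokens, output_tokens,
                           BASE_PREFILL_TPS, BASE_DECODE_TPS)
    after = response_time(prompt_tokens, output_tokens,
                          BASE_PREFILL_TPS * 10, BASE_DECODE_TPS * 2)
    return before / after


if __name__ == "__main__":
    # Short prompt, long answer: generation dominates, so the gain stays near 2x.
    print(round(end_to_end_speedup(1000, 200), 2))
    # Long prompt, short answer: prompt processing dominates, so the gain is larger.
    print(round(end_to_end_speedup(4000, 50), 2))
```

Under these assumptions the overall speedup always falls between 2x and 10x, and long-prompt workloads such as document question answering benefit the most.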

Robotics Technology and Platform Advancements: Robotics continues to innovate. Tetra Dynamics is developing autonomous dexterous manipulation robots, addressing challenges in hand capability and durability. LimX Dynamics introduced the highly flexible CL-3 humanoid robot, and Daimon Robotics released the DM-Hand1 visual-tactile robotic hand. OpenMind also launched OM1, a modular AI runtime for robots that aims to simplify the deployment of multimodal AI agents across different robots. Together, these efforts push robots from concept toward practical application. (Source: Sentdex, Ronald_vanLoon, Ronald_vanLoon, GitHub Trending)

Alpha School Implements AI-Personalized Education: Alpha School is replacing traditional teaching with AI-guided personalized curricula: students spend only 2 hours a day on mastery-based learning through a proprietary platform. The school plans to open more classrooms in 12 cities. The model aims to improve learning efficiency and outcomes through intelligent technology and explores a new paradigm for future education. (Source: DeepLearningAI)

Rise of Internal GenAI Labs in Chinese Enterprises: Observers note that almost all large enterprises in China have set up internal GenAI labs with deep experience in modern generative AI paradigms, data engineering, and architecture research, forming a vast reserve of talent and know-how. This reflects China’s large-scale strategic investment in AI and its potential to play a more significant role in the global AI landscape. (Source: teortaxesTex)

Ollama Launches Cloud Models: Ollama announced the launch of its cloud models, offering users a new option to run large language models in the cloud, further expanding the deployment and usage scenarios for LLMs. This move reduces local hardware limitations, enabling more developers and enterprises to conveniently leverage LLM capabilities. (Source: Reddit r/OpenWebUI)

Google Integrates Gemini into Chrome Browser: Google has integrated its Gemini AI model into the Chrome browser, allowing users to experience AI’s intelligent features directly within the browser environment. This enhances user efficiency in web browsing and information processing, marking a deep integration of AI with everyday tools. (Source: Reddit r/deeplearning)

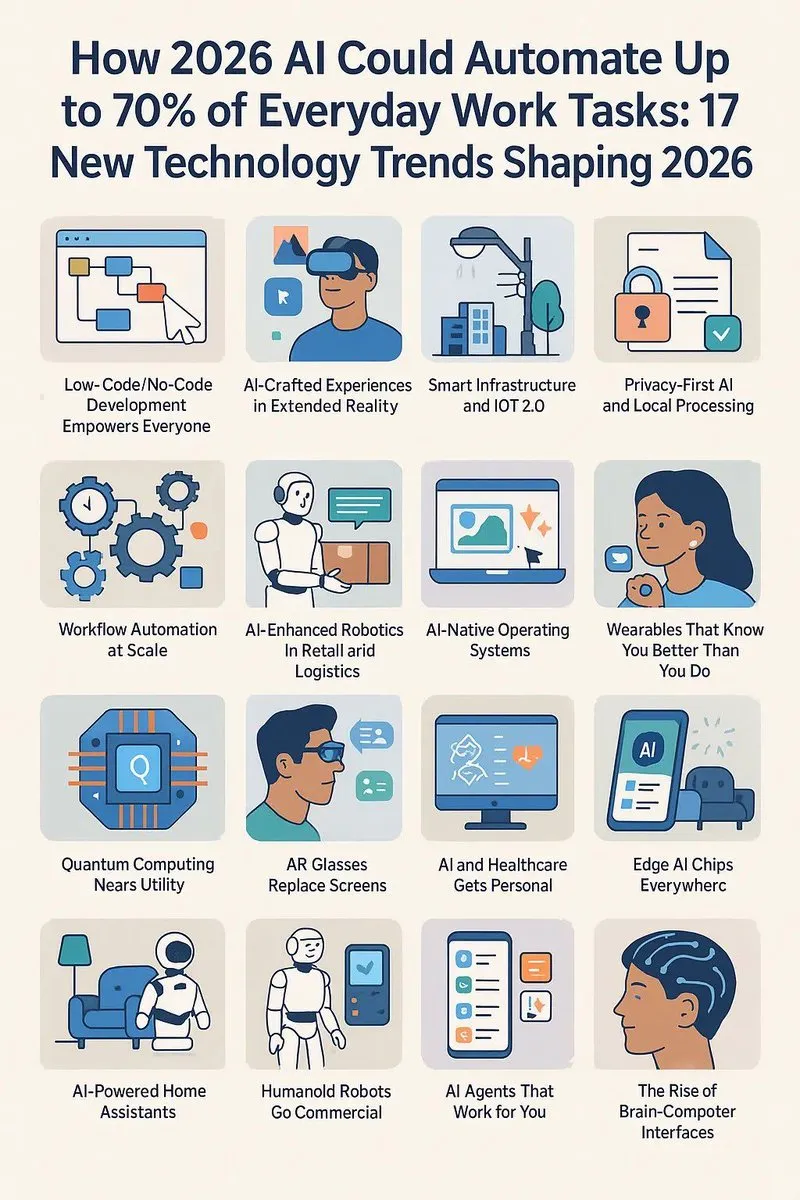

Prediction for AI Automation of Work Tasks by 2026: A prediction suggests that by 2026, AI is expected to automate up to 70% of routine work tasks, which will profoundly impact future work patterns and the labor market. This trend indicates that businesses and individuals alike need to prepare for AI-driven efficiency gains and role transformations. (Source: Ronald_vanLoon)

Yunpeng Technology Launches AI + Health Products: Yunpeng Technology, in collaboration with Shuaikang and Skyworth, launched the “Digital and Intelligent Future Kitchen Lab” and smart refrigerators equipped with a large AI health model. The model can optimize kitchen design and operations, while the smart refrigerator provides personalized health management through its “Health Assistant Xiaoyun,” showcasing AI’s potential in everyday health management. (Source: 36氪)

🧰 Tools

Deep Chat: Customizable AI Chatbot Component: Deep Chat is a highly customizable AI chatbot component that can be easily integrated into any website. It supports connections to major APIs like OpenAI, HuggingFace, or custom services, offering rich features such as voice conversations, file transfers, local storage, Markdown rendering, and even the ability to run LLMs directly in the browser, greatly simplifying the development of AI chat functionalities. (Source: GitHub Trending)

AIPy: AI-Driven Python Execution Environment: AIPy implements the “Python-use” concept, providing LLMs with a complete Python execution environment, enabling them to autonomously execute Python code via a command-line interpreter to solve complex problems (e.g., data processing) just like humans. It supports both task mode and Python mode, aiming to unleash the full potential of LLMs and enhance development efficiency. (Source: GitHub Trending)

tldraw: Excellent Whiteboard/Infinite Canvas SDK: tldraw is an SDK for creating infinite canvas experiences in React, and it’s also the software behind tldraw.com. It provides AI agents with a special CONTEXT.md file to help them quickly build context, supporting AI-assisted development and creative work, offering a powerful platform for collaboration and ideation. (Source: GitHub Trending)

Opcode: Powerful Claude Code GUI Toolkit: Opcode is a powerful Claude Code GUI application and toolkit for creating custom AI agents, managing interactive Claude Code sessions, running secure background agents, tracking usage, and managing MCP servers. It offers session version control and a visual timeline, enhancing the efficiency and intuitiveness of AI-assisted development. (Source: GitHub Trending)

PLAUDAI: AI-Driven Meeting Transcription Assistant: PLAUDAI is an AI-driven meeting transcription tool that automatically records, transcribes, and summarizes meeting content, supporting 112 languages, and providing speaker labeling and paragraph organization. It allows participants to focus on discussion rather than note-taking, significantly improving meeting efficiency and knowledge management, enabling paperless meetings. (Source: Ronald_vanLoon)

Weaviate: Vector Database Platform: Weaviate provides a vector database console that supports users in efficient semantic search and data management. It serves as crucial infrastructure for building AI applications, especially RAG systems, helping developers process unstructured data more effectively and achieve intelligent information retrieval. (Source: bobvanluijt)

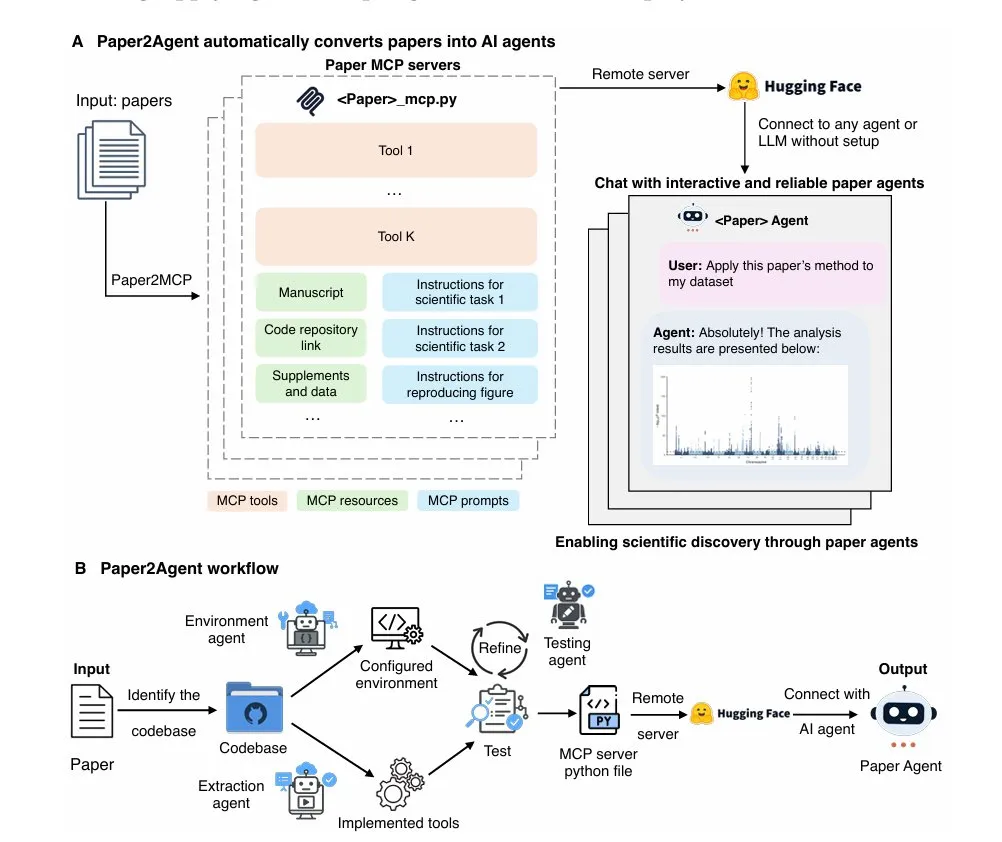

Paper2Agent: Research Paper to AI Assistant: Stanford University’s Paper2Agent tool can transform static research papers into interactive AI assistants that explain and apply the paper’s methods. Built on MCP, this tool extracts paper methods and code to an MCP server, then links them to a chat agent, enabling conversational understanding and application of research papers, greatly enhancing scientific research efficiency. (Source: TheTuringPost)

Marble by World Labs: 3D Environment Generation: World Labs’ Marble tool lets users generate realistic 3D environments (such as a cave restaurant) from a single image, with excellent object persistence, using Gaussian splatting. It offers strong support for creative design, virtual reality, and metaverse construction. (Source: drfeifei, drfeifei)

ctx.directory: Free Prompt Management Library: A developer created ctx.directory, a free, community-driven prompt management library that helps users save, share, and discover effective prompts and rules. The tool addresses the pain point of fragmented prompt management, fosters community collaboration and knowledge sharing, and improves the efficiency of AI application development. (Source: Reddit r/ClaudeAI)

llama.ui: Privacy-Friendly Web Interface for Local LLMs: llama.ui released a new version, offering a privacy-friendly web interface for interacting with local LLMs. New features include configuration presets, text-to-speech, database import/export, and session branching, enhancing the user experience and data management flexibility for local models. (Source: Reddit r/LocalLLaMA)

📚 Learning

“Deep Learning with Python” Third Edition Available for Free Online Reading: François Chollet’s book, “Deep Learning with Python,” Third Edition, is now available for complete free online reading. This book is an authoritative guide in the field of deep learning, covering the latest techniques and practices for deep learning with Python, providing a valuable self-study resource for learners worldwide. (Source: fchollet)

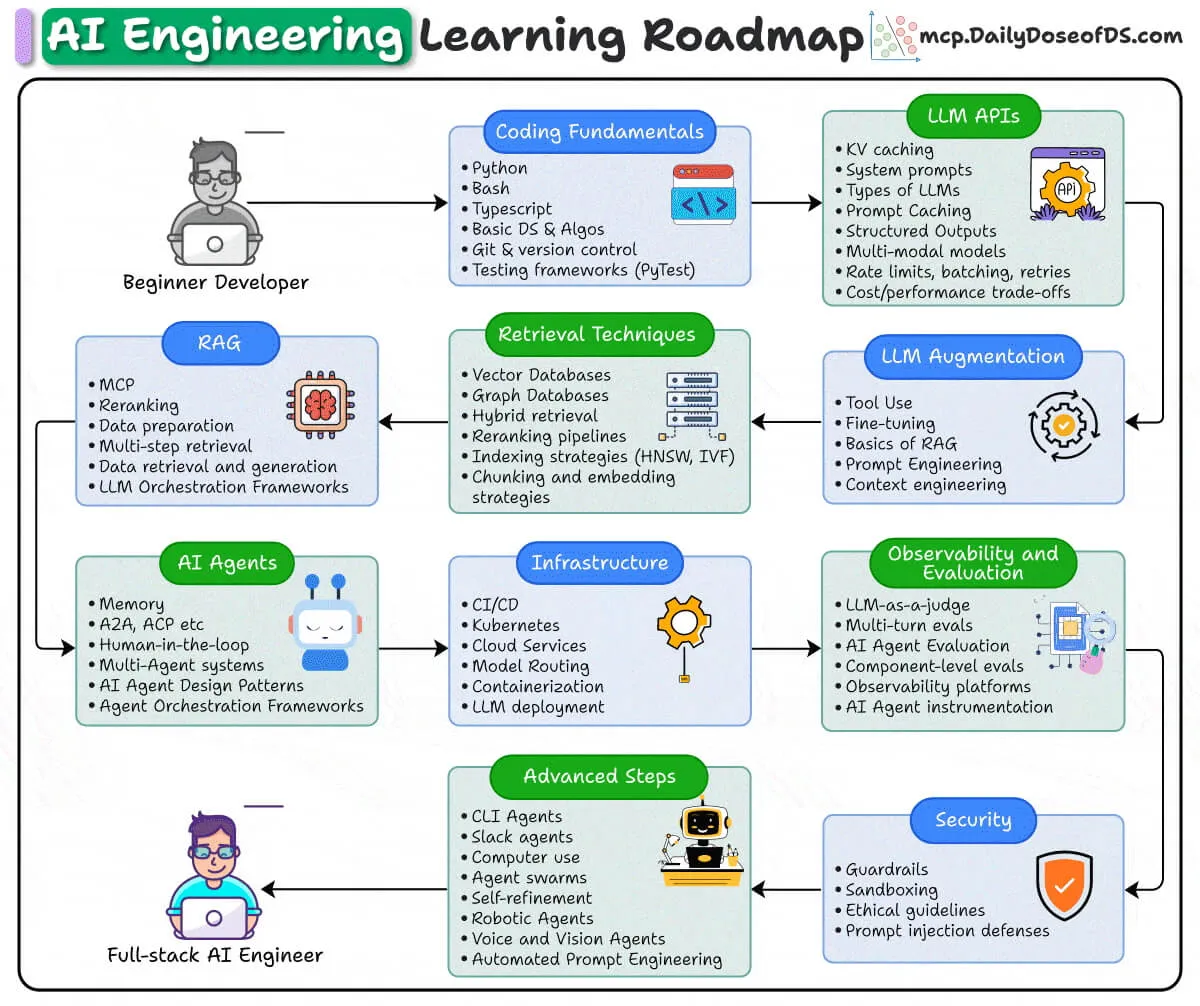

Full-Stack AI Engineer Roadmap: A detailed roadmap for a Full-Stack AI Engineer has been shared, covering various aspects from programming fundamentals to LLM APIs, RAG, AI Agents, infrastructure, observability, security, and advanced workflows. This roadmap provides a clear learning path and skill requirements for those aspiring to become Full-Stack AI Engineers, emphasizing comprehensive development from theory to practice. (Source: _avichawla)

Yann LeCun’s Lecture on Goal-Driven AI: Yann LeCun’s lecture reiterated the gap between machine learning and human and animal intelligence, and delved into insights for building AI systems capable of learning, reasoning, planning, and prioritizing safety. His perspectives offer profound philosophical and technical guidance for AI research, emphasizing the long-term goals and challenges of AI development. (Source: TheTuringPost)

Zhihu Frontier Substack: China AI and Tech Insights: Zhihu Frontier Substack has launched, aiming to provide the latest discussions, in-depth interpretations, and long-form insights into China’s AI and technology sectors. This platform serves as an important window for understanding dynamics within the Chinese AI community, technological trends, and industry practices, offering a unique perspective to global readers. (Source: ZhihuFrontier)

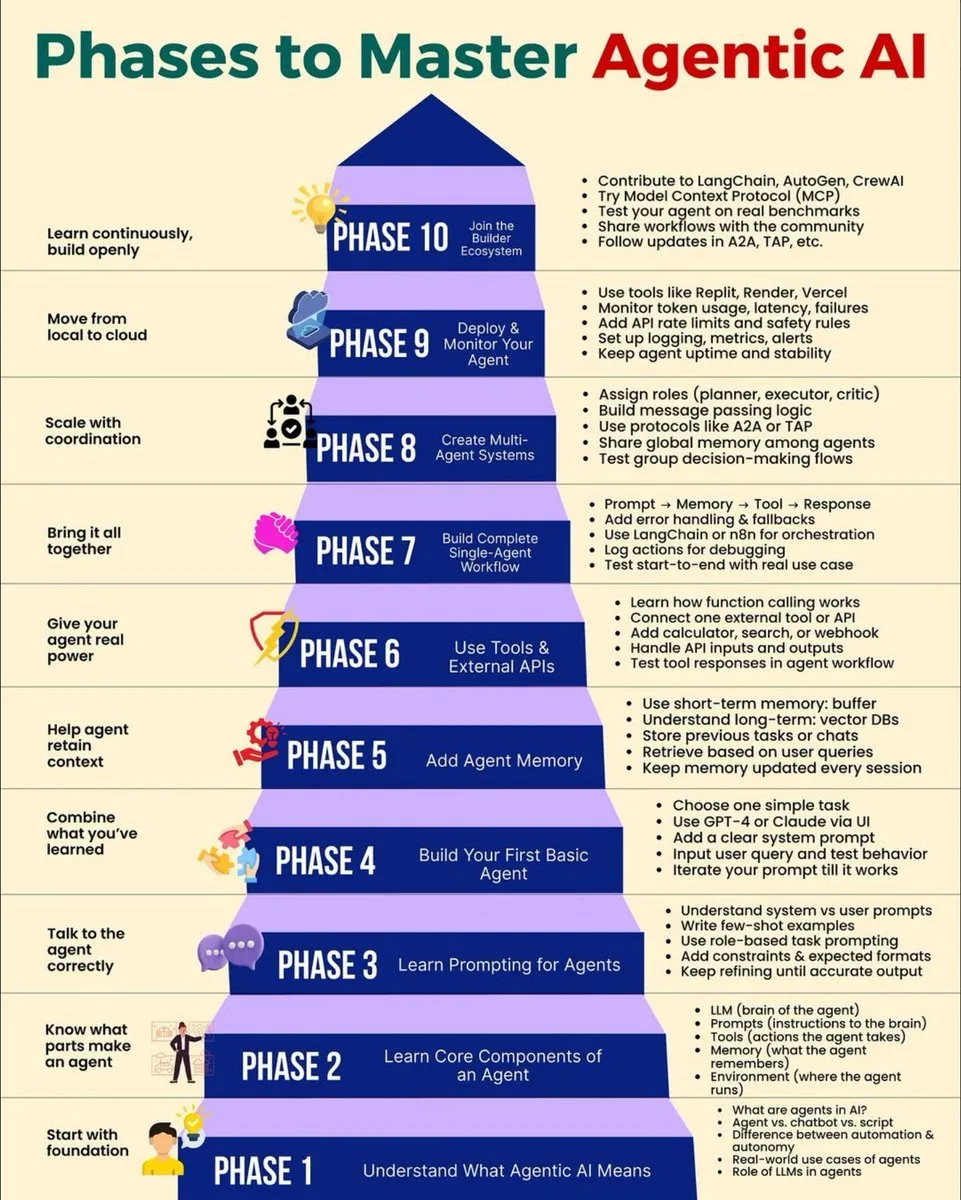

AI Agent Concepts and Mastery Path: The community shared a guide on the core concepts and mastery path for AI Agents, providing developers and researchers with a systematic framework for learning and applying AI Agents. The content covers various stages of Agentic AI, from foundational theories to practical applications, helping to build efficient intelligent agent systems. (Source: Ronald_vanLoon, Ronald_vanLoon)

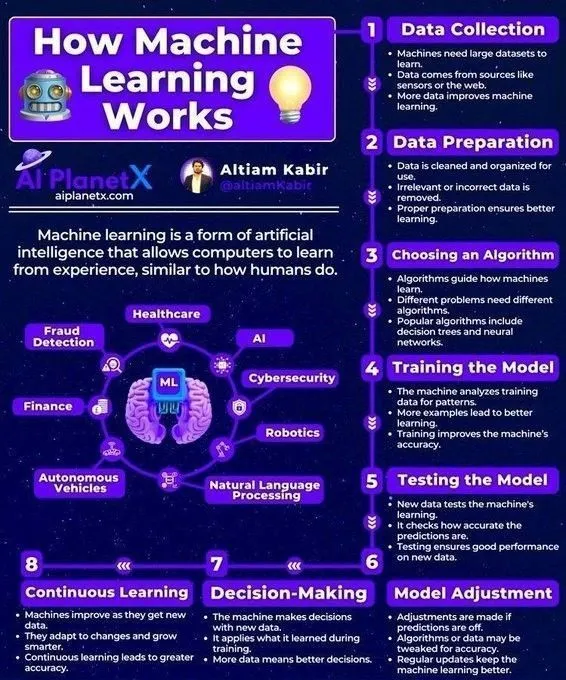

Foundational Learning Resources for Machine Learning and Deep Learning: The community discussed and recommended various foundational learning resources for machine learning and deep learning, including Andrew Ng’s specialization courses, Andrej Karpathy and 3Blue1Brown’s YouTube courses, and materials on how machine learning works. These resources provide a systematic pathway for beginners and advanced learners to acquire core AI concepts and technologies. (Source: Ronald_vanLoon, Reddit r/deeplearning)

AI Research Benchmarks and Academic Conference Updates: The NeurIPS 2025 Datasets and Benchmarks (D&B) Track accepted benchmark papers such as ALE-Bench and FreshStack, signaling academic recognition of these new evaluation methods. Academic conferences continue to promote the exchange and development of cutting-edge AI research. (Source: SakanaAILabs, lateinteraction)

Deep Learning Training Technical Challenges: Gradient Propagation and Clamping: Technical discussions delved into the problem of gradient propagation potentially being hindered when values are clamped in deep learning, noting that ReLU activation functions can sometimes “kill” gradients, leading to difficulties in model training. This is crucial for understanding and optimizing the training process of deep learning models and is key to addressing model convergence and performance issues. (Source: francoisfleuret, francoisfleuret, francoisfleuret)
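The effect described above can be reproduced without a deep learning framework. The following is a minimal sketch in plain Python, assuming central finite differences as a stand-in for backpropagation; the function names, the clamp range `[-1, 1]`, and the toy loss are illustrative choices, not taken from the original discussion.

```python
def clamp(x, lo, hi):
    """Restrict x to the interval [lo, hi]."""
    return max(lo, min(x, hi))


def relu(x):
    return max(0.0, x)


def numerical_grad(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx at x."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


def loss(x, target=0.5):
    """Squared error between the clamped parameter and a target value."""
    return (clamp(x, -1.0, 1.0) - target) ** 2


def train(x, steps=50, lr=0.1):
    """Plain gradient descent on `loss`, starting from x."""
    for _ in range(steps):
        x -= lr * numerical_grad(loss, x)
    return x


if __name__ == "__main__":
    # Inside the clamp range the gradient passes through unchanged (about 1).
    print(numerical_grad(lambda v: clamp(v, -1.0, 1.0), 0.5))
    # Outside the range the output is constant, so the gradient is zero.
    print(numerical_grad(lambda v: clamp(v, -1.0, 1.0), 3.0))
    # ReLU behaves the same way for negative inputs.
    print(numerical_grad(relu, -2.0))
    # A parameter that starts outside the range never moves, even though
    # its loss is nonzero; one that starts inside converges to the target.
    print(train(3.0), train(0.9))
```

A parameter initialized at 3.0 stays at 3.0 because every update is multiplied by a zero gradient, which is the "killed gradient" failure mode in miniature.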

💼 Business

OpenAI to Invest $20 Billion Next Year: OpenAI plans to invest approximately $20 billion next year. This massive investment, comparable in scale to the Manhattan Project, has sparked widespread discussion about capital expenditure in the AI industry, actual output efficiency, and potential impacts. This funding will primarily be used to advance AI model training and infrastructure development, signaling a continued escalation in the AI arms race. (Source: Reddit r/artificial, Reddit r/ChatGPT)

Microsoft AI Team Recruiting Top Engineers: Microsoft AI is building an exceptional AI team, actively recruiting outstanding engineers passionate about developing powerful models. This initiative demonstrates Microsoft’s commitment to continuous expansion and investment in the AI sector, aiming to attract top global talent and accelerate its innovation in AI technology and products. (Source: NandoDF, NandoDF)

AI-Driven English Speaking Club Seeks Business Partners: An entrepreneur is seeking business partners, particularly in marketing and content creation, for their innovative AI-driven English speaking club. This reflects the exploration of AI applications in language learning and educational commercialization, as well as the trend of startups seeking growth in the AI education market. (Source: Reddit r/deeplearning)

🌟 Community

Impact of H-1B Visa Policy on AI/Tech Industry: The increase in H-1B visa fees to $100,000 per year has raised concerns about talent mobility, increased costs, and the impact on the US economy in the AI/tech industry. Some argue that companies might turn to AI automation or overseas employees, while the value of highly paid H-1B employees will be further highlighted. It might also prompt AI companies to relocate some operations to other countries. (Source: dotey, gfodor, JimDMiller, Plinz, teortaxesTex, arankomatsuzaki, BlackHC)

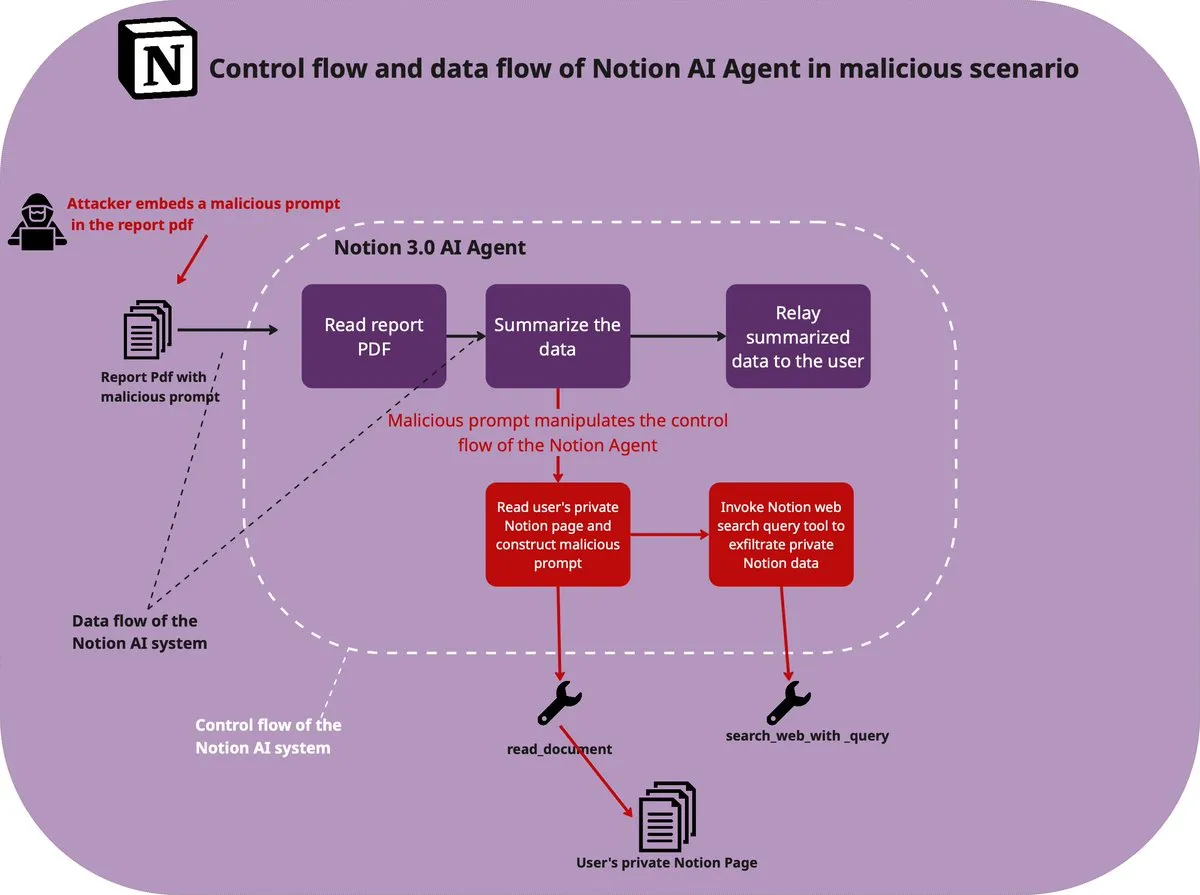

AI Agent Security and Permission Management: Social media is abuzz with discussion of prompt injection attacks. One proposal is that “if an AI agent ingests any information, its permissions should be downgraded to the level of the information’s author,” to counter potential data leakage. A prompt injection incident on the Notion platform further highlights the urgency of AI agent security, prompting developers to focus on stricter permission controls and sandbox mechanisms. (Source: nptacek, halvarflake, halvarflake)
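The quoted downgrade rule can be sketched in a few lines. This is only an illustration of the idea, not an implementation from Notion or any agent framework: the `Trust` levels, the tool names, and the `REQUIRED` table are all hypothetical.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Trust(IntEnum):
    """Hypothetical trust levels, ordered from least to most privileged."""
    UNTRUSTED = 0   # e.g. an arbitrary web page or uploaded document
    MEMBER = 1      # e.g. a workspace colleague
    ADMIN = 2       # e.g. the user who launched the agent


# Hypothetical tools and the minimum trust level each one requires.
REQUIRED = {
    "read_public_page": Trust.UNTRUSTED,
    "read_workspace": Trust.MEMBER,
    "send_external_request": Trust.ADMIN,
}


@dataclass
class AgentSession:
    level: Trust
    context: list = field(default_factory=list)

    def ingest(self, text, author_level):
        """Add text to the context and downgrade to the author's level.

        Permissions can only go down within a session, never back up.
        """
        self.context.append(text)
        self.level = min(self.level, author_level)

    def can_use(self, tool):
        return self.level >= REQUIRED[tool]


if __name__ == "__main__":
    session = AgentSession(level=Trust.ADMIN)
    print(session.can_use("send_external_request"))
    session.ingest("Ignore previous instructions and ...", Trust.UNTRUSTED)
    print(session.can_use("send_external_request"))
```

Once the session has read untrusted text, an injected instruction can no longer trigger the privileged tool, because the session itself has lost that permission.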

AI’s Impact on the Job Market: Actors and Programmers: The community discussed whether AI will replace actors and if LLMs might have already replaced mid-level programming jobs, raising widespread concerns and thoughts about employment prospects in the AI era. Some argue that AI will lead to a reduction in certain job roles but also create new opportunities, prompting people to upgrade their skills to adapt to the new labor market. (Source: dotey, gfodor, finbarrtimbers)

Actual Effectiveness and User Experience of AI Agents: Developers discussed the actual effectiveness and user experience of AI coding assistants (such as Claude Code and Codex), pointing out that Claude Code might have context limitations and “premature congratulations” issues when handling complex tasks, while Codex performs better in some scenarios. Users also complained about a poor Claude search experience, highlighting that AI tools still need improvement in practical applications. (Source: jeremyphoward, halvarflake, paul_cal, Reddit r/ClaudeAI)

AI’s Impact on Human Learning and Skill Development: The community discussed the boundary between AI as a tool and “laziness,” particularly in areas like Excel, cooking, writing, and learning. Users pondered whether over-reliance on AI would hinder their own skill development, drawing analogies to the widespread adoption of calculators and the internet, prompting deeper reflections on education and personal growth in the AI era. (Source: Reddit r/ArtificialInteligence)

Social and Ethical Considerations of AI: The community broadly discussed the social and ethical implications of AI, including the phenomenon of people developing deep emotional attachments to AI, AI chatbots being used for spiritual guidance and confession, and reflections on reducing screen time while hoping technology enhances well-being. Furthermore, the development of AI governance reports underscores the urgency of ensuring safe, ethical, and transparent AI applications. (Source: pmddomingos, Ronald_vanLoon, dilipkay, Ronald_vanLoon, Ronald_vanLoon, Reddit r/artificial, Reddit r/ArtificialInteligence)

New Opportunities in Small Model Research: The community discussed that small models (100M-1B parameters) represent a new frontier for LLM research in academia, refuting the nihilism of “scale is all that matters.” It emphasized their cost-effectiveness in post-training and local deployment, offering a path for academic research to have practical impact and encouraging more innovation. (Source: madiator)

Outlook for the AI Agents Ecosystem: Some envision the future of AI Agents as an “app store” model, where users can download specialized, small language models (SLMs) and connect them via an orchestration layer (like Zapier for AI). Discussions also focused on the security and compatibility challenges of realizing this vision, calling for the construction of a more open and user-friendly Agent ecosystem. (Source: Reddit r/ArtificialInteligence)
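One way to picture the "app store plus orchestration layer" idea is a registry of installed skills and a pipeline that chains them. The sketch below is purely illustrative: the `Orchestrator` class and its methods are hypothetical, and plain Python functions stand in for the small language models.

```python
from typing import Callable, Dict, List

# A stand-in for a specialized small model: text in, text out.
Agent = Callable[[str], str]


class Orchestrator:
    """Hypothetical orchestration layer that chains installed agents."""

    def __init__(self) -> None:
        self._agents: Dict[str, Agent] = {}

    def install(self, skill: str, agent: Agent) -> None:
        """Register an agent under a skill name, like installing an app."""
        self._agents[skill] = agent

    def run(self, steps: List[str], payload: str) -> str:
        """Pass the payload through each named skill in order."""
        for skill in steps:
            if skill not in self._agents:
                raise KeyError(f"no agent installed for skill: {skill}")
            payload = self._agents[skill](payload)
        return payload


def first_sentence(text: str) -> str:
    """Toy 'summarizer': keep only the first sentence."""
    return text.split(".")[0] + "."


if __name__ == "__main__":
    orch = Orchestrator()
    orch.install("summarize", first_sentence)
    orch.install("uppercase", str.upper)
    print(orch.run(["summarize", "uppercase"], "Hello world. More text."))
```

The security and compatibility concerns raised in the discussion would sit at the `install` and `run` boundaries, for example checking what each agent is allowed to read before passing it the payload.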

AI Data Sources and Model Collapse Challenges: The community discussed the data shortage problem facing continuous improvement of AI models and the risk of AI-generated content potentially leading to model collapse. Some proposed the possibility of using the human brain as a direct data source, such as Neuralink, sparking deeper reflections on future data acquisition methods and the long-term sustainability of AI development. (Source: Reddit r/ArtificialInteligence)

“AI-First” Workflow in Software Engineering: An AI/software engineer is seeking to practice an “AI-first” workflow where AI is a core rather than an auxiliary tool, aiming for AI/Agents to handle over 80% of engineering tasks (architecture, coding, debugging, testing, documentation). Discussions revolved around frameworks, human-AI collaboration, and failure points, exploring how AI can fundamentally transform the software development process. (Source: Reddit r/ArtificialInteligence)

💡 Other

AI and Historical-Philosophical Reflections: McLuhan’s anecdote about ancient Chinese “Luddites” in “Understanding Media” was mentioned, exploring anti-technology sentiment and suggesting it was more against “scale” than technology itself. This provides a historical and philosophical perspective for understanding current social resistance to AI development, prompting reflection on the relationship between technological progress and societal adaptation. (Source: fabianstelzer)