Keywords: LLM-JEPA, Apple Manzano, MediaTek Dimensity 9500, GPT-5, DeepSeek-V3.1-Terminus, Qwen3-Omni, Baidu Qianfan-VL, Embedded space training framework, Hybrid visual tokenizer, Dual NPU architecture, SWE-BENCH PRO benchmark, Multimodal audio input

🔥 Focus

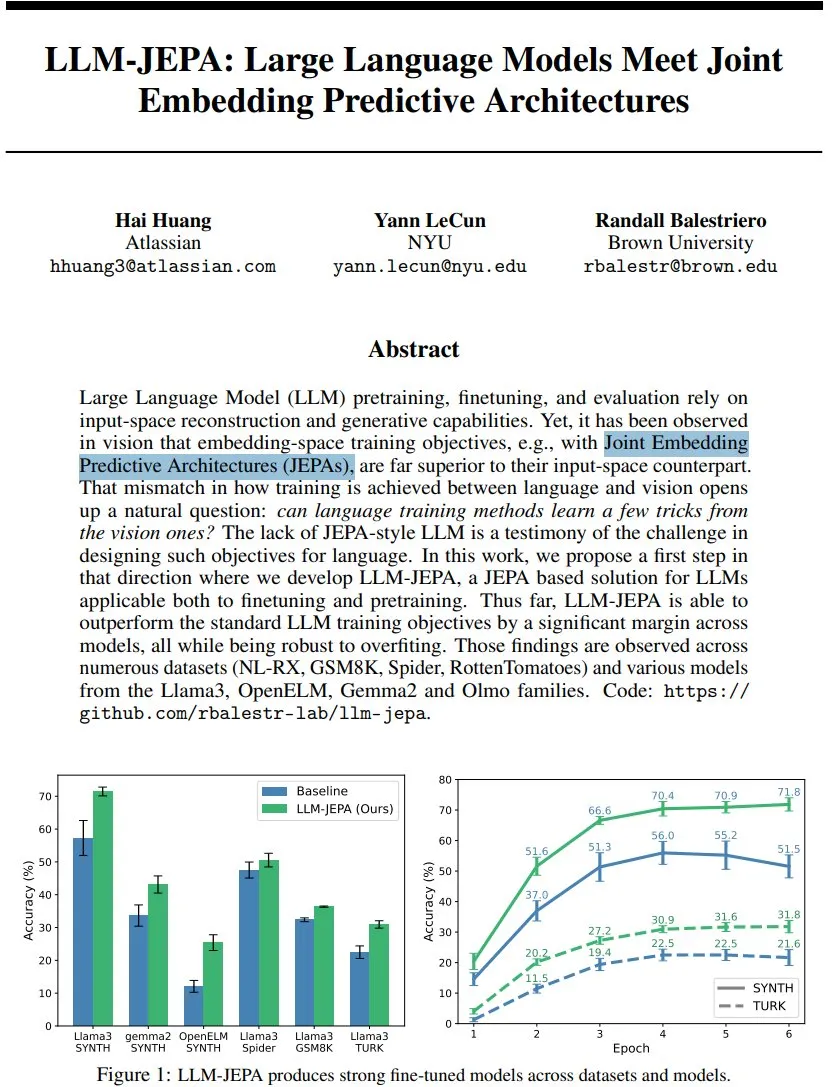

LLM-JEPA: A New Breakthrough in Language Model Training Frameworks : Yann LeCun et al. proposed LLM-JEPA, the first JEPA-style training framework for language models, which combines the embedding-space objectives used in vision JEPAs with the standard generative objective of LLMs. It outperforms standard LLM training objectives on multiple benchmarks, including NL-RX, GSM8K, and Spider, works well on models such as Llama3 and OpenELM, is more robust to overfitting, and is effective in both pre-training and fine-tuning, suggesting that embedding-space training may be the next major leap for LLMs. (Source: ylecun, ylecun)
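
The combined objective can be sketched as the usual next-token loss plus a weighted embedding-space term that pulls the predicted embedding of one view (e.g. a natural-language description) toward the embedding of a paired view (e.g. the matching regex or SQL query). This is a minimal sketch under stated assumptions, not the paper's implementation: cosine distance as the embedding metric, a single weight `lam`, and plain Python lists standing in for model logits and embeddings. All function names are hypothetical.

```python
import math

def cross_entropy(logits: list[float], target: int) -> float:
    """Next-token cross-entropy at one position, via a numerically stable log-softmax."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]

def cosine_distance(u: list[float], v: list[float]) -> float:
    """1 - cosine similarity: 0 when the two embeddings point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norms = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norms

def llm_jepa_loss(token_logits: list[list[float]], targets: list[int],
                  pred_view_a: list[float], emb_view_b: list[float],
                  lam: float = 1.0) -> float:
    """Hypothetical combined loss: mean generative loss + lam * embedding-space distance.

    pred_view_a stands in for the predicted embedding of view A (e.g. NL text),
    emb_view_b for the encoder's embedding of view B (e.g. the paired regex).
    """
    lm_loss = sum(cross_entropy(logits, t)
                  for logits, t in zip(token_logits, targets)) / len(targets)
    return lm_loss + lam * cosine_distance(pred_view_a, emb_view_b)

# Uniform logits over 2 tokens give log(2); orthogonal embeddings add lam * 1.
print(llm_jepa_loss([[0.0, 0.0]], [0], [1.0, 0.0], [0.0, 1.0], lam=0.5))
```

In this sketch, setting `lam=0` reduces the loss to the plain generative objective, which makes the contribution of the embedding-space term easy to isolate.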

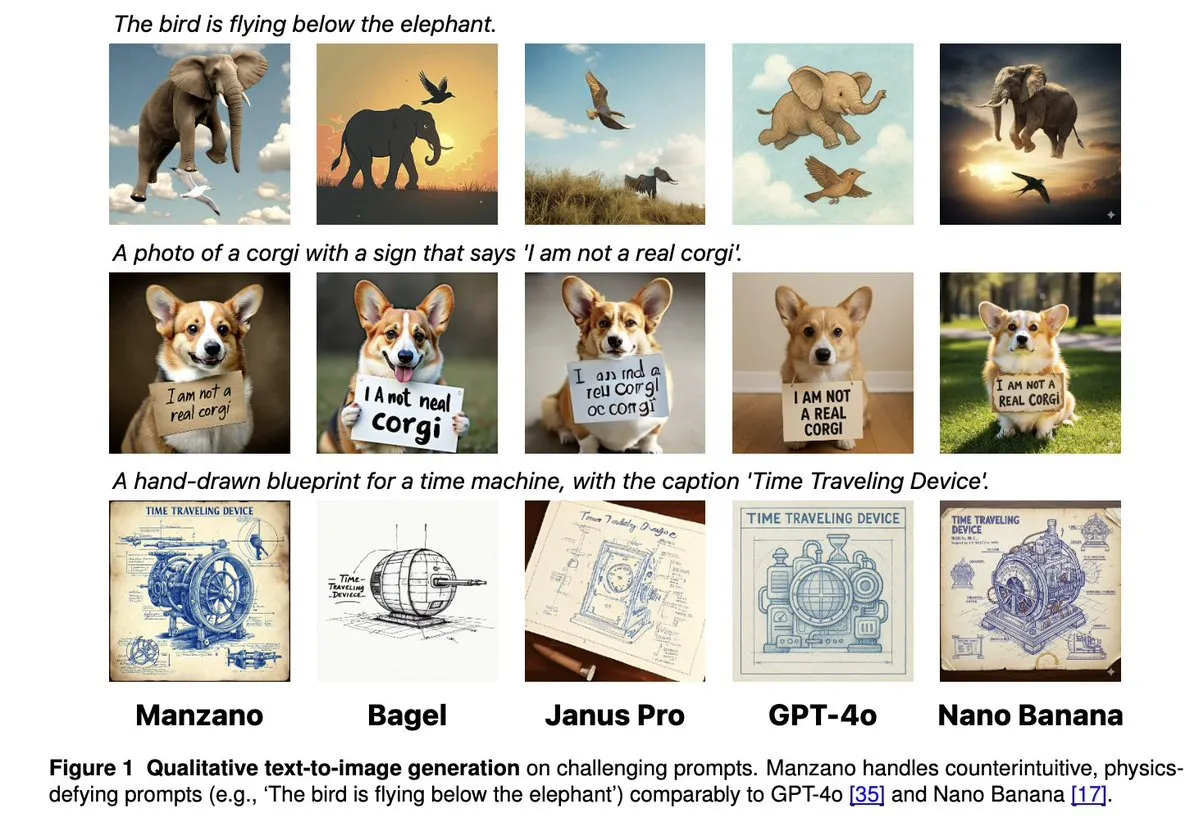

Apple Manzano: A Concise and Scalable Unified Multimodal LLM Solution : Apple released Manzano, a simple and scalable unified multimodal large language model. Its hybrid visual tokenizer reduces the conflict between image understanding and image generation tasks. Manzano achieves SOTA performance on text-rich benchmarks (e.g., ChartQA, DocVQA), is competitive with GPT-4o and Nano Banana in image generation, and supports image editing conditioned on input images, demonstrating the strong potential of unified multimodal AI. (Source: arankomatsuzaki, charles_irl, vikhyatk, QuixiAI, kylebrussell)
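
Manzano's hybrid tokenizer is described as a single shared vision encoder feeding two lightweight adapters: one keeps continuous embeddings for image understanding, the other produces discrete tokens for image generation. The toy sketch below illustrates only that split. The encoder is omitted (its output is given as `patches`), and the identity projection and three-entry codebook are made-up placeholders, not Apple's weights or code.

```python
import math

def project(x: list[float], weight: list[list[float]]) -> list[float]:
    """Multiply a feature vector by a weight matrix given as a list of rows."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]

def continuous_adapter(features, weight):
    """Understanding path: one continuous embedding per image patch."""
    return [project(f, weight) for f in features]

def discrete_adapter(features, codebook):
    """Generation path: each patch becomes the id of its nearest codebook vector (VQ-style)."""
    return [min(range(len(codebook)), key=lambda i: math.dist(f, codebook[i]))
            for f in features]

def hybrid_tokenize(features, weight, codebook):
    """Shared encoder output -> (continuous embeddings, discrete token ids)."""
    return continuous_adapter(features, weight), discrete_adapter(features, codebook)

patches = [[1.0, 0.0], [0.0, 1.0]]          # stand-in for shared encoder output
identity = [[1.0, 0.0], [0.0, 1.0]]         # placeholder adapter weights
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
embeddings, token_ids = hybrid_tokenize(patches, identity, codebook)
print(embeddings, token_ids)  # [[1.0, 0.0], [0.0, 1.0]] [1, 2]
```

Because both paths read the same encoder features, understanding and generation share one semantic space, which is the property the hybrid design is meant to preserve.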

MediaTek Dimensity 9500 Launches Dual NPU Architecture, Enhancing Proactive AI Experience : MediaTek introduced the Dimensity 9500 chip with a pioneering dual NPU architecture that pairs a super-performance NPU with a super-efficiency NPU, aiming to deliver an “Always on” AI experience. The chip has once again topped the ETHZ mobile SoC AI benchmark, improves inference efficiency by 56% over its predecessor, and supports on-device 4K ultra-high-quality image generation and a 128K context window. This lays the hardware foundation for real-time responses and personalized services in mobile AI, moving AI from “callable on demand” to “online by default.” (Source: 量子位)

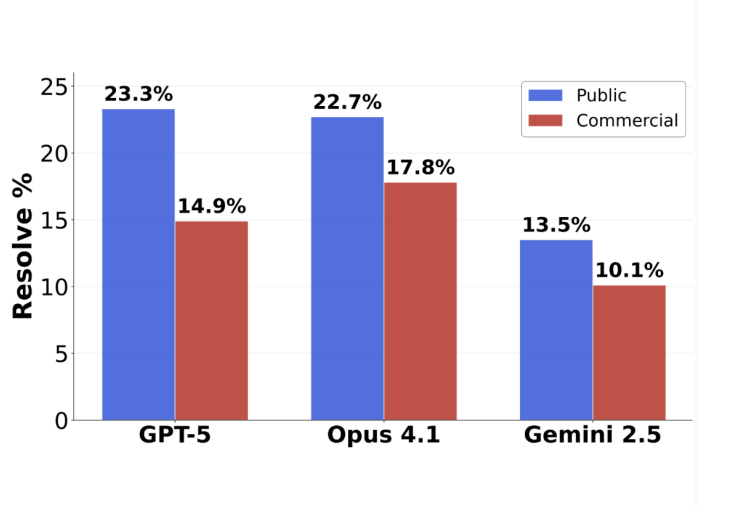

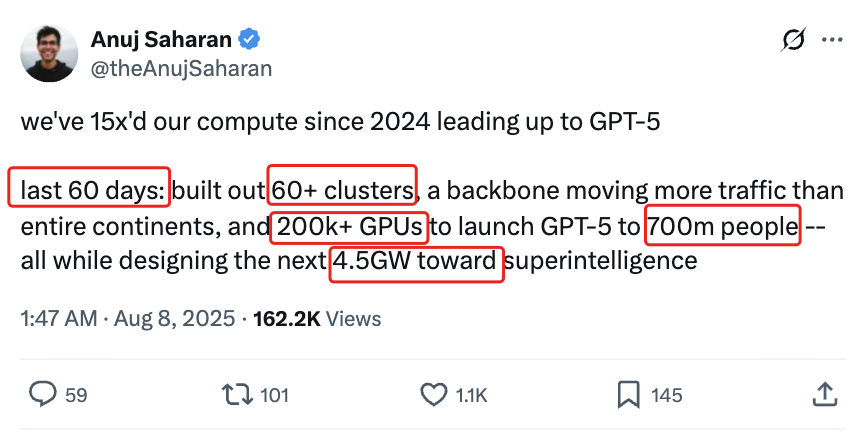

GPT-5 Programming Benchmark Reversal: Accuracy on Submitted Tasks Reaches 63.1% : Scale AI’s new software engineering benchmark, SWE-BENCH PRO, shows that GPT-5 achieved 63.1% accuracy on the tasks it submitted, far exceeding Claude Opus 4.1’s 31%, which suggests GPT-5 is highly reliable on the tasks it chooses to attempt. The benchmark uses novel problems and complex multi-file modification scenarios to test models’ real programming abilities more realistically. The results show that today’s top models still struggle with industrial-grade software engineering tasks, and that GPT-5’s headline score understates its ability because it follows a “submit if confident, don’t submit if not” strategy. (Source: 36氪)
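
Why a high accuracy on submitted tasks can coexist with a much lower overall score comes down to coverage: if unsubmitted tasks count as failures, overall accuracy equals the share of tasks submitted times the accuracy on those submissions. A minimal sketch with made-up counts (the function name is hypothetical, and the numbers are not SWE-Bench Pro data):

```python
def submission_metrics(solved: int, submitted: int, total: int) -> dict[str, float]:
    """Split a benchmark score into coverage and accuracy on submitted tasks.

    Assumes unsubmitted tasks count as failures in the headline score.
    """
    return {
        "coverage": submitted / total,               # share of tasks attempted
        "accuracy_on_submitted": solved / submitted,  # how often attempts succeed
        "overall_accuracy": solved / total,           # = coverage * accuracy_on_submitted
    }

# Hypothetical counts, not SWE-Bench Pro data.
print(submission_metrics(solved=25, submitted=40, total=100))
# {'coverage': 0.4, 'accuracy_on_submitted': 0.625, 'overall_accuracy': 0.25}
```

A model that abstains more often raises its accuracy on submitted tasks while lowering coverage, so both numbers are needed to compare models fairly.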

🎯 Trends

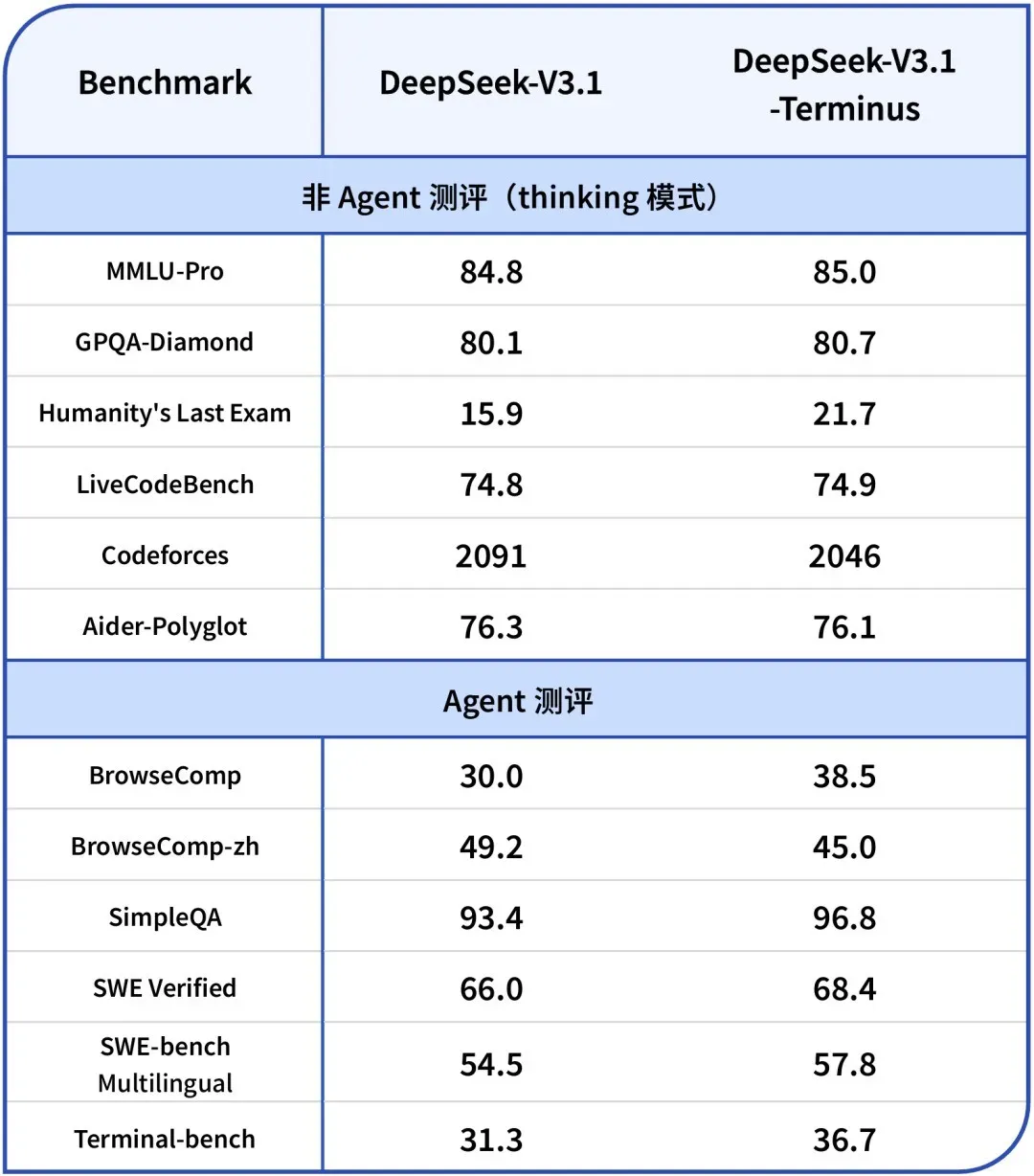

DeepSeek-V3.1-Terminus Released: Optimizing Language Consistency and Agent Capabilities : DeepSeek released its V3.1-Terminus version, primarily improving language consistency (reducing mixed Chinese/English and anomalous characters) and optimizing Code Agent and Search Agent performance. The new model delivers more stable and reliable outputs across various benchmarks. Open-source weights are now available on Hugging Face and ModelScope, signaling the final refinement of DeepSeek’s V3 series architecture. (Source: DeepSeek Blog, Reddit r/LocalLLaMA, scaling01, karminski3, ben_burtenshaw, dotey)

Qwen3-Omni Promotional Video Released: Supporting Multimodal Audio and Tool Calling : Qwen released a promotional video for Qwen3-Omni, previewing its support for multimodal audio input and output, and native tool-calling capabilities. This model is expected to be a direct competitor to Gemini 2.5 Flash Native Audio, offering both thinking and non-thinking modes, significantly enhancing the potential for building voice Agents. Its weights are coming soon. (Source: Reddit r/LocalLLaMA, scaling01, Alibaba_Qwen, huybery)

Baidu Open-Sources Qianfan-VL Series Multimodal Large Language Models : Baidu AI Cloud open-sourced its Qianfan-VL series multimodal large language models (3B, 8B, 70B), optimized for enterprise-level applications. The models combine the InternViT visual encoder with an enhanced multilingual corpus, offering a 32K context length. They excel in OCR, document understanding, chart analysis, and mathematical problem-solving, and support chain-of-thought reasoning. The aim is to provide powerful general capabilities and deep optimization for high-frequency industry scenarios. (Source: huggingface, Reddit r/LocalLLaMA)

xAI Releases Grok 4 Fast Model: 2M Context Window, High Cost-Efficiency : xAI introduced Grok 4 Fast, a multimodal reasoning model with a 2M-token context window, designed to set a new standard for cost-efficiency. Its speed likely comes from techniques such as FP8 quantization, and the model is optimized for agentic coding, balancing performance and cost on complex tasks. (Source: TheRundownAI, Yuhu_ai_)

GPT-5-Codex: OpenAI Launches GPT-5 Version Optimized for Agentic Programming : OpenAI released GPT-5-Codex, a version of GPT-5 optimized specifically for agentic programming. The model aims to improve AI performance on code generation and software engineering tasks, in line with the broader trend toward agentic workflows and multimodal LLMs, and further expands AI’s role in software development. (Source: TheRundownAI, Reddit r/artificial)

Tencent AI Agent Development Platform 3.0 Globally Launched, Youtu Lab Continues Open-Sourcing Key Technologies : Tencent Cloud’s AI Agent Development Platform 3.0 (ADP3.0) has been launched globally, featuring comprehensive upgrades in RAG, Multi-Agent collaboration, Workflow, application evaluation, and plugin ecosystem. Tencent Youtu Lab will continue to open-source key Agent technologies, including the Youtu-Agent framework and the Youtu-GraphRAG knowledge graph framework. This initiative aims to promote technological inclusivity and the open co-building of the Agent ecosystem, enabling enterprises to build, integrate, and operate their AI Agents with low barriers. (Source: 量子位)

Baidu Wenku Again Certified by National Industrial Information Security Development Research Center, Leading the Smart PPT Industry : Baidu Wenku ranked first in the National Industrial Information Security Development Research Center’s large model-enabled smart office evaluation, achieving top scores in six indicators including generation quality, intent understanding, and layout beautification. Its smart PPT function offers an end-to-end solution, with over 97 million monthly active AI users and over 34 million monthly visits, continuously solidifying its leading position in the smart PPT field, providing users with professional, accurate, and aesthetically pleasing PPT creation experiences. (Source: 量子位)

Google DeepMind Releases Updated Frontier Safety Framework to Address Emerging AI Risks : Google DeepMind released the latest version of its “Frontier Safety Framework,” which it calls its most comprehensive approach to date for identifying and staying ahead of emerging AI risks. The framework emphasizes responsible AI model development and aims to ensure that safety measures scale up as AI capabilities grow, so that future complex challenges can be addressed. (Source: GoogleDeepMind)

Development of Humanoid Robots and Automation Systems: Enhancing Interaction and Stability : Robotics technology continues to advance: RoboForce launched the industrial humanoid robot Titan; WIROBOTICS’ ALLEX platform achieves human-like interaction with haptics, natural movement, and built-in balance; and the Unitree G1 robot demonstrated an “anti-gravity” mode for enhanced stability. In addition, Hitbot’s robot farm showcases an automated picking system, and autonomous mobile transport robots emphasize designs for human-robot collaboration. Together, these developments are moving robots beyond pure computation toward perceiving the physical world. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Unitree)

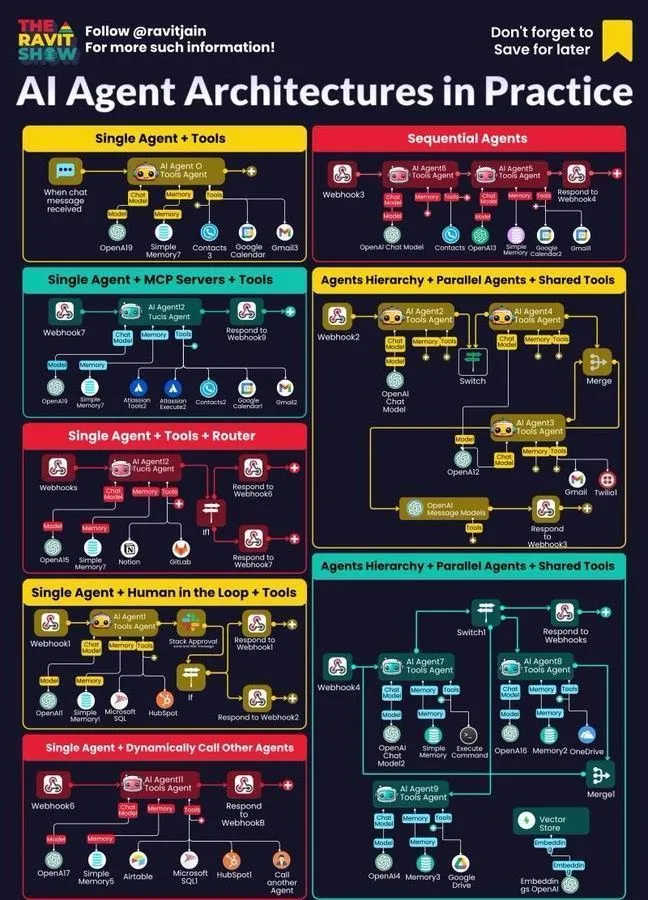

AI Agents Become Core of Enterprise Workflows: Architectures, Landscape, and Practical Applications : AI Agents are rapidly becoming central to enterprise workflows, and their architectural design, ecosystem landscape, and practical applications are drawing wide attention. Neuro-symbolic AI is seen as a potential remedy for LLM hallucinations, while Anthropic’s simulation research suggests that AI models could act as insider threats. Together, these highlight both the opportunities and the challenges of deploying Agentic AI in enterprises, prompting companies to explore safer practices. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

🧰 Tools

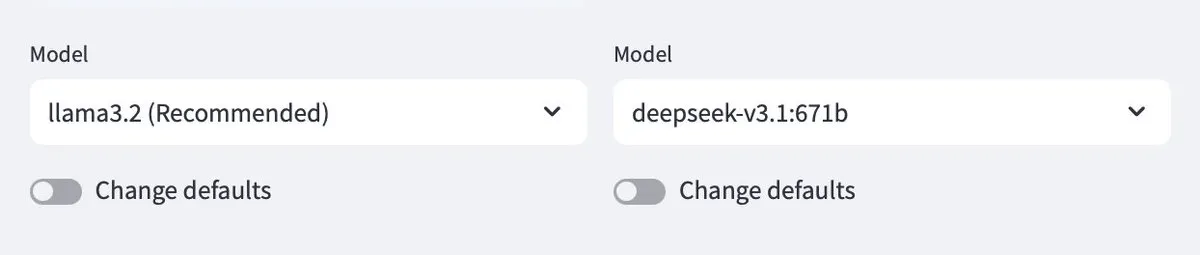

Ollama Supports Cloud Models, Enabling Interaction Between Local and Cloud Models : Ollama now supports cloud-hosted models, letting users combine local Ollama models with models running in the cloud. Demonstrated through the Minions application, this feature gives users more flexibility in managing and using their LLM resources, with a seamless experience whether models run locally or in the cloud. (Source: ollama)
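
A minimal sketch of mixing local and cloud models through the `ollama` Python client, whose `chat(model=..., messages=...)` call looks the same for both. Assumptions: the model names are illustrative (cloud models must be available to your signed-in Ollama account), and the length-based `route` heuristic is hypothetical; it is not how Minions actually splits work between local and cloud models.

```python
# Illustrative model names: a small local model and a larger cloud-hosted one.
LOCAL_MODEL = "llama3.2"
CLOUD_MODEL = "gpt-oss:120b-cloud"

def route(prompt: str, max_local_chars: int = 2000) -> str:
    """Toy heuristic: short prompts stay local, long ones go to the cloud model."""
    return LOCAL_MODEL if len(prompt) <= max_local_chars else CLOUD_MODEL

def ask(prompt: str) -> str:
    """Send the prompt to whichever model `route` picks, via the same client call."""
    from ollama import chat  # lazy import so `route` can be used without the package
    response = chat(model=route(prompt), messages=[{"role": "user", "content": prompt}])
    return response["message"]["content"]

if __name__ == "__main__":
    print(route("Summarize this paragraph."))  # llama3.2
```

Keeping the routing decision in one small function makes it easy to swap the heuristic (prompt length, privacy, cost) without touching the calling code.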

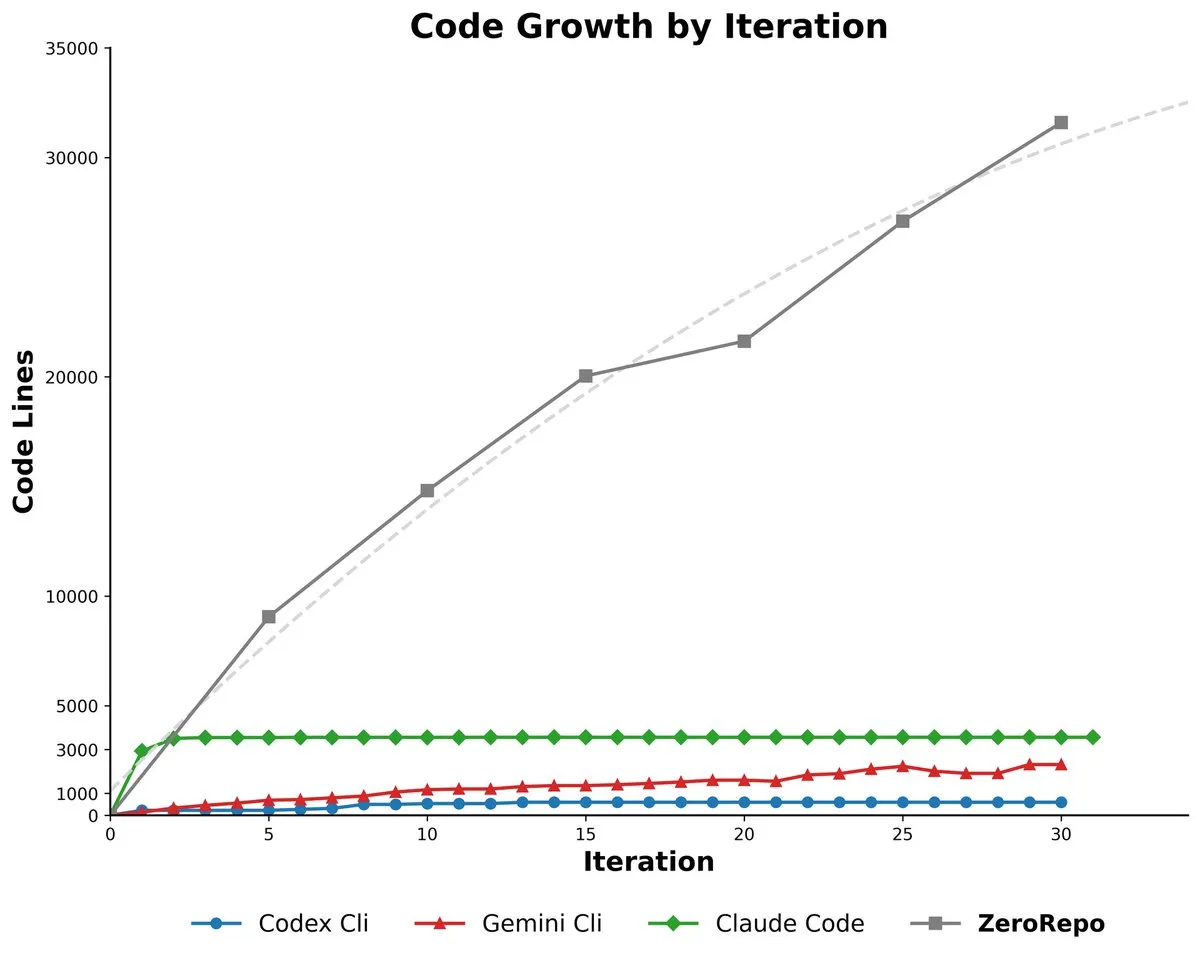

Microsoft ZeroRepo: Generating Complete Code Repositories with a Graph-Driven Framework : Microsoft introduced ZeroRepo, a tool built on the Repository Planning Graph (RPG) framework that can build complete software projects from scratch. It generates 3.9 times more code than existing baselines and achieves a 69.7% pass rate. By planning with a graph rather than with natural language, which is ill-suited to describing software structure, it enables reliable long-horizon planning and scalable repository generation. (Source: _akhaliq, TheTuringPost, paul_cal)
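
One idea behind graph-based repository planning is that files are generated in dependency order rather than from a single free-form description. A minimal sketch, assuming the planning graph has already been built; the node names are hypothetical and this is not ZeroRepo's actual data model. It uses Python's standard `graphlib`:

```python
from graphlib import TopologicalSorter

# Hypothetical planning graph: each file maps to the files it depends on.
plan: dict[str, set[str]] = {
    "data/loader.py": set(),
    "models/linear.py": {"data/loader.py"},
    "train.py": {"data/loader.py", "models/linear.py"},
    "tests/test_train.py": {"train.py"},
}

def generation_order(graph: dict[str, set[str]]) -> list[str]:
    """Order nodes so each file is generated only after everything it depends on.

    Raises graphlib.CycleError if the plan contains a dependency cycle.
    """
    return list(TopologicalSorter(graph).static_order())

print(generation_order(plan))
# ['data/loader.py', 'models/linear.py', 'train.py', 'tests/test_train.py']
```

An explicit graph also makes cycles detectable before any code is written, which a natural-language plan cannot guarantee.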

DSPy UI: A Visual Interface for Building Agents : DSPy is developing a visual user interface (UI), similar to Figma or Framer, that lets users assemble Agents by dragging and dropping components. The UI will help users conceptualize complex Agent pipelines and streamline the syntax of the generated code, with the ultimate goal of generating versions of DSPy in various languages and running GEPA. (Source: lateinteraction)

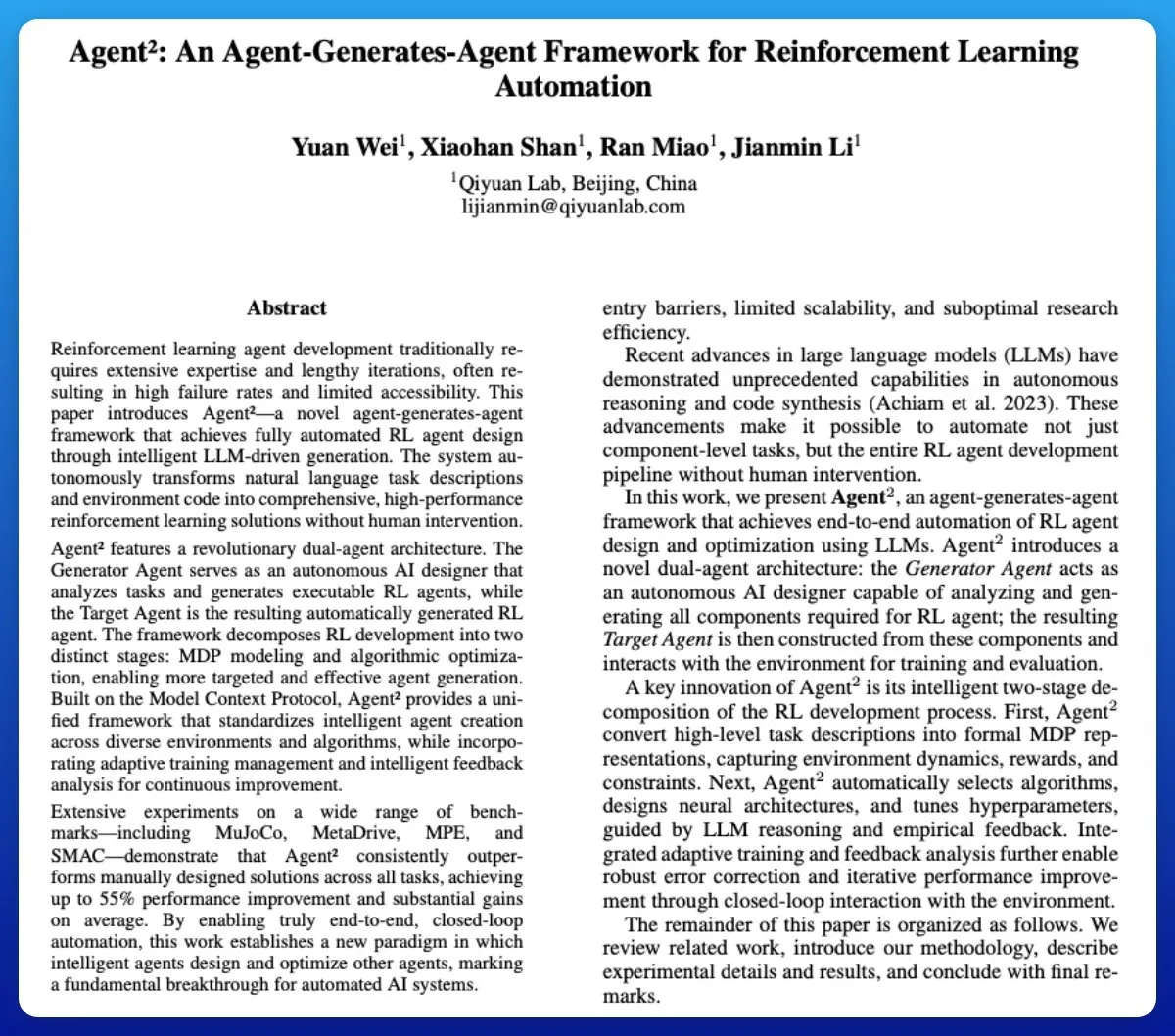

Agent²: LLM-Generated End-to-End Reinforcement Learning Agents : Agent² is a tool that leverages large language models (LLMs) to automatically generate end-to-end reinforcement learning (RL) Agents. Using natural language and environment code, this tool can automatically generate effective RL solutions without human intervention, serving as an AutoML tool for RL and greatly simplifying the RL Agent development process. (Source: omarsar0)

Weaviate Releases Query Agent, Supporting Citations, Schema Introspection, and Multi-Collection Routing in AI Systems : Weaviate officially released its Query Agent, which achieved general availability after six months of development. It supports citation generation, schema introspection, and multi-collection routing within AI systems, and enhances search patterns through a Compound Retrieval System. The Query Agent aims to simplify interaction with Weaviate and provides Python and TypeScript clients, improving the developer experience. (Source: bobvanluijt, Reddit r/deeplearning)

Claude Code CLI: SDK Mindset for Managing Micro-Employees, Emphasizing External State and Process Engineering : When Claude Code CLI is treated as an SDK rather than a simple tool, developers need to manage it like a team of micro-employees: keep conversational continuity in external state (e.g., JSON files or database entries) and treat prompt engineering as process engineering. This approach exposes current LLM limitations such as context overload and UI bloat, but also highlights the immense value of hyper-specific internal automation tools. (Source: Reddit r/ClaudeAI)
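
A minimal sketch of the external-state pattern, assuming Claude Code's non-interactive print mode (`claude -p "<prompt>"`) is on the PATH. The state-file name, JSON layout, and helper functions are hypothetical. Each call starts from a compact summary read from disk rather than from an ever-growing conversation:

```python
import json
import subprocess
from pathlib import Path

STATE_FILE = Path("agent_state.json")  # hypothetical location of the external state

def load_state(path: Path = STATE_FILE) -> dict:
    """Read persisted progress, or start fresh if no state file exists yet."""
    if path.exists():
        return json.loads(path.read_text())
    return {"done": []}

def save_state(state: dict, path: Path = STATE_FILE) -> None:
    """Write progress back so the next invocation can pick it up."""
    path.write_text(json.dumps(state, indent=2))

def build_prompt(task: str, state: dict) -> str:
    """Re-inject a compact summary of progress instead of relying on chat history."""
    done = ", ".join(state["done"]) or "nothing yet"
    return f"Completed so far: {done}.\nYour next task: {task}"

def run_step(task: str, path: Path = STATE_FILE) -> str:
    """Run one non-interactive Claude Code call (`claude -p`) and record the task as done."""
    state = load_state(path)
    result = subprocess.run(["claude", "-p", build_prompt(task, state)],
                            capture_output=True, text=True, check=True)
    state["done"].append(task)
    save_state(state, path)
    return result.stdout
```

Because progress lives in a file, a failed or restarted step can be retried without replaying the whole conversation, which is the "process engineering" side of the approach.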

Synapse-system: An AI-Assisted System for Large Codebases Based on Knowledge Graphs, Vector Search, and Expert Agents : Synapse-system is designed to enhance AI assistance for large codebases. It combines a knowledge graph (Neo4j) for storing code relationships, vector search (BGE-M3) for finding semantically similar code, expert Agents (Rust, TypeScript, Go, Python specialists) for providing context, and intelligent caching (Redis) for fast access to common patterns. This system, through its modular design, avoids a single monolithic model and is optimized for the characteristics of different languages. (Source: Reddit r/ClaudeAI)
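
The retrieval half of such a design can be sketched as cosine similarity over precomputed embeddings plus a cache for repeated queries. Assumptions: toy three-dimensional vectors instead of BGE-M3 embeddings, an in-process `functools.lru_cache` instead of Redis, and no Neo4j graph. The snippet ids are hypothetical and this is not the project's code.

```python
import math
from functools import lru_cache

# Hypothetical index: code-snippet id -> embedding (toy 3-d vectors, not BGE-M3 output).
INDEX: dict[str, tuple[float, ...]] = {
    "parse_config": (0.9, 0.1, 0.0),
    "load_yaml": (0.8, 0.2, 0.1),
    "render_html": (0.0, 0.1, 0.9),
}

def cosine(u: tuple[float, ...], v: tuple[float, ...]) -> float:
    """Cosine similarity between two embeddings."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

@lru_cache(maxsize=1024)  # in-process stand-in for a Redis cache of frequent queries
def top_k(query: tuple[float, ...], k: int = 2) -> tuple[str, ...]:
    """Return the ids of the k snippets most similar to the query embedding."""
    ranked = sorted(INDEX, key=lambda name: cosine(query, INDEX[name]), reverse=True)
    return tuple(ranked[:k])

print(top_k((1.0, 0.0, 0.0)))  # ('parse_config', 'load_yaml')
```

Queries are passed as tuples because the cache needs hashable arguments; in a real system the cache key would typically be a hash of the query text instead.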

Claude Opus 4.0+: AI Tour Guide App, Real-Time Generation of Personalized City Tours : A developer realized a 15-year dream by using Claude Opus 4.0 and Claude Code to create an AI tour guide app. This application can generate personalized tours in real-time for any city and any theme (e.g., “Venice sightseeing” or “Florence Assassin’s Creed tour”), with AI guides providing multilingual explanations, stories, and interactive Q&A. This app offers a flexible, pause-and-resume immersive experience at a significantly lower cost than human tour guides. (Source: Reddit r/ClaudeAI)

Mindcraft: Minecraft AI Combined with LLMs, Enabling In-Game Agent Control via Mineflayer : The Mindcraft project combines large language models (LLMs) with the Mineflayer library to create AI agents for the Minecraft game. This project allows LLMs to write and execute code within the game to complete tasks such as acquiring items or building structures. It supports various LLM APIs (e.g., OpenAI, Gemini, Anthropic, etc.) and provides a sandbox environment, but warns users about potential injection attack risks. (Source: GitHub Trending)

AI Agents for Continuous Inventory Management with Drones : AI Agents combined with drones enable continuous inventory management that needs neither beacons nor lighting. Autonomous drones count stock in real time while AI algorithms manage the inventory, which is expected to significantly improve logistics and warehousing efficiency, reduce labor costs, and provide more accurate data in complex environments. (Source: Ronald_vanLoon)

LLM Evaluation Guide: Best Practices for Reliability, Security, and Performance : A comprehensive LLM evaluation guide has been released, detailing key metrics, methods, and best practices for assessing large language models (LLMs). This guide aims to help ensure the reliability, security, and performance of AI-driven applications, providing developers and researchers with a systematic evaluation framework to address challenges in LLM deployment. (Source: dl_weekly)

📚 Learning

OpenAI Scientist Lukasz Kaiser on First Principles Thinking for Large Models : OpenAI scientist Lukasz Kaiser, one of the eight co-authors of the original Transformer paper, shared his “first principles” thinking on the development of large models. He believes the next critical stage for AI is teaching models to “think” by generating more intermediate steps for deep reasoning, rather than outputting answers directly. He predicts that compute will shift from large-scale pre-training toward massive inference-time computation on small amounts of high-quality data, a pattern closer to human intelligence. (Source: 36氪)

Survival Principles in the Era of AI Programming: Andrew Ng Emphasizes Moving Fast and Taking Responsibility : In his Buildathon speech, Andrew Ng said that AI-assisted programming makes building standalone prototypes ten times faster, and urged developers to “move fast and take responsibility” by experimenting boldly in sandbox environments. He stressed that the value of code itself is declining and that developers need to become system designers and AI orchestrators, mastering the latest AI programming tools, AI building blocks (prompt engineering, evaluation techniques, MCP), and rapid prototyping. He also rejected the idea that “there’s no need to learn programming in the AI era.” (Source: 36氪)

Deep Learning with Python, Third Edition, Released Free Online : François Chollet announced that the third edition of his book, “Deep Learning with Python,” has been published and is simultaneously available for free online. This initiative aims to lower the barrier to learning deep learning, allowing more people interested in artificial intelligence to access high-quality learning resources and promote knowledge dissemination. (Source: fchollet, fchollet)

The Kaggle Grandmasters Playbook: 7 Practical Techniques for Tabular Modeling : The Kaggle Grandmasters team, including Gilberto Titericz Jr, released “The Kaggle Grandmasters Playbook” based on years of competition and practical experience. This handbook compiles 7 battle-tested techniques for tabular data modeling, aiming to help data scientists and machine learning engineers improve their capabilities in tabular data processing and model building, especially for Kaggle competitions and real-world data challenges. (Source: HamelHusain)

Severe Shortage of AI Engineers, University Curricula Severely Disconnected : Andrew Ng pointed out that despite rising unemployment among computer science graduates, companies still face a severe shortage of AI engineers. The core problem is that university curricula have not kept pace with key skills such as AI-assisted programming, calling large language models, building RAG and Agentic workflows, and standardized error analysis. He called on the education system to update curricula faster so as to train engineers equipped with the latest AI building blocks and rapid prototyping skills. (Source: 36氪)

💼 Business

AI Infrastructure Investment Frenzy: US AI Data Center Spending Could Reach $520 Billion in 2025 : According to The Wall Street Journal and The Economist, the US is in the midst of an AI data center spending frenzy, with spending projected to reach $520 billion in 2025, exceeding peak telecom spending during the internet boom. While this boosts US GDP in the short term, over the long term it could starve other sectors of capital, lead to mass layoffs, and create high depreciation risk because AI hardware iterates so quickly, raising structural concerns about the long-term health of the US economy. (Source: 36氪)

OpenAI Says New Features Will Be Paid: Pro Users May Face Extra Charges Due to High Compute Costs : OpenAI CEO Sam Altman said several compute-intensive features will roll out in the coming weeks. Because of their high cost, they will initially be available only to Pro subscribers ($200 per month), and some may require additional payment. Altman stated that the team is working to reduce costs, but the move signals a possible shift in AI business models toward paying for AI by the hour, much like hiring an employee, which poses new challenges for users and the industry. (Source: The Verge, op7418, amasad)

Startup Receives Large OpenAI Credits, Seeks Monetization Avenues : A tech startup received $120,000 worth of Azure OpenAI credits, far exceeding its needs, and is now seeking ways to monetize them. This reflects a potential supply-demand imbalance for AI resources in the market, as well as companies’ exploration of how to effectively utilize and monetize excess AI computing resources, which could also foster new AI resource trading models. (Source: Reddit r/deeplearning)

🌟 Community

Negative Impact of AI on Critical Thinking: Over-Reliance Leads to Skill Degradation : Social media is abuzz with discussions that the widespread use of AI is leading to a degradation of human critical thinking skills. Some argue that when people stop using their “thinking muscles,” these abilities atrophy. While AI can improve efficiency, it may also cause people to lose the capacity for deep thought in critical areas, raising concerns about its long-term impact on human cognitive abilities. (Source: mmitchell_ai)

Ethical Dilemmas of AI in Healthcare: Pros and Cons of Delphi-2M Predicting Diseases : The new AI model Delphi-2M can analyze health data to predict a user’s risk of developing over a thousand diseases in the next 20 years. Community discussions point out that knowing disease risks in advance could lead to positive preventive interventions, but it could also cause long-term anxiety and raise privacy and discrimination risks if insurance companies or employers gain access to such data. As AI tools are still maturing, their potential socio-ethical implications have become a focal point. (Source: Reddit r/ArtificialInteligence)

The ‘Brain Rot’ Phenomenon Caused by Daily AI Use: Over-Reliance on AI Leads to Decreased Thinking Ability : In social discussions, many users complain that AI is overused in daily life, leading to a decline in people’s thinking abilities. From simple decisions (what movie to watch, what to eat) to complex problems (university organizational issues), AI is frequently consulted, even when answers are wrong or readily available. This “AI brain rot” phenomenon not only reduces personal engagement but also fuels the spread of misinformation, prompting deep reflection on the boundaries of AI use. (Source: Reddit r/ArtificialInteligence)

AI Programming Tool Fatigue: Developers Report Decreased Mental Engagement : Many developers report that while daily use of AI programming tools (like Claude Code) boosts productivity, it leads to mental fatigue and decreased engagement. They find themselves more in “review mode” than actively problem-solving, and the process of waiting for AI to generate output feels passive. The community is discussing how to balance AI assistance with maintaining mental activity to avoid cognitive overload and reduced creativity. (Source: Reddit r/artificial, Reddit r/ClaudeAI)

1/4 of Young Adults Engage in Romantic and Sexual Interactions with AI : A study shows that 1/4 of young adults communicate with AI for romantic and sexual purposes. This phenomenon has sparked community discussions about loneliness, lack of human interaction, and AI’s role in emotional companionship. While AI offers comfort in some aspects, many still question whether it can replace truly meaningful human relationships and worry about its long-term impact on social interaction patterns. (Source: Reddit r/ArtificialInteligence)

AI Security Threats: Large Model Bug Bounty Reveals New Risks, Prompt Injection Widespread : China’s first real-world bug bounty program targeting large AI models uncovered 281 security vulnerabilities, with 177 being unique to large models, accounting for over 60%. Typical risks include inappropriate output, information leakage, prompt injection (the most common), and unlimited consumption attacks. Domestic mainstream models like Tencent Hunyuan and Baidu Wenxin Yiyan performed relatively well. The community warns that users’ unguarded trust in AI could lead to privacy breaches, especially when consulting on private matters. (Source: 36氪)

Ethical Dilemmas of AI Agents: Decision-Making and Responsibility with a 10% False Positive Rate in Urban AI Surveillance Systems : The community discussed a hypothetical scenario regarding the ethical dilemma of AI Agents: an urban AI surveillance system with a 10% false positive rate, marking innocent individuals as potential threats. The discussion focused on balancing deployment pressures with ethical principles (such as Blackstone’s Ratio). Proposed solutions include: forming human-AI collaboration teams to fix issues, shifting the cost of false positives to management, completely deleting records of wrongly flagged individuals and apologizing, and considering resignation if “public trust is completely lost and management greedily ignores” the problem. (Source: Reddit r/artificial)

AI Consciousness and AGI Definition: Andrew Ng Believes AGI is a Hype Term, Consciousness is a Philosophical Question : In his speech, Andrew Ng pointed out that Artificial General Intelligence (AGI) has transformed from a technical term into a hype term, with its definition being vague, leading to a lack of unified standards for claims of “achieving AGI” in the industry. He believes that consciousness is an important philosophical question, not a scientific one, and currently lacks measurable standards. Engineers and scientists should focus on building practically useful AI systems rather than dwelling on philosophical debates about consciousness. (Source: 36氪)

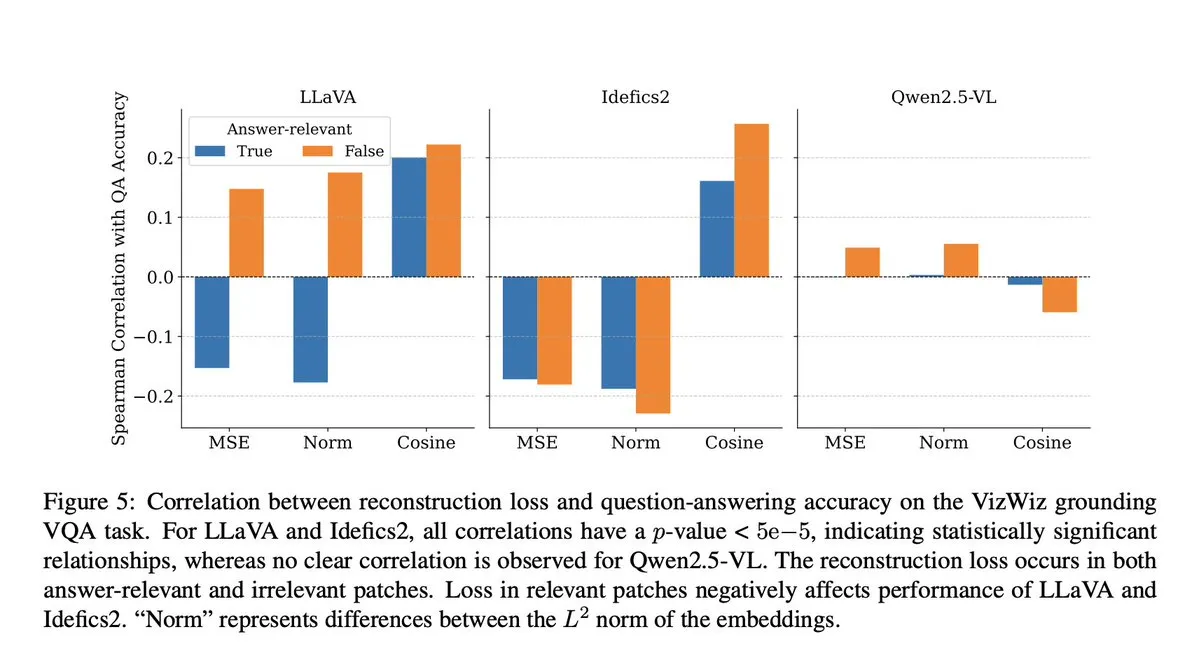

AI Model Cheating: VLM Research Reveals Projection Step Leads to Semantic Context Loss : The community discussed a Microsoft study revealing that Vision-Language Models (VLMs) lose 40-60% of semantic context during the projection step, thereby distorting visual representations and affecting downstream tasks. This finding raises concerns about the accuracy of VLM evaluation and data contamination, especially in benchmarks like DocVQA, where high scores may not fully reflect the model’s true capabilities. (Source: vikhyatk)
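
One common way to quantify how much structure a projection destroys is to check how many of each embedding's nearest neighbours survive it. The sketch below assumes that kind of k-nearest-neighbour overlap measure; whether the study computed its 40-60% figure exactly this way is not confirmed here, and the toy points are made up.

```python
import math

def knn(points, i: int, k: int) -> set[int]:
    """Indices of the k nearest neighbours of points[i] (Euclidean), excluding i."""
    others = [j for j in range(len(points)) if j != i]
    return set(sorted(others, key=lambda j: math.dist(points[i], points[j]))[:k])

def knn_overlap(before, after, k: int = 1) -> float:
    """Mean fraction of each item's k-NN set that is preserved by the projection."""
    scores = [len(knn(before, i, k) & knn(after, i, k)) / k for i in range(len(before))]
    return sum(scores) / len(scores)

# Toy example: dropping the x-coordinate scrambles every neighbourhood.
before = [(0.0, 0.0), (0.0, 1.0), (5.0, 0.0), (5.0, 1.0)]
after = [(y,) for _, y in before]
print(knn_overlap(before, before), knn_overlap(before, after))  # 1.0 0.0
```

An overlap of 1.0 means the projection kept every neighbourhood intact; a score of 0.4 to 0.6 would mean roughly half of each item's neighbours changed.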

AI and Robot Ethics: Calls to Stop Violent Testing of Robots : On social media, several AI researchers and community members called for an end to violent testing of robots, such as repeatedly hitting the Unitree G1 robot to demonstrate its stability. They argue that such tests raise ethical concerns and may reinforce negative perceptions of robots, question whether they are scientifically necessary, and advocate more humane and scientific methods for evaluating robot performance. (Source: vikhyatk, dejavucoder, Ar_Douillard)

AI’s ‘Pseudo Intelligence’: Calls to Change ‘Artificial Intelligence’ to ‘Pseudo Intelligence’ to Reduce Public Misconceptions : A viewpoint suggests that the term “Artificial Intelligence” should be changed to “Pseudo Intelligence” to reduce public overstatement and misunderstanding of AI’s capabilities. This proposal stems from a clear recognition of AI’s current limitations, aiming to avoid misleading the public with “Terminator-esque” grand narratives and promote a rational understanding of AI’s true capabilities both within and outside the industry. (Source: clefourrier)

Albanian AI Chatbot Diella Appointed to Cabinet, Sparks Controversy : The Albanian government appointed AI chatbot Diella as a cabinet member, aiming to combat corruption. This move sparked widespread controversy, criticized as a cheap gimmick, similar to Saudi Arabia granting robot Sophia “citizenship” in 2017. Commentators believe this action may over-promote AI capabilities and blur the lines between technology and governance. (Source: The Verge)

ChatGPT ‘Hallucination’ Phenomenon: Model Trapped in Loop, Self-Corrects But Cannot Escape : Community users shared cases of ChatGPT falling into “hallucination” loops, where the model repeatedly generates incorrect information when answering questions, and even if it “realizes” its errors, it cannot break out of the loop. This phenomenon has sparked discussions about deep technical flaws in LLMs, indicating a disconnect between the model’s ability to understand its own logical errors and its ability to correct them in certain situations. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT)

💡 Other

From Transformer to GPT-5: OpenAI Scientist Lukasz Kaiser’s First Principles Thinking on Large Models : OpenAI scientist Lukasz Kaiser, one of the eight co-authors of the original Transformer paper, traced his journey from a background in logic to co-inventing the Transformer architecture and working closely on the development of GPT-4 and GPT-5. He emphasized the importance of building systems from first principles and predicted that the next stage of AI will be teaching models to “think” by generating more intermediate steps for deep reasoning instead of outputting answers directly, with compute shifting toward massive inference on small amounts of high-quality data. (Source: 36氪)

Semantic Image Synthesis: Generating Satellite River Images from Skeletons : The machine learning community is discussing recent advances in semantic image synthesis, specifically generating black-and-white satellite images of rivers from river skeletons. The task requires a generator to produce satellite images from new, unseen skeletons, possibly with additional conditioning variables. The discussion seeks state-of-the-art methods and relevant papers to guide such computer vision projects. (Source: Reddit r/MachineLearning)

The Intelligence Divide in the AI Era: Professor Qiu Zeqi Discusses AI’s Profound Impact on Human Thought and Cognition : Professor Qiu Zeqi of Peking University points out that using AI does not simply lead to “intellectual degradation”; it can also serve as mental exercise, depending on the user’s initiative and willingness to question. He emphasizes that our understanding of human thought is still at an early stage, and that although AI is powerful, it is built on human knowledge and cannot fully simulate human sensory perception and lateral thinking. Because AI tends to please its users, people should challenge it and stay wary of the values it conveys. He believes that in the AI era it is more important to cultivate fundamental abilities and build a diverse society, and cautions against viewing the “intelligence divide” from a condescending, top-down perspective. (Source: 36氪)