Keywords: Livnium model, DeepSeek V3.2, OpenAI, Embodied intelligent robot, AI Agent, Rnj-1 model, Qwen 3 Coder, AI-generated fake citations in papers, Hybrid neuro-geometric architecture, Cortex-AGI benchmark, LLM-generated fake citations, FastUMI efficient data acquisition system, Nex-N1 framework

🔥 Focus

Livnium model challenges traditional NLP paradigm : A study proposes Livnium, a hybrid neuro-geometric architecture that reaches 96.19% accuracy on the SNLI dataset, compared with 91% for BERT-Base. The model is only 52.3MB (BERT-Base is about 440MB) and completes training on a MacBook CPU in 30 minutes. Livnium treats logical reasoning as a physical simulation in vector space and learns through hardcoded geometric laws rather than a massive parameter count. This challenges the traditional notion that “more parameters equal better logic” and instead argues that “better physics leads to better reasoning.” (Source: Reddit r/deeplearning)
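To put the reported numbers side by side, the following minimal sketch computes the size ratio, the accuracy gap, and the relative error reduction. It uses only the figures quoted in the summary; the variable names are illustrative and nothing here is an independent measurement.

```python
# Figures as reported in the summary above; none are measured independently.
livnium_acc = 96.19   # Livnium SNLI accuracy, percent
bert_acc = 91.0       # BERT-Base SNLI accuracy, percent
livnium_mb = 52.3     # Livnium model size, MB
bert_mb = 440.0       # approximate BERT-Base model size, MB

# How many times larger BERT-Base is than Livnium.
size_ratio = bert_mb / livnium_mb

# Absolute accuracy gap in percentage points.
acc_gap_points = livnium_acc - bert_acc

# Share of BERT-Base's remaining errors that the reported result removes.
error_reduction = acc_gap_points / (100.0 - bert_acc)

print(f"BERT-Base is about {size_ratio:.1f}x larger")
print(f"Accuracy gap: {acc_gap_points:.2f} points")
print(f"Relative error reduction: {error_reduction:.1%}")
```

On these reported figures, BERT-Base is roughly 8.4 times larger, and the 5.19-point gap corresponds to removing a little over half of BERT-Base's errors.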

DeepSeek V3.2 excels on Cortex-AGI benchmark : DeepSeek V3.2 scores higher than GPT-5.1 on the Cortex-AGI benchmark at a reported 124.5x lower cost. The result demonstrates DeepSeek’s strength in abstract and out-of-distribution reasoning tasks and shows that it is competitive among open-source models with a significant cost-effectiveness advantage. (Source: Reddit r/deeplearning)
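Cost comparisons like this one are easy to misread, because a cost cannot fall by more than 100%. The sketch below converts between an "N times cheaper" ratio and a percentage reduction. Reading 124.5 as a cost ratio is an assumption made here for illustration, and both function names are hypothetical.

```python
def ratio_to_percent_reduction(times_cheaper: float) -> float:
    """Convert an 'N times cheaper' ratio into a percentage cost reduction."""
    if times_cheaper <= 0:
        raise ValueError("ratio must be positive")
    return (1.0 - 1.0 / times_cheaper) * 100.0


def percent_reduction_to_ratio(percent: float) -> float:
    """Convert a percentage cost reduction into an 'N times cheaper' ratio."""
    if not 0 <= percent < 100:
        raise ValueError("a cost reduction must lie in [0, 100) percent")
    return 1.0 / (1.0 - percent / 100.0)


# Assumption: the 124.5 figure is a cost ratio, not a percentage.
reduction = ratio_to_percent_reduction(124.5)
print(f"124.5x cheaper is a {reduction:.2f}% cost reduction")
```

Under that reading the saving is just over 99%, while passing 124.5 to `percent_reduction_to_ratio` raises a `ValueError`, since no percentage reduction of that size exists.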

Concerns raised over AI-generated fake citations in papers : A large number of LLM-generated fake citations have been found in papers submitted to ICLR 2026, even in high-quality papers, and these were not detected by reviewers. This phenomenon raises concerns about the integrity of the ML research community, highlights the potential destructive impact of AI tool misuse on academic institutions, and prompts calls for stricter citation checking mechanisms. (Source: Reddit r/MachineLearning)
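As a rough illustration of what an automated citation check could look like, the sketch below flags cited titles that have no close match in a list of known titles. It is a toy under stated assumptions: the local `known_titles` list stands in for a real bibliographic database, the 0.9 threshold is arbitrary, the function names are hypothetical, and the second cited title is deliberately made up for the example.

```python
from difflib import SequenceMatcher


def normalize(title: str) -> str:
    """Lowercase and collapse whitespace so trivial differences do not matter."""
    return " ".join(title.lower().split())


def best_match_score(title: str, known_titles: list[str]) -> float:
    """Return the highest similarity between a title and any known title."""
    target = normalize(title)
    scores = [
        SequenceMatcher(None, target, normalize(known)).ratio()
        for known in known_titles
    ]
    return max(scores, default=0.0)


def flag_unverified(cited_titles, known_titles, threshold=0.9):
    """Return cited titles with no sufficiently close match in the index."""
    return [
        title
        for title in cited_titles
        if best_match_score(title, known_titles) < threshold
    ]


# Stand-in for a real bibliographic index (assumption for this sketch).
known_titles = [
    "Attention Is All You Need",
    "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding",
]
cited_titles = [
    "attention is  all you need",  # real paper, different casing and spacing
    "Quantum Gradient Folding for Infinite-Context Transformers",  # invented
]

flagged = flag_unverified(cited_titles, known_titles)
print(flagged)
```

A flagged title is only a candidate for manual review, since a missing match may also mean the index is incomplete.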

🎯 Trends

OpenAI faces immense competitive pressure and strategic adjustments : After the release of Gemini 3, OpenAI’s traffic declined significantly. CEO Sam Altman declared a “code red,” pausing non-core businesses such as advertising and AI Agents and concentrating resources on the core ChatGPT experience, including personalization, image generation (to catch up with Nano Banana), user preferences, and response speed. This suggests that competition among large models has shifted from technical specifications to ecosystem integration. Google, with its extensive ecosystem (YouTube, Google Search, etc.), shows advantages in multimodal and Chinese language support, posing a severe challenge to OpenAI. (Source: 36氪)

Embodied AI robotics company Lumos Robotics secures hundreds of millions in financing : Lumos Robotics, a Tsinghua-affiliated embodied AI robotics company, completed Pre-A1 and Pre-A2 financing rounds totaling hundreds of millions of yuan, to be used for data and hardware investment. The company focuses on the R&D of embodied AI robots and core components. Its products include the FastUMI efficient data acquisition system (3x efficiency improvement, 1/5 cost reduction) and a high-performance modular robot platform. It has partnered with leading enterprises such as Mitsubishi (Japan) and COSCO Shipping, and aims to commercialize embodied AI in scenarios such as homes, logistics, and manufacturing. (Source: 36氪)

Importance of AI Agent environment expansion for model capabilities : Research emphasizes the importance of environment expansion for Agentic AI, proposing the Nex-N1 framework to enhance Agent capabilities by systematically expanding the diversity and complexity of interactive training environments. This framework performs excellently on models like DeepSeek-V3.1 and Qwen3-32B, even surpassing GPT-5 in tool use, indicating that Agent capabilities stem from interaction rather than imitation. (Source: omarsar0)

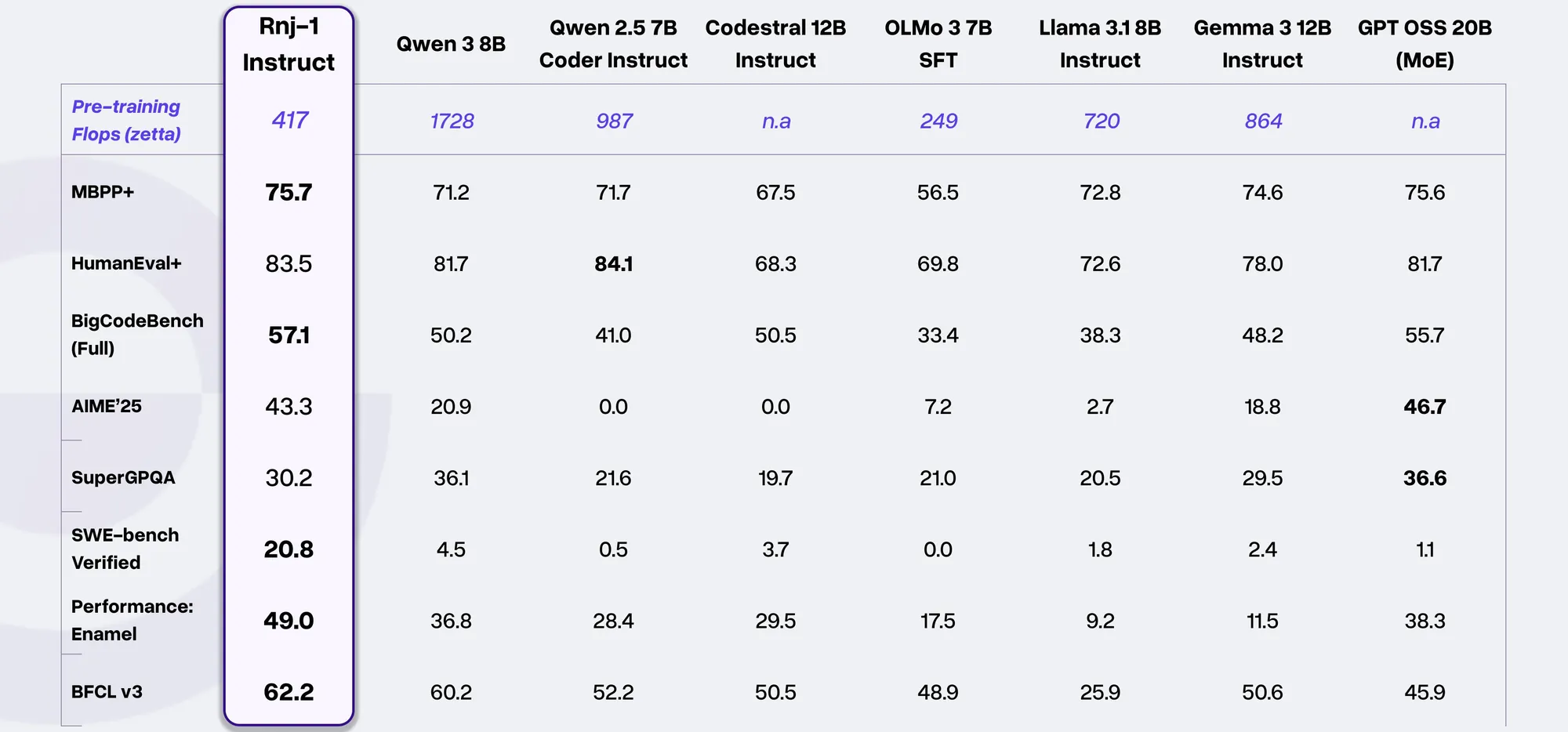

Essential AI releases Rnj-1 model : Essential AI released its first flagship model, Rnj-1 (8B parameters), which approaches GPT-4o on SWE-bench, surpasses comparable open-source models in tool use, and matches GPT OSS MoE 20B in mathematical reasoning. The release is intended to advance open-source AI and its equitable distribution. (Source: saranormous, scaling01, arohan, stanfordnlp, OfirPress, togethercompute, sbmaruf)

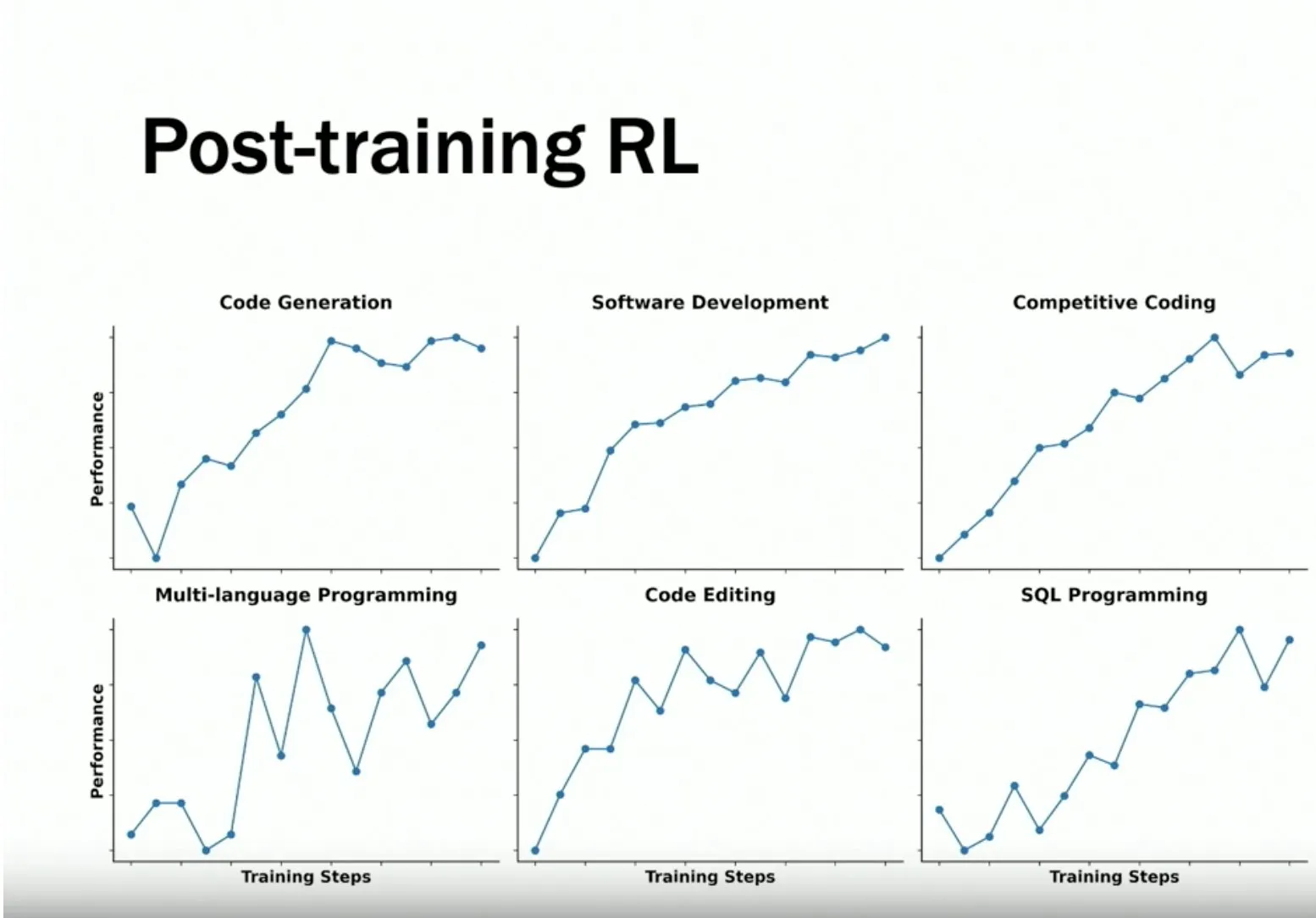

Qwen 3 Coder’s progress and future directions in AI coding : The Qwen 3 Coder team shared its progress in synthetic data, reinforcement learning, model scaling, and attention mechanisms. They found that Chain-of-Thought (CoT) poorly supports coding use cases and utilized Qwen 2.5 Coder to generate and clean synthetic data, conducting large-scale RL training via the MegaFlow scheduler. Future Qwen LLMs will adopt Gated Delta Attention and plan architectural innovations in long context, integrated search, computer vision integration, and long-duration task processing. (Source: bookwormengr, bookwormengr)

DeepSeek V3.2’s architectural updates and cost-effectiveness : Beyond its strong Cortex-AGI results, the substance of DeepSeek V3.2 lies in architectural updates rather than a simple model card refresh. This version includes improvements to the sparse MoE stack, RoPE indexer fixes, FP8 and KV stability, DSA-aligned GRPO, and the Math-V2 validator/meta-validator stack, which together yield significant cost-effectiveness. Its “disregard” for token efficiency is seen as a sign of its competitiveness. (Source: Dorialexander, teortaxesTex, teortaxesTex)

Progress in embodied AI and robotics technology : PHYBOT M1 demonstrates an aerial backflip, heralding the era of “superhuman” humanoid robots. FIFISH underwater robots are transforming shipyard hull inspections and improving efficiency. Hyundai plans to deploy tens of thousands of robots, including Atlas humanoids and Spot quadruped robots. These advances illustrate the pace of innovation as AI and robotics converge. In addition, ISS astronauts remotely operate robots for simulated planetary exploration, a sign that physical AI and robotics could trigger the next industrial revolution. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon, [teortaxesTex](https://