Keywords: AI industry, hype correction, GPT-5, AGI, AI safety, AI programming, AI agents, multimodal models, AI materials science, LLM reasoning optimization, embodied AI, AI benchmarking, AI-powered PPT generation

🔥 Spotlight

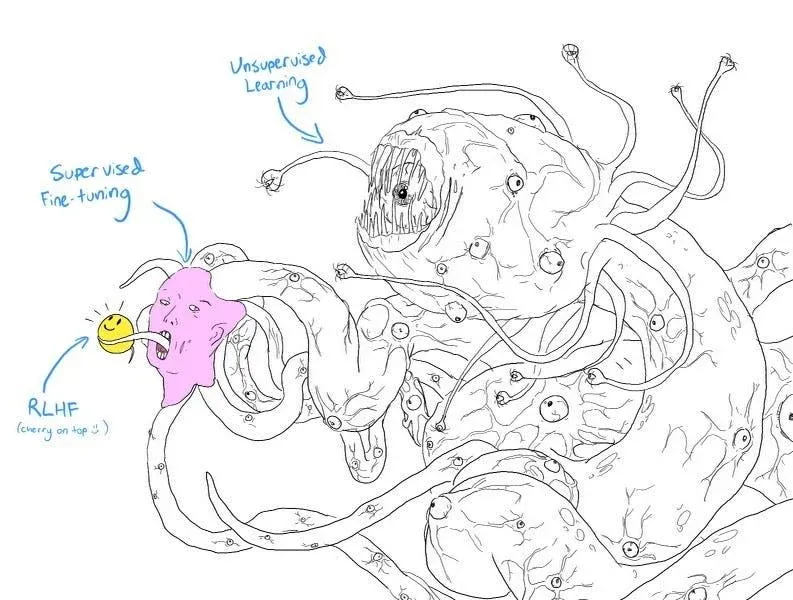

AI Industry “Hype Correction” and Reality Check: The AI industry is entering a “hype correction” phase in 2025, as market expectations shift from treating AI as a “panacea” to a more rational view. Industry leaders like Sam Altman acknowledge the existence of an AI bubble, particularly concerning startup valuations and massive investments in data center construction. Concurrently, the release of GPT-5 is perceived as having fallen short of expectations, sparking discussions about bottlenecks in LLM development. Experts are calling for a re-evaluation of AI’s true capabilities, distinguishing between the “impressive demonstrations” of generative AI and the actual breakthroughs of predictive AI in fields like healthcare and science. The emphasis is on reliability and sustainability as the source of AI’s value, rather than a blind pursuit of AGI. (Source: MIT Technology Review, MIT Technology Review, MIT Technology Review, MIT Technology Review, MIT Technology Review)

AI Breakthroughs and Challenges in Materials Science: AI is being applied to accelerate new material discovery, with AI agents planning, executing, and interpreting experiments, potentially shortening the discovery process from decades to a few years. Companies like Lila Sciences and Periodic Labs are building AI-driven automated labs to address bottlenecks in traditional materials science synthesis and testing. Although DeepMind once claimed to have discovered “millions of new materials,” the actual novelty and utility of those materials have been questioned, highlighting the gap between virtual simulations and physical reality. The industry is shifting from purely computational models to those combining experimental validation, aiming to discover groundbreaking materials like room-temperature superconductors. (Source: MIT Technology Review)

AI Coding Productivity Debate and Technical Debt: AI coding tools are gaining widespread adoption, with Microsoft and Google CEOs claiming AI has generated a quarter of their companies’ code, and Anthropic’s CEO predicting 90% of future code will be AI-written. However, actual productivity gains remain controversial, with some studies suggesting AI might slow down development and increase “technical debt” (e.g., decreased code quality, difficulty in maintenance). Nevertheless, AI excels at writing boilerplate code, testing, and bug fixing. New-generation agent tools like Claude Code significantly improve complex task processing capabilities through planning patterns and context management. The industry is exploring new paradigms like “disposable code” and formal verification to adapt to AI-driven development. (Source: MIT Technology Review)

AI Safety Advocates’ Persistence and Concerns Regarding AGI Risks: Despite the recent “hype correction” in AI development and GPT-5’s modest performance, AI safety advocates (“AI doomers”) remain deeply concerned about the potential risks of AGI (Artificial General Intelligence). They believe that while AI progress might slow, its fundamental dangers remain unchanged, and they express disappointment that policymakers have failed to adequately address AI risks. They emphasize that even if AGI is achieved in decades rather than years, resources must be immediately invested in solving the control problem, and they warn against the long-term negative consequences of the industry’s overinvestment in the AI bubble. (Source: MIT Technology Review)

🎯 Trends

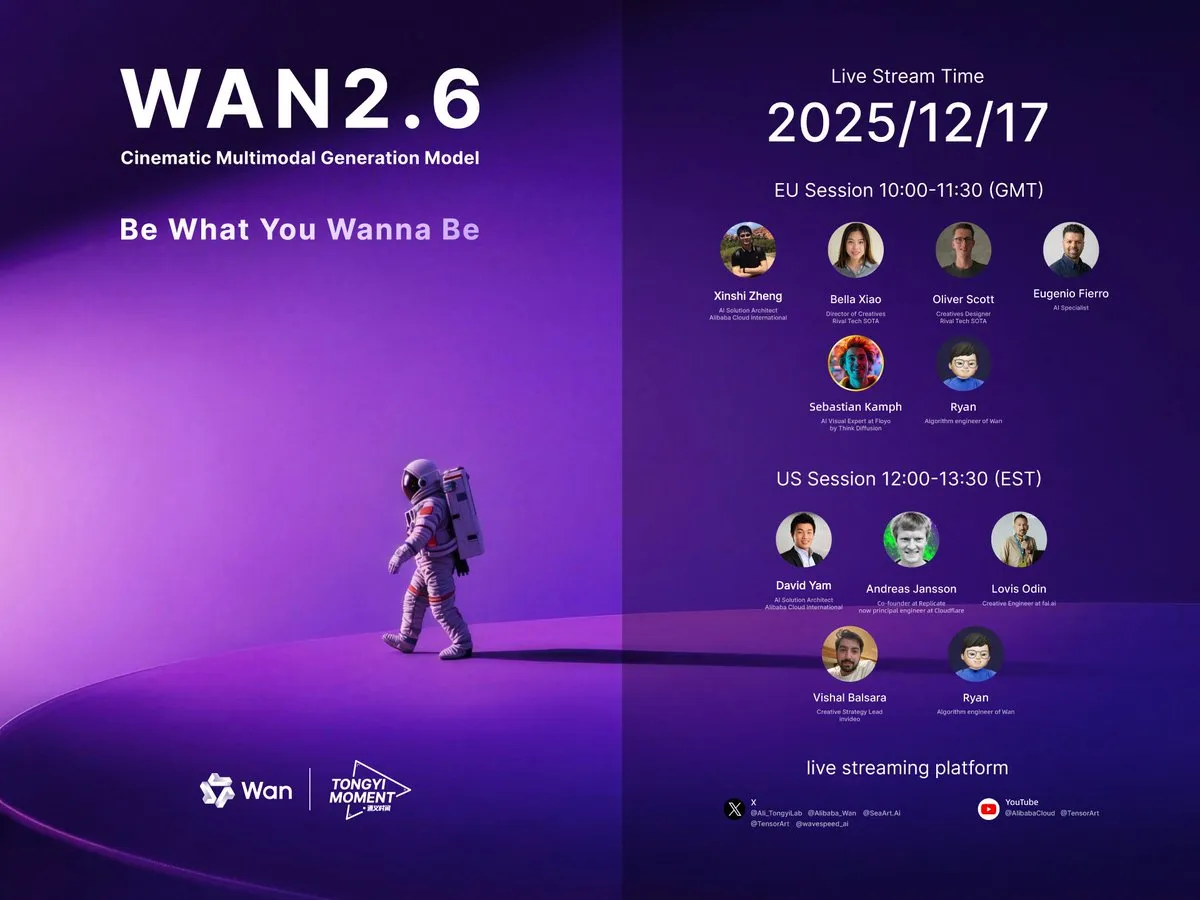

Continuous Breakthroughs in Multimodal Video Generation Models: Alibaba released the Wan 2.6 video model, which supports character role-playing, audio-visual synchronization, multi-shot generation, audio-driven generation, and single videos of up to 15 seconds; it has been hailed as a “smaller Sora 2.” ByteDance also launched Seedance 1.5 Pro, notable for its dialect support. LongVie 2, featured in HuggingFace Daily Papers, proposes a multimodal controllable ultra-long video world model, emphasizing controllability, long-term visual quality, and temporal consistency. These advancements mark significant improvements in the realism, interactivity, and application scenarios of video generation technology. (Source: Alibaba_Wan, op7418, op7418, HuggingFace Daily Papers)

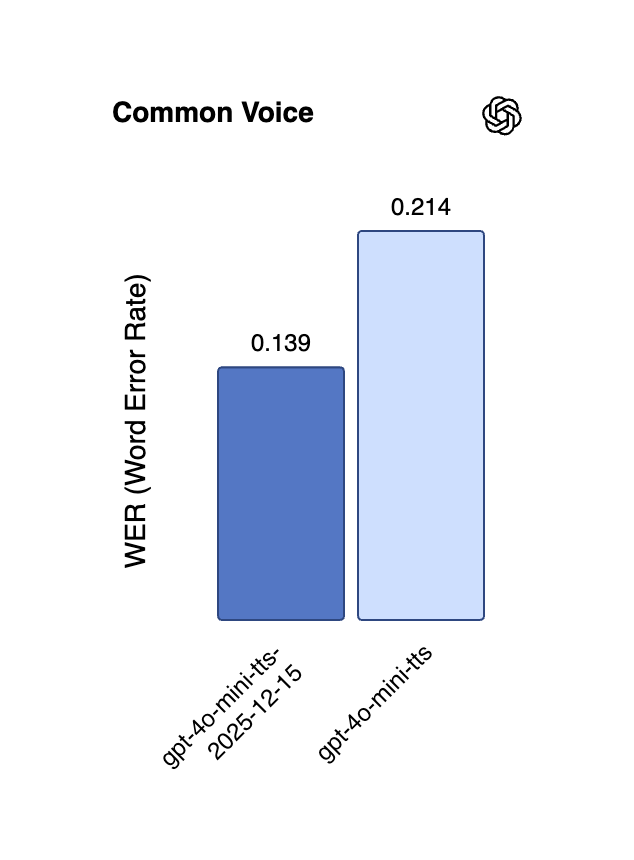

New Advances in Multilingual and Real-time Streaming AI Voice Technology: Alibaba open-sourced the CosyVoice 3 TTS model, supporting 9 languages and 18+ Chinese dialects, offering multilingual/cross-lingual zero-shot voice cloning, and achieving ultra-low latency bidirectional streaming at 150ms. OpenAI’s real-time API also updated its gpt-4o-mini-transcribe and gpt-4o-mini-tts models, significantly reducing hallucinations and error rates while improving multilingual performance. Google DeepMind’s Gemini 2.5 Flash Native Audio model also received updates, further optimizing instruction following and conversational naturalness, advancing the application of real-time voice agents. (Source: ImazAngel, Reddit r/LocalLLaMA, snsf, GoogleDeepMind)

Large Model Long-Context Reasoning and Efficiency Optimization: QwenLong-L1.5, through systematic post-training innovations, rivals GPT-5 and Gemini-2.5-Pro in long-context reasoning capabilities and excels in ultra-long tasks. GPT-5.2 also received positive user feedback for its long-context abilities, particularly for richer podcast summaries. Additionally, ReFusion proposes a new masked diffusion model that achieves significant performance and efficiency improvements through slot-level parallel decoding, with an average 18x speedup, narrowing the performance gap with autoregressive models. (Source: gdb, HuggingFace Daily Papers, HuggingFace Daily Papers, teortaxesTex)

Embodied AI and Robotics Technology Progress: AgiBot released the Lingxi X2 humanoid robot, possessing near-human mobility and versatile skills. Several studies in HuggingFace Daily Papers focus on embodied AI, such as “Toward Ambulatory Vision” exploring vision-grounded active viewpoint selection, “Spatial-Aware VLA Pretraining” achieving visual-physical alignment through human videos, and VLSA introducing a plug-and-play safety constraint layer to enhance VLA model safety. These studies aim to bridge the gap between 2D vision and actions in 3D physical environments, advancing robot learning and practical deployment. (Source: Ronald_vanLoon, HuggingFace Daily Papers, HuggingFace Daily Papers, HuggingFace Daily Papers)

NVIDIA and Meta’s Contributions to AI Architecture and Open-Source Models: NVIDIA released the Nemotron v3 Nano open model family and open-sourced its complete training stack (including RL infrastructure, environments, pre-training, and post-training datasets), aiming to foster the creation of specialized agentic AI across industries. Meta introduced the VL-JEPA Vision-Language Joint Embedding Predictive Architecture, the first non-generative model capable of efficiently performing general vision-language tasks in real-time streaming applications, outperforming large VLMs. (Source: ylecun, QuixiAI, halvarflake)

AI Benchmarking and Evaluation Method Innovations: Google Research launched the FACTS Leaderboard, comprehensively evaluating LLMs’ factuality across four dimensions: multimodality, parametric knowledge, search, and grounding, revealing trade-offs in coverage and contradiction rates among different models. The V-REX benchmark assesses VLMs’ exploratory visual reasoning capabilities through “chain of questions,” while START focuses on textual and spatial learning for chart comprehension. These new benchmarks aim to more accurately measure AI models’ performance in complex, real-world tasks. (Source: omarsar0, HuggingFace Daily Papers, HuggingFace Daily Papers)

Enhanced Autonomy of AI Agents in Web Environments: WebOperator proposes an action-aware tree search framework that enables LLM agents to perform reliable backtracking and strategic exploration in partially observable web environments. This method generates action candidates from multiple reasoning contexts and filters invalid actions, significantly improving success rates on WebArena tasks and highlighting the critical advantage of combining strategic foresight with safe execution. (Source: HuggingFace Daily Papers)

AI-Assisted Autonomous Driving and 4D World Models: DrivePI is a spatially-aware 4D MLLM that unifies understanding, perception, prediction, and planning for autonomous driving. By integrating point clouds, multi-view images, and language instructions, and generating text-occupancy and text-flow QA pairs, it achieves accurate prediction of 3D occupancy and occupancy flow, outperforming existing VLA and specialized VA models on benchmarks like nuScenes. GenieDrive focuses on physics-aware driving world models, improving prediction accuracy and video quality through 4D occupancy-guided video generation. (Source: HuggingFace Daily Papers, HuggingFace Daily Papers)

🧰 Tools

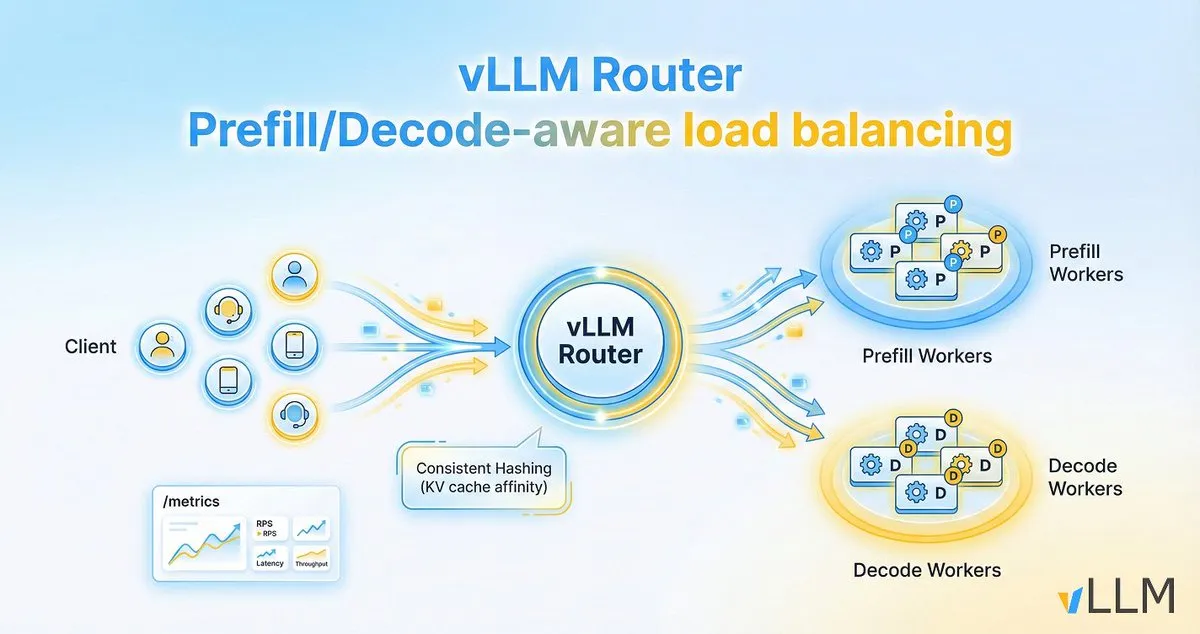

vLLM Router Boosts LLM Inference Efficiency: The vLLM project released vLLM Router, a lightweight, high-performance, prefill/decode-aware load balancer designed for vLLM clusters. Written in Rust, it supports strategies like consistent hashing and power-of-two choices, aiming to optimize KV cache locality and resolve bottlenecks from conversational traffic and prefill/decode separation, thereby improving LLM inference throughput and reducing tail latency. (Source: vllm_project)
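
The two balancing strategies named above can be illustrated with a minimal Python sketch. This is not vLLM Router's code (which is written in Rust); the class name, function names, worker labels, and the use of SHA-256 are assumptions made for illustration only. Consistent hashing pins a session to one worker so its KV cache stays warm, while power-of-two choices samples two workers and picks the less loaded one.

```python
import bisect
import hashlib
import random


def _hash(key: str) -> int:
    """Stable 64-bit hash so a key always maps to the same ring position."""
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")


class ConsistentHashRing:
    """Hypothetical ring: maps a session key to a worker via virtual nodes."""

    def __init__(self, workers, vnodes=64):
        # Each worker gets several points on the ring to even out the spread.
        self._ring = sorted(
            (_hash(f"{w}#{i}"), w) for w in workers for i in range(vnodes)
        )
        self._points = [p for p, _ in self._ring]

    def pick(self, session_id: str) -> str:
        # First ring point clockwise from the key's hash, wrapping around.
        idx = bisect.bisect(self._points, _hash(session_id)) % len(self._ring)
        return self._ring[idx][1]


def power_of_two_choices(load, rng=random):
    """Sample two distinct workers at random; return the less loaded one."""
    a, b = rng.sample(sorted(load), 2)
    return a if load[a] <= load[b] else b


ring = ConsistentHashRing(["worker-0", "worker-1", "worker-2"])
sticky = ring.pick("session-42")  # same session always lands on the same worker
least = power_of_two_choices({"worker-0": 7, "worker-1": 2, "worker-2": 5})
```

The ring favors cache locality for multi-turn conversations, whereas power-of-two choices favors even load; a real router would also have to account for prefill versus decode roles, which this sketch ignores.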

AI21 Maestro Simplifies AI Agent Construction: AI21Labs’ Vibe Agent, launched in AI21 Maestro, allows users to create AI agents using simple English descriptions. The tool automatically suggests agent use cases, validation checks, required tools, and model/compute settings, and explains each step in real-time, significantly lowering the barrier to building complex AI agents. (Source: AI21Labs)

OpenHands SDK Accelerates Agent-Driven Software Development: OpenHands released its software agent SDK, aiming to provide a fast, flexible, and production-ready framework for building agent-driven software. The SDK’s launch will help developers more efficiently integrate and manage AI agents to tackle complex software development tasks. (Source: gneubig)

Claude Code CLI Update Enhances Developer Experience: Anthropic released Claude Code version 2.0.70, featuring 13 CLI improvements. Key updates include: Enter key support for accepting prompt suggestions, wildcard syntax for MCP tool permissions, a plugin marketplace auto-update toggle, and forced plan mode. Additionally, memory usage efficiency is improved by 3x, and screenshot resolution is higher, all aimed at optimizing developer interaction and efficiency when using Claude Code for software development. (Source: Reddit r/ClaudeAI)

Qwen3-Coder Enables Rapid 2D Game Development: A Reddit user demonstrated how to use Alibaba’s Qwen3-Coder (480B) model to build a 2D Mario-style game in seconds using Cursor IDE. Starting from a single prompt, the model automatically plans steps, installs dependencies, and generates the code and project structure, producing a game that runs directly. Its running cost is low (approx. $2 per million tokens), and the experience is similar to GPT-4 agent mode, showcasing the potential of open-source models in code generation and agent tasks. (Source: Reddit r/artificial)

AI-Powered Stock Deep Research Tool: The Deep Research tool leverages AI to extract data from SEC filings and industry publications, generating standardized reports to simplify company comparison and screening. Users can input stock tickers for in-depth analysis. This tool aims to help investors conduct fundamental research more efficiently, avoiding market news distractions and focusing on substantive financial information. (Source: Reddit r/ChatGPT)

LangChain 1.2 Simplifies Agentic RAG Application Building: LangChain released version 1.2, which simplifies the use of built-in tools and strict mode, especially in the create_agent function. This makes it easier for developers to build Agentic RAG (Retrieval-Augmented Generation) applications, whether running locally or on Google Colab, and the release emphasizes that it is 100% open source. (Source: LangChainAI, hwchase17)

Skywork Launches AI-Powered PPT Generation Feature: The Skywork platform has launched PPT generation capabilities based on Nano Banana Pro, addressing the problem of traditional AI-generated PPTs being difficult to edit. The new feature supports layer separation, allowing users to modify text and images online, and can be exported as .pptx for local editing. Additionally, the tool integrates industry-specific professional databases, supports various chart generation to ensure data accuracy, and offers Christmas promotional discounts. (Source: op7418)

Small Models Empower Edge-side Infrastructure as Code: A Reddit user shared a 500MB “Infrastructure as Code” (IaC) model that can run on edge devices or in browsers. This model, compact yet powerful, focuses on IaC tasks, providing an efficient solution for deploying and managing infrastructure in resource-constrained environments, signaling the immense potential of small AI models in specific vertical applications. (Source: Reddit r/deeplearning)

📚 Learning

Chinarxiv: Automated Translation Platform for Chinese Preprints: Chinarxiv.org has officially launched as a fully automated translation platform for Chinese preprints, aiming to bridge the language gap between Chinese and Western scientific research. The platform translates not only text but also chart content, making it easier for Western researchers to access and understand the latest research findings from the Chinese scientific community. (Source: menhguin, andersonbcdefg, francoisfleuret)

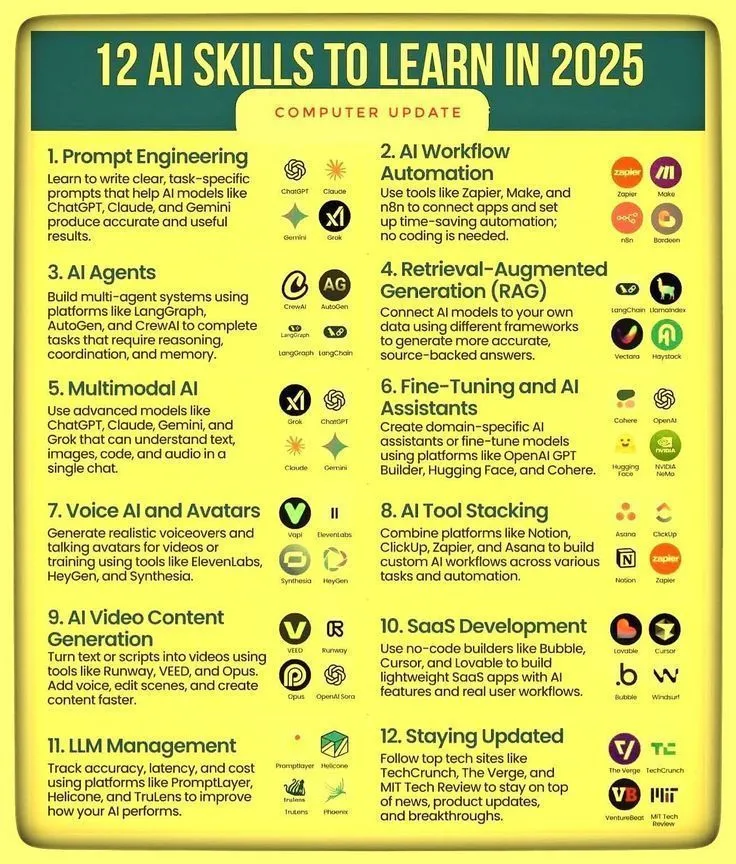

AI Skills and Agentic AI Learning Roadmaps: Ronald_vanLoon shared 12 key capabilities for mastering AI skills in 2025, along with a mastery roadmap for Agentic AI. These resources are designed to guide individuals in enhancing their competitiveness in the rapidly evolving AI field, covering learning paths from foundational AI knowledge to advanced agent system development, emphasizing the importance of continuous learning and adapting to new skills in the AI era. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

LLM Inference Process Optimization and Data-Driven AI Development: Research from Meta Superintelligence Labs shows that the PDR (Parallel-Distill-Refine) strategy, which drafts in parallel, distills the drafts into a compact workspace, and then refines, achieves the best task accuracy under inference constraints. Concurrently, a blog post argues that “data is the jagged frontier of AI,” attributing the success of coding and mathematics to data richness and verifiability while noting that science lags behind, and discusses the role of distillation and reinforcement learning in data generation. (Source: dair_ai, lvwerra)
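
The draft, distill, refine loop described above can be sketched in a few lines of Python. This is an illustrative reading of the summary, not the authors' implementation: the `Model` callable, the prompt wording, the parameter names, and the character-based workspace cap are all assumptions, and a real system would issue the draft calls concurrently rather than in a list comprehension.

```python
from typing import Callable

# Hypothetical model interface: takes a prompt string, returns a completion.
Model = Callable[[str], str]


def pdr(task: str, model: Model, n_drafts: int = 4, rounds: int = 2,
        workspace_chars: int = 500) -> str:
    """Draft several attempts, distill them to a bounded workspace, refine."""
    workspace = ""
    answer = ""
    for _ in range(rounds):
        # 1) Drafts: independent attempts that all see the same workspace.
        drafts = [model(f"Task: {task}\nNotes: {workspace}\nDraft {i}:")
                  for i in range(n_drafts)]
        # 2) Distill: compress the drafts into a compact, length-capped note.
        summary = model("Summarize the key points:\n" + "\n".join(drafts))
        workspace = summary[:workspace_chars]
        # 3) Refine: answer from the task and the workspace, not the raw drafts.
        answer = model(f"Task: {task}\nNotes: {workspace}\nFinal answer:")
    return answer
```

Because only the capped workspace is carried between rounds, the context seen by each call stays bounded no matter how many drafts are generated.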

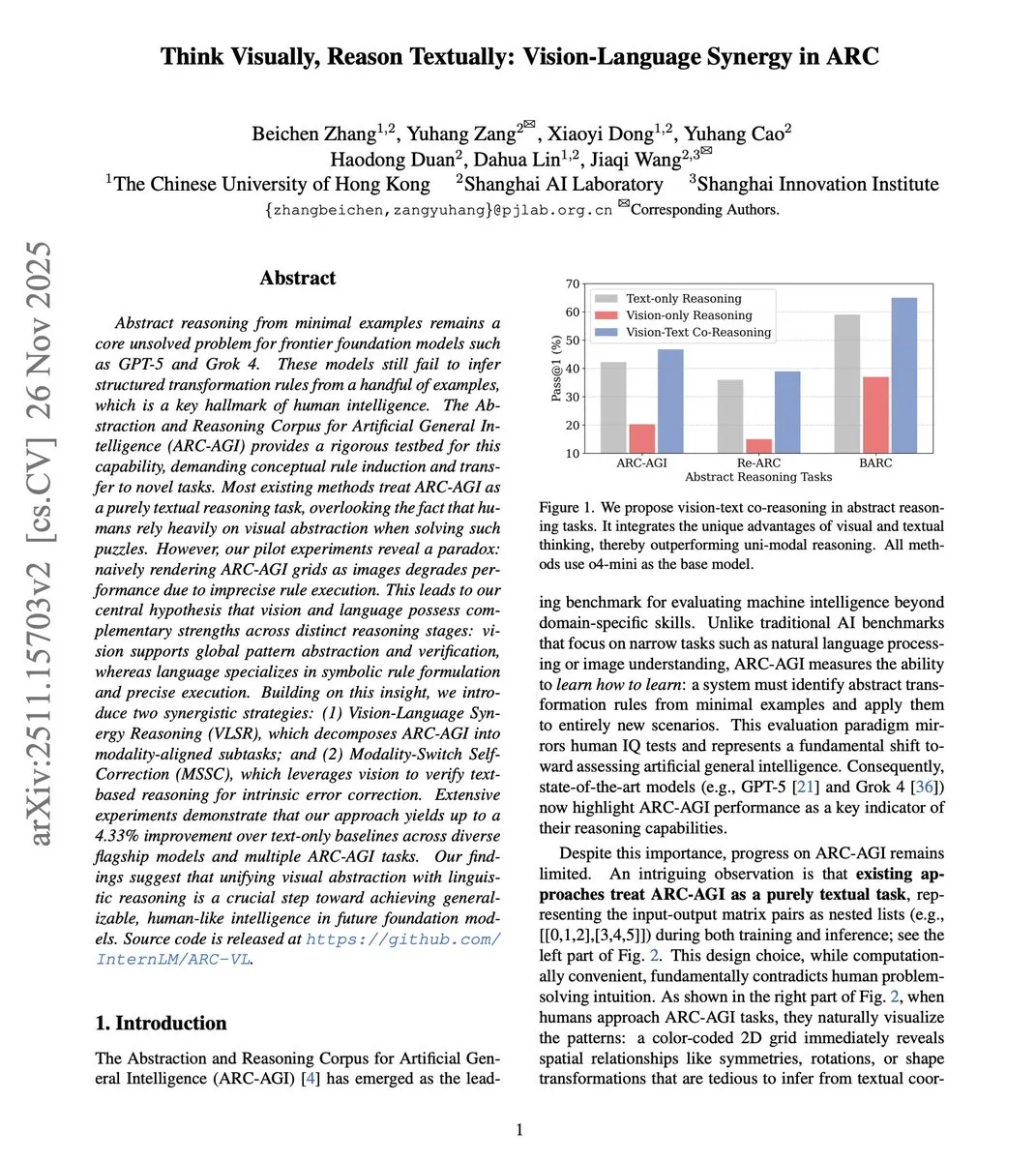

Vision-Language Synergy Reasoning Enhances Abstract Capabilities: New research proposes the Vision-Language Synergy Reasoning (VLSR) framework, which strategically combines visual and textual modalities at different reasoning stages, significantly improving LLM performance on abstract reasoning tasks (e.g., the ARC-AGI benchmark). The method uses vision for global pattern recognition and text for precise execution, and it counters confirmation bias through a modality-switching self-correction mechanism, even allowing smaller models to surpass GPT-4o’s performance. (Source: dair_ai)

New Perspective: LLM Reasoning Tokens as Computational States: The State over Tokens (SoT) conceptual framework redefines LLM reasoning tokens as externalized computational states rather than mere linguistic narratives. This explains how tokens can drive correct reasoning without being a faithful textual explanation of it, and it opens new research directions for understanding LLMs’ internal processes, emphasizing that research should move beyond reading the text at face value and focus on decoding reasoning tokens as states. (Source: HuggingFace Daily Papers)

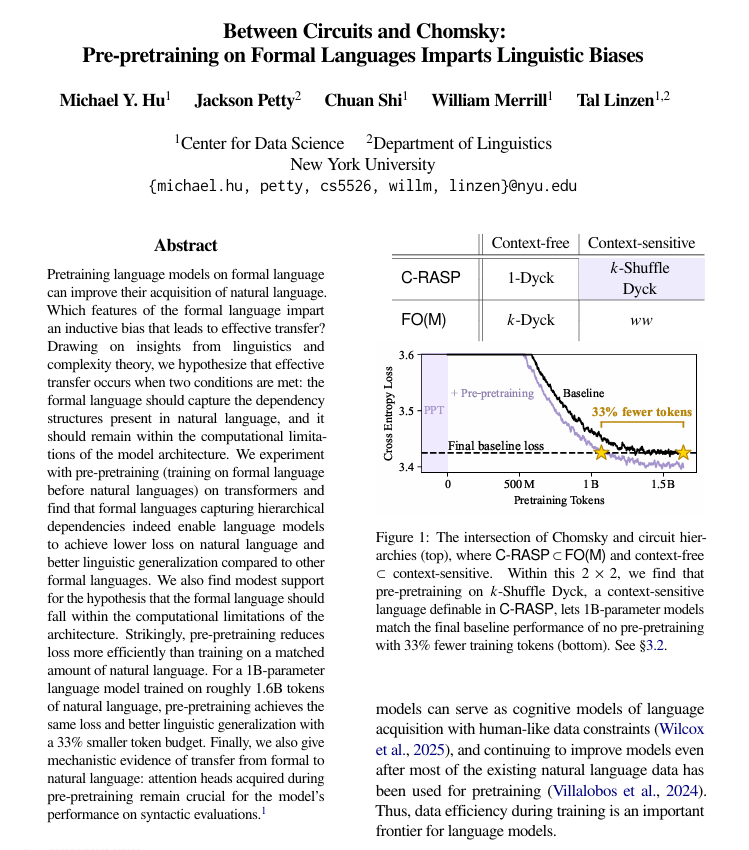

Formal Language Pre-training Enhances Natural Language Learning: Research from NYU found that pre-training with formal, rule-based languages before natural language pre-training can significantly help language models better learn human languages. The study indicates that such formal languages need to have similar structures (especially hierarchical relationships) to natural languages and be sufficiently simple. This method is more effective than adding an equivalent amount of natural language data, and the learned structural mechanisms transfer within the model. (Source: TheTuringPost)

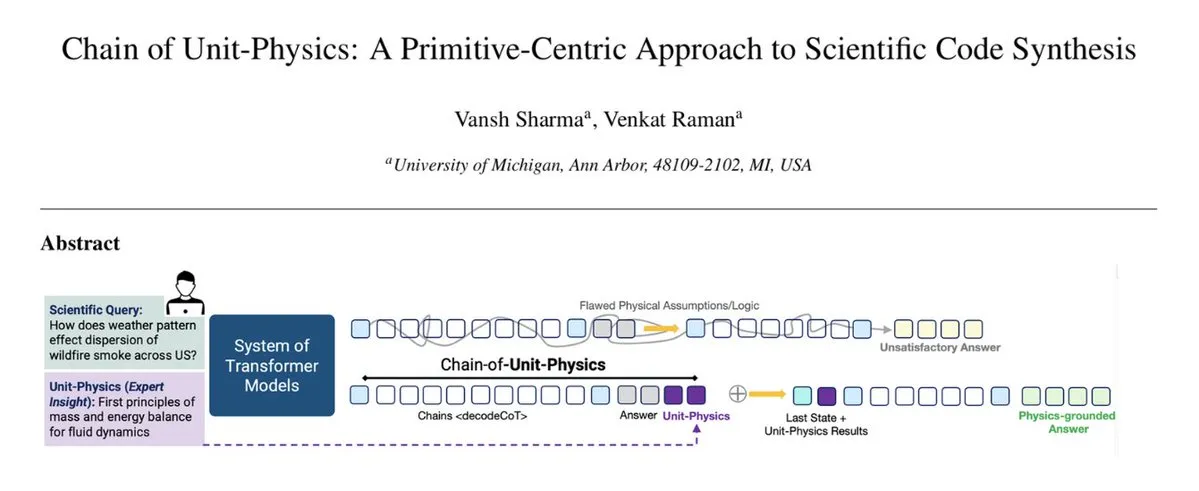

Physics Knowledge Injection into Code Generation Workflow: The Chain of Unit-Physics framework directly integrates physics knowledge into the code generation process. Researchers at the University of Michigan propose an inverse scientific code generation method that guides and constrains code generation by encoding human expert knowledge as unit physics tests. In a multi-agent setting, this framework enables the system to reach correct solutions in 5-6 iterations, with 33% faster execution, 30% less memory usage, and extremely low error rates. (Source: TheTuringPost)
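
To make the idea of “unit physics tests” concrete, here is a small Python sketch. It is an illustration only and is not taken from the Chain of Unit-Physics work: the ideal-gas routine, the function names, and the specific invariants are assumptions chosen because they are easy to verify. The point is that expert knowledge is written down as checks that any candidate (for example, model-generated) implementation must pass before it is accepted.

```python
import math


def ideal_gas_pressure(n_mol, temperature_k, volume_m3):
    """Candidate routine under test (stands in for generated code)."""
    gas_constant = 8.314462618  # J/(mol*K)
    return n_mol * gas_constant * temperature_k / volume_m3


def unit_physics_checks(fn):
    """Hypothetical expert-written invariants for a pressure routine."""
    p = fn(1.0, 300.0, 1.0)
    assert p > 0                                      # pressure is positive
    assert math.isclose(fn(1.0, 300.0, 2.0), p / 2)   # doubling V halves p
    assert math.isclose(fn(1.0, 600.0, 1.0), p * 2)   # doubling T doubles p
    assert math.isclose(fn(2.0, 300.0, 1.0), p * 2)   # doubling n doubles p
    return True


passed = unit_physics_checks(ideal_gas_pressure)
```

In an iterative agent loop, a failed assertion would be fed back to the generator as a constraint for the next attempt; that feedback step is not shown here.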

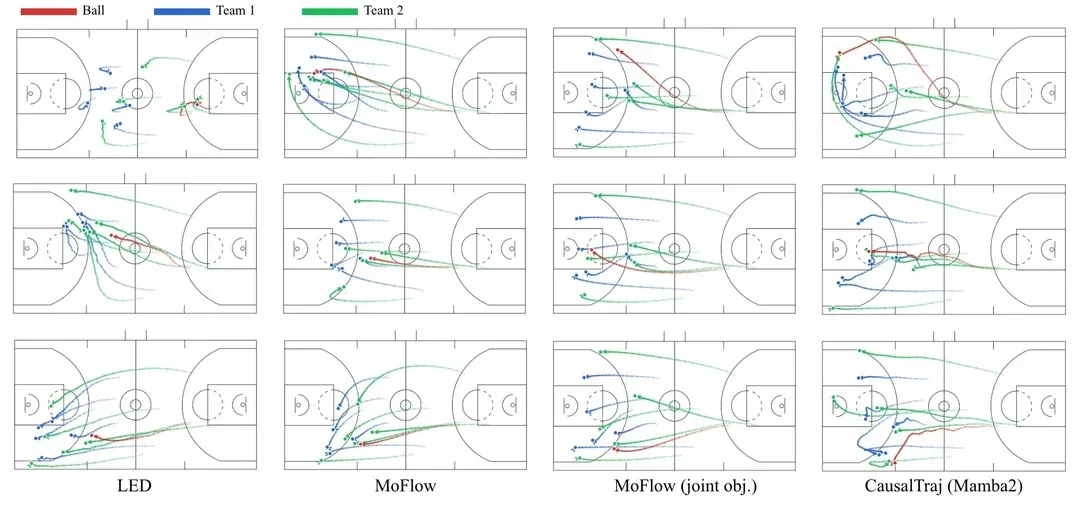

CausalTraj: Multi-Agent Trajectory Prediction Model for Sports Teams: CausalTraj is an autoregressive model for joint trajectory prediction of multiple agents in team sports. The model is trained directly with a joint prediction likelihood objective, rather than solely optimizing individual agent metrics, thereby significantly enhancing the coherence and plausibility of multi-agent trajectories while maintaining individual performance. The research also explores how to more effectively evaluate joint modeling and assess the realism of sampled trajectories. (Source: Reddit r/deeplearning)

LLM Training Data: Answers or Questions?: A discussion on Reddit suggests that most current LLM training datasets focus on “answers,” while a key part of human intelligence lies in the messy, ambiguous, and iterative process before “questions” are formed. Experiments show that models trained on conversational data that includes early thoughts, ambiguous questions, and iterative refinements perform better at clarifying user intent, handling unclear tasks, and avoiding erroneous conclusions, implying that training data needs to more comprehensively capture the complexity of human thought. (Source: Reddit r/MachineLearning)

💼 Business

OpenAI Acquires neptune.ai, Strengthens Frontier Research Tools: OpenAI announced a definitive agreement to acquire neptune.ai, a move aimed at strengthening its tools and infrastructure supporting frontier research. This acquisition will help OpenAI enhance its capabilities in AI development and experiment management, further accelerating model training and iteration processes, and solidifying its leading position in the AI domain. (Source: dl_weekly)

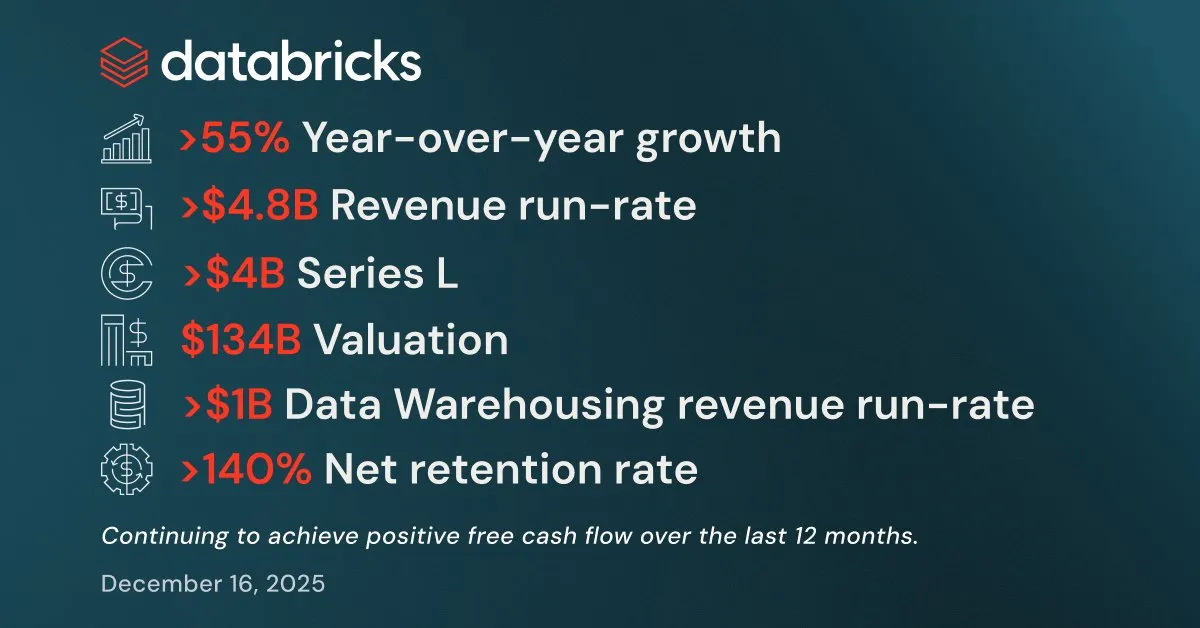

Databricks Reports Strong Q3 Performance, Secures Over $4 Billion in Funding: Databricks announced strong third-quarter results, with an annualized revenue run rate (ARR) exceeding $4.8 billion, representing over 55% year-over-year growth. Both its data warehousing and AI product businesses surpassed $1 billion in ARR. The company also completed a Series L funding round of over $4 billion, valuing it at $134 billion, with plans to invest in Lakebase Postgres, Agent Bricks, and Databricks Apps to accelerate the development of data intelligence applications. (Source: jefrankle, jefrankle)

Infosys Partners with Formula E to Drive AI-Powered Digital Transformation: Infosys has partnered with the ABB FIA Formula E World Championship to revolutionize motorsport fan experience and operational efficiency through an AI-driven platform. The collaboration includes leveraging AI to provide personalized content, real-time race analysis, AI-generated commentary, and optimizing logistics and travel to achieve carbon reduction goals. AI technology not only enhances the event’s appeal but also promotes sustainability and employee diversity, transforming Formula E into the most digital and sustainable motorsport. (Source: MIT Technology Review)

🌟 Community

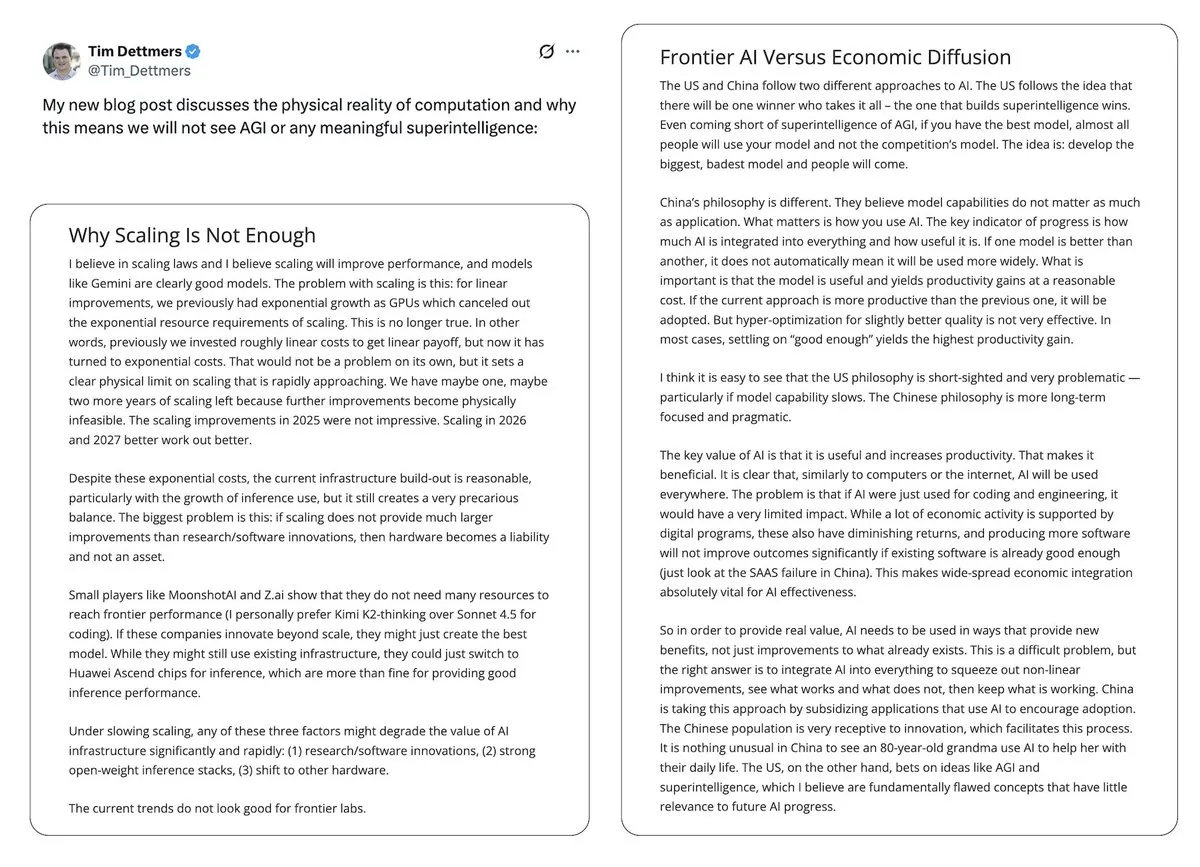

AI Bubble and AGI Prospects: The Controversy: Yann LeCun publicly dismissed LLMs and AGI as “complete nonsense,” arguing that the future of AI lies in world models rather than the current LLM paradigm, and expressed concern about AI technology being monopolized by a few companies. Tim Dettmers’ article “Why AGI Will Not Happen” also gained attention for its discussion on diminishing returns to scale. Meanwhile, AI safety advocates, while adjusting their timeline for AGI, still maintain its potential dangers and express concern over policymakers failing to adequately address AI risks. (Source: ylecun, ylecun, hardmaru, MIT Technology Review)

Polarized User Reviews for GPT-5.2 and Gemini: On social media, user reviews for OpenAI’s GPT-5.2 are mixed. Some users are satisfied with its long-context processing capabilities, finding its podcast summaries richer; however, others express strong dissatisfaction, deeming GPT-5.2’s answers too generic, lacking depth, and even exhibiting “self-aware” responses, leading some users to switch to Gemini. This polarization reflects user sensitivity to new model performance and behavior, as well as ongoing attention to the AI product experience. (Source: gdb, Reddit r/ArtificialInteligence)

AI’s Impact on Human Cognition, Work Standards, and Ethical Behavior: Prolonged use of AI is subtly changing human cognitive patterns and work standards, promoting more structured thinking and raising expectations for output quality. While AI makes high-quality content production efficient, it can also lead to over-reliance on technology. On the ethical front, varying AI model behaviors in the “trolley problem” (Grok’s utilitarianism vs. Gemini/ChatGPT’s altruism) spark discussions on AI value alignment. Concurrently, the “self-disciplining” nature of AI model safety prompts reveals the indirect impact of AI’s internal control mechanisms on user experience. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Reddit r/ChatGPT)

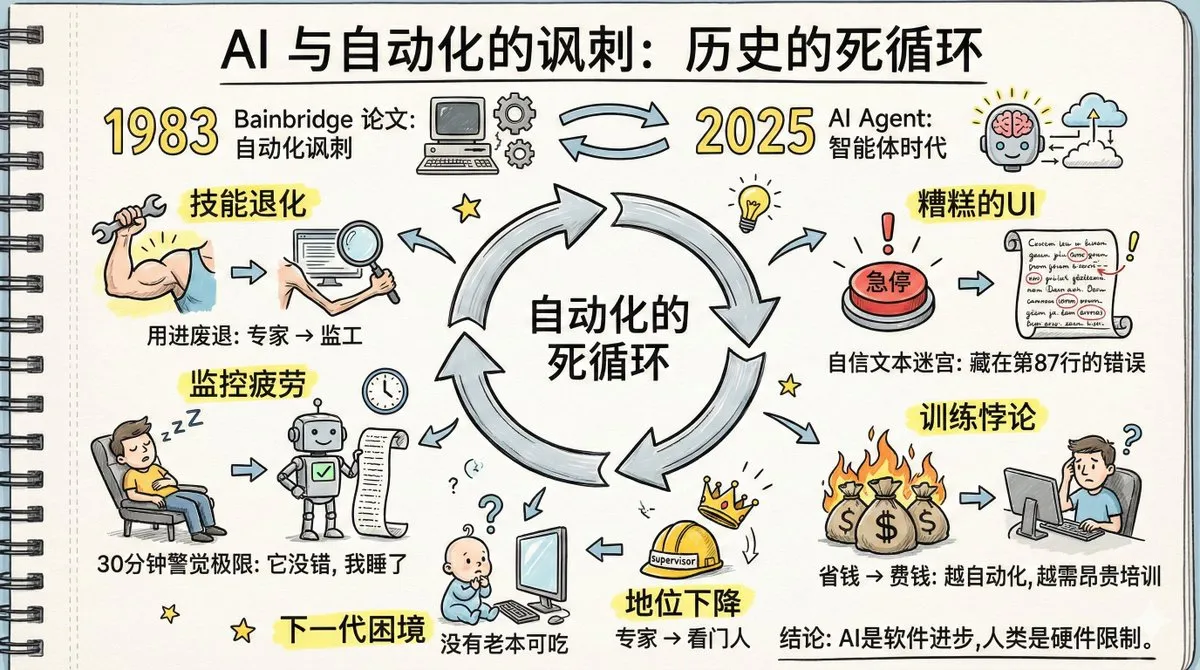

The “Ironies of Automation” with AI Agents and Human Skill Degradation: The paper “Ironies of Automation” has sparked heated discussion, as its forty-year-old predictions about factory automation are now coming true with AI Agents. The discussion points out that the widespread adoption of AI Agents could lead to human skill degradation, slower memory retrieval, monitoring fatigue, and diminished expert status. The article emphasizes that humans cannot remain vigilant for long periods when supervising systems that “rarely err,” and that current AI Agent interface design is not conducive to anomaly detection. Solving these issues requires greater technological creativity than automation itself, along with a cognitive shift towards new divisions of labor, new training, and new role designs. (Source: dotey, dotey, dotey)

AI-Generated Low-Quality Content and Factuality Challenges: Social media users are finding large numbers of low-quality websites created specifically to surface in AI search results, and they question AI-driven research’s reliance on such sites, which lack named authors and detailed information and may therefore spread unreliable information. This raises concerns about a “backfilling sources” mechanism, in which the AI generates an answer first and then looks for supporting sources, potentially citing false information. The discussion highlights AI’s challenges with information veracity and the need for users to return to independent research. (Source: Reddit r/ArtificialInteligence)

AI’s Impact on the Legal Profession and the Abstraction Layer Argument: The legal community is debating whether AI will “destroy” the legal profession. Some lawyers believe AI can handle over 90% of legal work, but strategy, negotiation, and accountability still require humans. Concurrently, another perspective views AI as the next abstraction layer after assembly, C, and Python, arguing that it will free engineers to focus on system design and user experience, rather than replacing humans. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence)

Physical Behavior of AI Agents and the LLM/Robotics Convergence Debate: New research suggests that LLM-driven AI agents may follow macroscopic physical laws, exhibiting “detailed balance” properties similar to thermodynamic systems, implying they might implicitly learn a “potential function” for evaluating states. Meanwhile, some argue that robot intelligence and LLMs are diverging rather than converging, while others believe physical AI is becoming real, with advancements in debugging and visualization accelerating robotics and embodied AI projects. (Source: omarsar0, Teknium, wandb)

Anthropic Executive Forces AI Chatbot on LGBTQ+ Discord Community, Sparks Controversy: An Anthropic executive forcibly deployed the company’s AI chatbot, Clawd, into an LGBTQ+ Discord community despite members’ objections, leading to a significant loss of community members. This incident raised concerns about AI’s impact on privacy, human interaction in communities, and AI companies’ “creating new gods” mentality. Users expressed strong dissatisfaction with AI chatbots replacing human communication and the executive’s arrogant behavior. (Source: Reddit r/artificial)

AI Models Vulnerable to Poetic Attacks, Call for Literary Talent in AI Labs: Italian AI researchers discovered that leading AI models can be fooled by transforming malicious prompts into poetic form, with Gemini 2.5 being the most susceptible. This phenomenon, dubbed the “Waluigi Effect,” suggests that in a compressed semantic space, good and evil roles are too close, making models more prone to executing instructions in reverse. This has sparked community discussion on whether AI labs need more literature graduates to understand narrative and deeper linguistic mechanisms to address AI’s potentially “strange narrative space” behaviors. (Source: Reddit r/ArtificialInteligence)

💡 Other

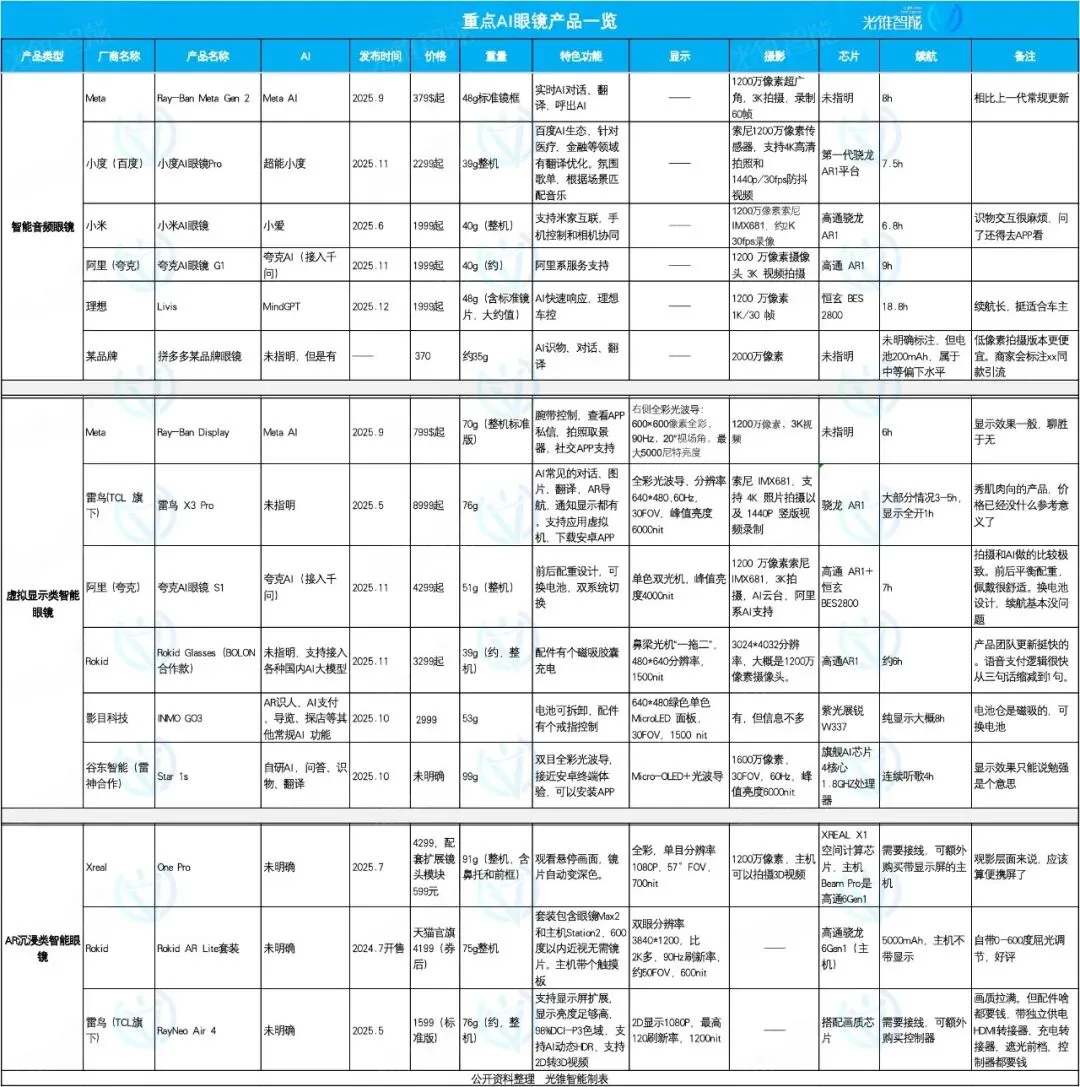

AI Glasses Market Focus and Diversification: Li Auto, Quark, Rokid Showcase Strengths: The AI glasses market is experiencing a boom in 2025, with product positioning showing a trend of diversification. Li Auto’s AI glasses, Livis, focus on smart automotive accessories, integrating the MindGPT large model to serve car owners; Quark AI Glasses S1 feature shooting performance and integration with Alibaba ecosystem apps; while Rokid Glasses emphasize an open ecosystem and rapid feature iteration, supporting access to multiple large language models. Smart audio glasses focus on low price and feature integration, virtual display glasses offer a comprehensive experience, and AR immersive glasses prioritize viewing entertainment. (Source: 36氪)

ZLUDA Enables CUDA on Non-NVIDIA GPUs, Compatible with AMD ROCm 7: The ZLUDA project enables CUDA programs to run on non-NVIDIA GPUs and now supports AMD ROCm 7. This development is significant for AI and high-performance computing because it weakens NVIDIA’s lock on the GPU ecosystem: developers can run programs written for CUDA on AMD hardware, gaining more flexibility in AI hardware selection and optimization. (Source: Reddit r/artificial)

hashcards: Plaintext-Based Spaced Repetition Learning System: hashcards is a plaintext-based spaced repetition learning system where all flashcards are stored as plain text files, supporting standard tool editing and version control. It uses a content-addressable approach, resetting progress if card content is modified. The system is minimalist, supporting only front/back and cloze deletion cards, and uses the FSRS algorithm to optimize review scheduling, aiming to maximize learning efficiency and minimize review time. (Source: GitHub Trending)
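
The content-addressable behavior described above (editing a card resets its progress) follows naturally if a card's identity is a hash of its text. The Python sketch below illustrates that idea under stated assumptions: the function names, the SHA-256 choice, the separator byte, and the review-count progress format are hypothetical and are not taken from hashcards itself.

```python
import hashlib


def card_id(front: str, back: str) -> str:
    """Identity derived purely from content: any edit yields a new ID."""
    payload = f"{front}\x00{back}".encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def sync_progress(cards, progress):
    """Keep review state only for cards whose content hash is unchanged.

    cards: list of (front, back) tuples parsed from the plain-text files.
    progress: dict mapping card ID to a review count (hypothetical state).
    """
    return {card_id(f, b): progress.get(card_id(f, b), 0) for f, b in cards}


original = card_id("Capital of France?", "Paris")
state = {original: 7}
unchanged = sync_progress([("Capital of France?", "Paris")], state)
edited = sync_progress([("Capital of France?", "Paris, France")], state)
```

Here `unchanged` keeps the review count of 7, while `edited` starts from 0 under a new ID, because the old hash no longer matches any card on disk.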

Zerobyte: Powerful Backup Automation Tool for Self-Hosters: Zerobyte is a backup automation tool designed for self-hosters, offering a modern web interface for scheduling, managing, and monitoring encrypted backups to remote storage. Built on Restic, it supports encryption, compression, and retention policies, and is compatible with various protocols like NFS, SMB, WebDAV, and local directories. Zerobyte also supports multiple cloud storage backends such as S3, GCS, Azure, and extends support to 40+ cloud storage services via rclone, ensuring data security and flexible backups. (Source: GitHub Trending)