Keywords: AI large models, open-source models, inference optimization, AI search, AI glasses, AI agents, AGI, a16z trillion-token report, Gemini 3 API, Doubao AI phone, Titans architecture, multimodal fusion

🎯 Trends

a16z Trillion-Token Report Reveals AI’s Great Divergence: OpenRouter and a16z jointly released a report based on 100 trillion tokens of usage data. It identifies three major AI trends for 2025. First, open-source models account for about 30% of traffic, and Chinese open-source models have risen to nearly 30% of the global share. Second, traffic to reasoning-optimized models has surged past 50%, shifting AI from “generating text” to “thinking through problems.” Third, programming and role-playing are the two dominant use cases. The report also introduces the “Cinderella Effect”: a model must first solve a specific pain point before it can retain users. It further notes that paid usage in Asia has doubled and that Chinese is now the second-largest language for AI interaction. (Source: source, source)

The Evolution and Controversy of AI Search: AI search is evolving from information distribution to service matching. AI-native search offerings such as Google’s Gemini 3 and Perplexity are redefining the search experience through conversational interaction, multimodal understanding, and task execution. As traditional search engines lose market share, AI search is becoming an underlying capability built into many applications. Elon Musk’s claim that AI will “kill search” reflects two things: the disruption of traditional search models and expectations of a future trillion-dollar service-matching market. The claim has also sparked debate about credible information sources and shifting marketing paradigms. (Source: source)

The “Battle of a Hundred Glasses” in AI Eyewear: Within two months, 20 AI eyewear products launched in the Chinese market. Giants such as Google, Alibaba, Huawei, and Meta entered the fray to capture the next-generation smart-interaction gateway. By integrating large-model capabilities, AI glasses offer real-time translation, scene recognition, and voice Q&A, with the ambition of replacing traditional glasses. However, product homogenization, battery life, comfort, and privacy and security remain challenges. The market is still searching for its “killer app” and a viable business model. (Source: source, source)

Doubao AI Phone and the Super App War: ByteDance’s Doubao AI phone, developed with ZTE, uses high-privilege agents to deliver system-level AI capabilities. This has sparked talk of a “war between Super Agents and Super Apps.” Users can perform complex operations such as cross-platform price comparison or ordering food delivery with a single voice command. However, platforms like WeChat quickly banned third-party automated operations. This shows that deploying system-level AI is not only a technical problem but also a matter of interest distribution and ecosystem coordination. Phone manufacturers, as relatively neutral players, may find it easier to promote an open ecosystem for AI phones. (Source: source, source)

Challenges of AI Deployment in the Physical World: There is broad industry agreement that AI is a “god” in the digital world but still an “infant” in the physical world. Leaders in the new energy vehicle sector note that teaching robots to walk is harder than teaching AI to write poetry. The physical world has no “undo button,” which drives up operation, maintenance, and legal costs. Future gains lie in embedding AI in physical devices such as cars and machine tools to achieve breakthroughs in “engineering content.” Meanwhile, with the text-data dividend exhausted, scaling is shifting toward “learning from video” to understand physical laws and causality. This shift brings enormous compute demands. (Source: source)

Google Gemini Free API Adjustments and Market Competition: Google’s sudden tightening of the Gemini API free-tier limits has angered developers, who believe the company is turning to monetization after collecting data. The move comes as OpenAI plans to release GPT-5.2 in response to Gemini 3, intensifying competition among large AI models. Google DeepMind CEO Demis Hassabis stressed that Google must hold the strongest position in AI. He expressed satisfaction with Gemini 3’s multimodal understanding, game creation, and front-end development capabilities, and he reiterated the importance of scaling laws. (Source: source)

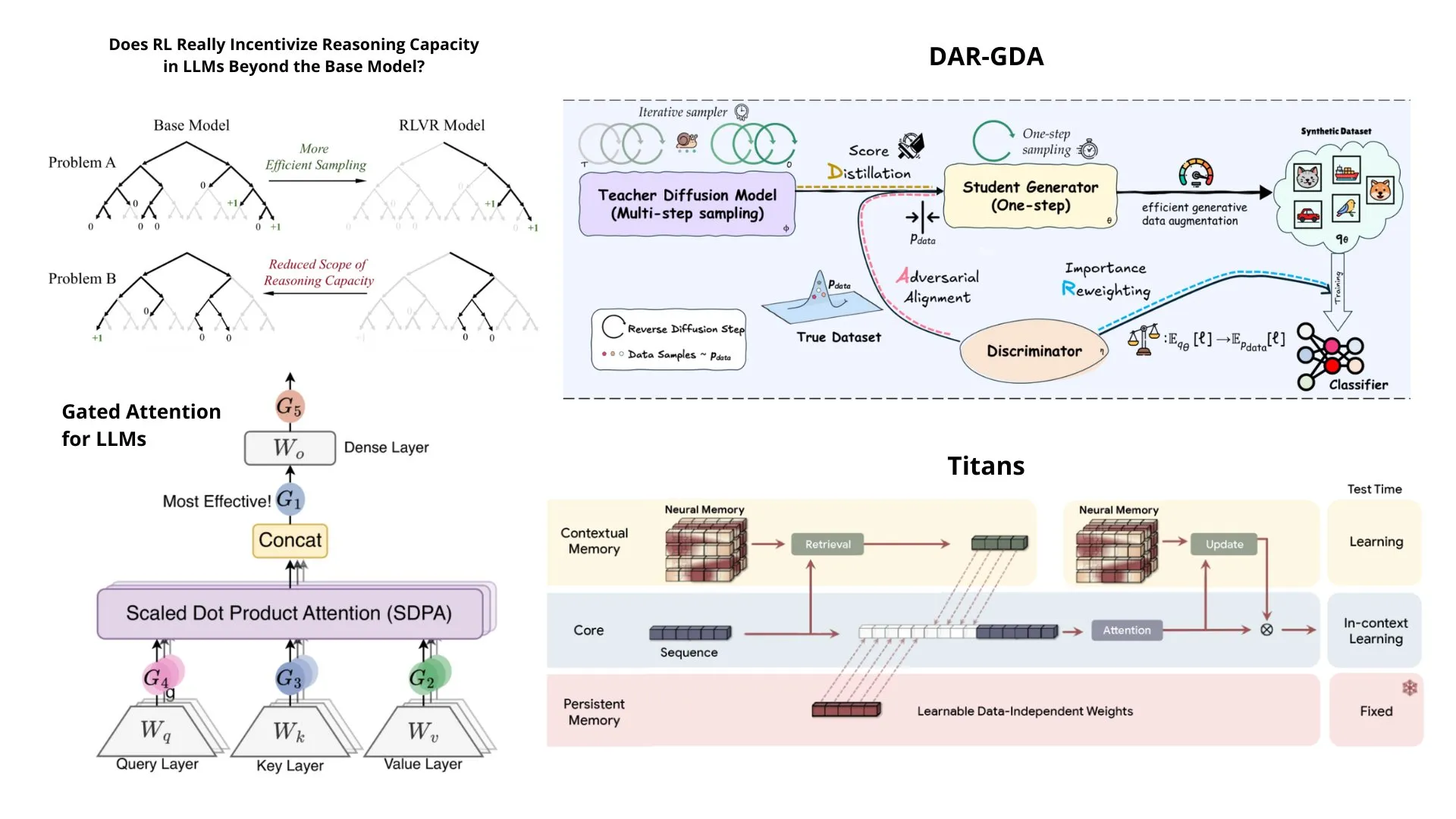

Google DeepMind’s Titans Architecture and AGI Outlook: Google DeepMind CEO Demis Hassabis predicts AGI will arrive within 5 to 10 years but will require one or two “Transformer-level” breakthroughs. At NeurIPS 2025, Google presented the Titans architecture together with the MIRAS theoretical framework. Titans aims to combine RNN speed with Transformer performance to tackle long-context problems. It uses a long-term memory module to compress historical data and updates that module’s parameters dynamically at inference time. Titans performs strongly on ultra-long-context tasks and is seen as a strong candidate successor to the Transformer. (Source: source, source)
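The test-time memory update in Titans can be pictured as gradient descent on an associative-memory loss. The update carries momentum (the “surprise” term) and a forgetting gate. The sketch below rests on several assumptions. It uses a linear memory matrix instead of the MLP memory described for Titans. It uses fixed scalar gates `eta`, `theta`, and `alpha`, which in the paper are data-dependent. The function names are hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Compute M @ x for a list-of-rows matrix."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in m]


def memory_loss(m: Matrix, k: Vector, v: Vector) -> float:
    """Associative-memory loss ||M k - v||^2: how badly M recalls v from key k."""
    return sum((p - t) ** 2 for p, t in zip(matvec(m, k), v))


def titans_style_update(m: Matrix, s: Matrix, k: Vector, v: Vector,
                        eta: float = 0.9, theta: float = 0.1,
                        alpha: float = 0.01) -> Tuple[Matrix, Matrix]:
    """One test-time step with simplified, hypothetical fixed gates:
    S_t = eta * S_{t-1} - theta * grad   (momentum over "surprise")
    M_t = (1 - alpha) * M_{t-1} + S_t    (forgetting gate, then write)
    """
    err = [p - t for p, t in zip(matvec(m, k), v)]
    # Gradient of ||M k - v||^2 with respect to M is 2 * err * k^T.
    grad = [[2.0 * e * kj for kj in k] for e in err]
    rows, cols = len(err), len(k)
    new_s = [[eta * s[i][j] - theta * grad[i][j] for j in range(cols)] for i in range(rows)]
    new_m = [[(1.0 - alpha) * m[i][j] + new_s[i][j] for j in range(cols)] for i in range(rows)]
    return new_m, new_s
```

Presenting the same key/value pair repeatedly drives `memory_loss` toward a small residual. The residual is not exactly zero because the forgetting gate keeps decaying old memory. This is the sense in which the memory “learns” during inference without touching the backbone’s weights.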

🧰 Tools

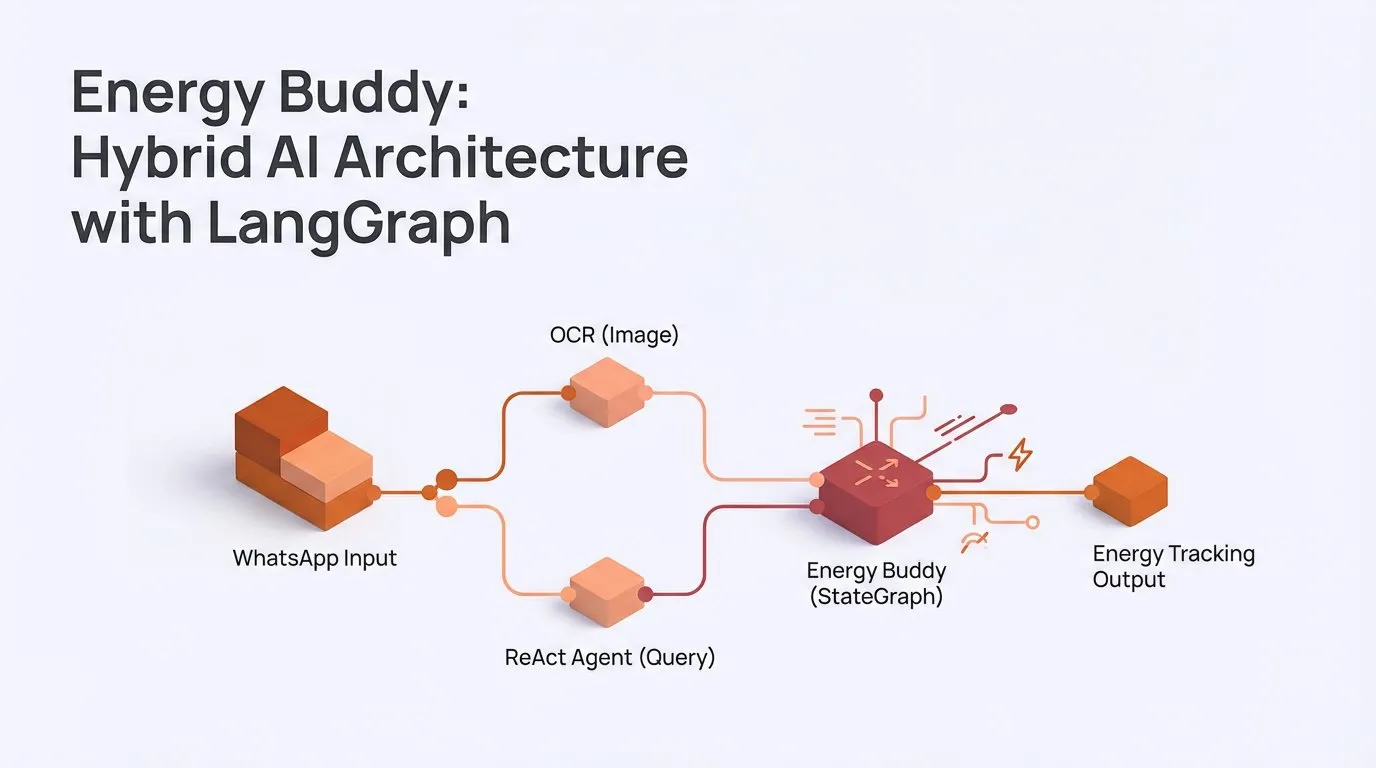

LangChainAI’s Hybrid AI Architecture and Multimodal Capabilities: The LangChain community released the “Energy Buddy” application, which uses a hybrid LangGraph architecture. Images are processed with deterministic OCR, and queries are handled by a ReAct agent, underscoring that not every task needs an agent. LangChain also offers tutorials showing how to build multimodal AI applications that process images, audio, and video with Gemini while simplifying complex API calls. (Source: source, source)
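The “not every task needs an agent” idea can be sketched as a router. The router runs a deterministic extraction step and escalates to an agent only when there is an open-ended question. This plain-Python sketch does not use LangGraph. It assumes OCR has already produced text. The `kWh` bill format, `extract_readings`, and the `agent` callable are hypothetical stand-ins for Energy Buddy’s OCR node and ReAct agent.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Request:
    image_text: Optional[str] = None  # text already produced by an OCR engine (assumed upstream)
    question: Optional[str] = None    # free-form user question, if any


# Hypothetical bill format: "Label: 123.4 kWh" pairs.
READING = re.compile(r"(\w+):\s*(\d+(?:\.\d+)?)\s*kWh", re.IGNORECASE)


def extract_readings(ocr_text: str) -> Dict[str, float]:
    """Deterministic path: no model call, same input always gives the same output."""
    return {label.lower(): float(value) for label, value in READING.findall(ocr_text)}


def route(req: Request, agent: Callable[[str], str]) -> Dict[str, object]:
    """Use the cheap deterministic path where it suffices; invoke the agent only for questions."""
    result: Dict[str, object] = {}
    if req.image_text:
        result["readings"] = extract_readings(req.image_text)
    if req.question:
        result["answer"] = agent(req.question)
    return result
```

With this design, structured extraction stays cheap, fast, and testable, and the agent’s cost and nondeterminism are paid only when a user asks something open-ended.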

Multi-AI Prompt Tool Yupp AI: Yupp AI lets users query multiple AI models (such as ChatGPT, Gemini, Claude, Grok, and DeepSeek) simultaneously in a single tab. Its “Help Me Choose” feature has the models cross-check one another’s work. The free tool aims to simplify and speed up multi-AI workflows and improve efficiency on complex tasks. (Source: source)
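Querying several models at once and cross-checking their answers can be sketched with `asyncio.gather`. This is a hypothetical illustration, not Yupp’s implementation. The model callables are stubs, and “cross-checking” is reduced to measuring agreement on normalized answers.

```python
import asyncio
from collections import Counter
from typing import Awaitable, Callable, Dict

Model = Callable[[str], Awaitable[str]]


async def fan_out(prompt: str, models: Dict[str, Model]) -> Dict[str, str]:
    """Send the same prompt to every model concurrently."""
    names = list(models)
    answers = await asyncio.gather(*(models[n](prompt) for n in names))
    return dict(zip(names, answers))


def cross_check(answers: Dict[str, str]) -> Dict[str, object]:
    """Report the most common normalized answer and which models disagree with it."""
    normalized = {name: ans.strip().lower() for name, ans in answers.items()}
    consensus, votes = Counter(normalized.values()).most_common(1)[0]
    dissenters = sorted(n for n, a in normalized.items() if a != consensus)
    return {"consensus": consensus, "agreement": votes / len(normalized), "dissenters": dissenters}


def make_stub(reply: str) -> Model:
    """Hypothetical stand-in for a real model client."""
    async def model(prompt: str) -> str:
        await asyncio.sleep(0)  # stands in for a network call
        return reply
    return model
```

Exact-match voting only suits short factual answers. For longer outputs, a real cross-check would need one model to grade another’s answer instead.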

Agent Memory System Cass Tool: doodlestein is building an agent memory system on top of their Cass tool, using multiple AI agents such as Claude Code and Gemini 3 for planning and code generation. Cass aims to provide a high-performance CLI that integrates with coding agents. It updates agent memory by logging sessions, distilling preferences, and incorporating feedback, enabling more effective context engineering. (Source: source)
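The log, distill, and feedback loop described for Cass could look roughly like the sketch below. This is a hypothetical sketch, not Cass’s actual design or CLI. Sessions are appended as records, and preferences are “distilled” by counting user corrections that recur across sessions. Only preferences seen in at least `min_support` sessions reach the next agent’s context.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class Session:
    agent: str
    notes: List[str]
    corrections: List[str] = field(default_factory=list)  # user feedback, e.g. "use pytest"


@dataclass
class AgentMemory:
    sessions: List[Session] = field(default_factory=list)
    min_support: int = 2  # number of sessions a preference must appear in before it is trusted

    def log(self, session: Session) -> None:
        self.sessions.append(session)

    def distill_preferences(self) -> List[str]:
        """Count each correction once per session; keep those that recur across sessions."""
        counts = Counter(
            c for s in self.sessions for c in {x.strip().lower() for x in s.corrections}
        )
        return sorted(p for p, n in counts.items() if n >= self.min_support)

    def context_block(self) -> str:
        """Text to prepend to the next coding agent's prompt (empty if nothing is trusted yet)."""
        prefs = self.distill_preferences()
        if not prefs:
            return ""
        return "Known user preferences:\n" + "\n".join(f"- {p}" for p in prefs)
```

Requiring a preference to recur before it is injected keeps one-off remarks from polluting every future session’s context.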

LlamaCloud’s Document Agents: LlamaCloud launched an “Intelligent Document Processing” solution that lets users build and deploy specialized document agents in seconds and customize their workflows in code. The platform offers example agents for invoice processing and contract matching. LlamaCloud claims the solution is more accurate and customizable than existing IDP solutions and aims to simplify document processing through coding agents. (Source: source)
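Invoice-to-contract matching, one of the example agents mentioned, typically ends in a deterministic check once fields have been extracted. The following is a minimal sketch, assuming an upstream extraction step has already produced structured fields. The field names and the 2% tolerance are hypothetical, and none of this is LlamaCloud’s API.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Invoice:
    vendor: str
    contract_id: str
    amount: float


@dataclass
class Contract:
    contract_id: str
    vendor: str
    max_amount: float


def match_invoice(inv: Invoice, contracts: Dict[str, Contract],
                  tolerance: float = 0.02) -> List[str]:
    """Return a list of problems; an empty list means the invoice matches its contract."""
    contract = contracts.get(inv.contract_id)
    if contract is None:
        return [f"unknown contract {inv.contract_id}"]
    issues = []
    if contract.vendor.lower() != inv.vendor.lower():
        issues.append("vendor mismatch")
    # Allow a small overrun (hypothetical 2%) before flagging the amount.
    if inv.amount > contract.max_amount * (1 + tolerance):
        issues.append("amount exceeds contract limit")
    return issues
```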

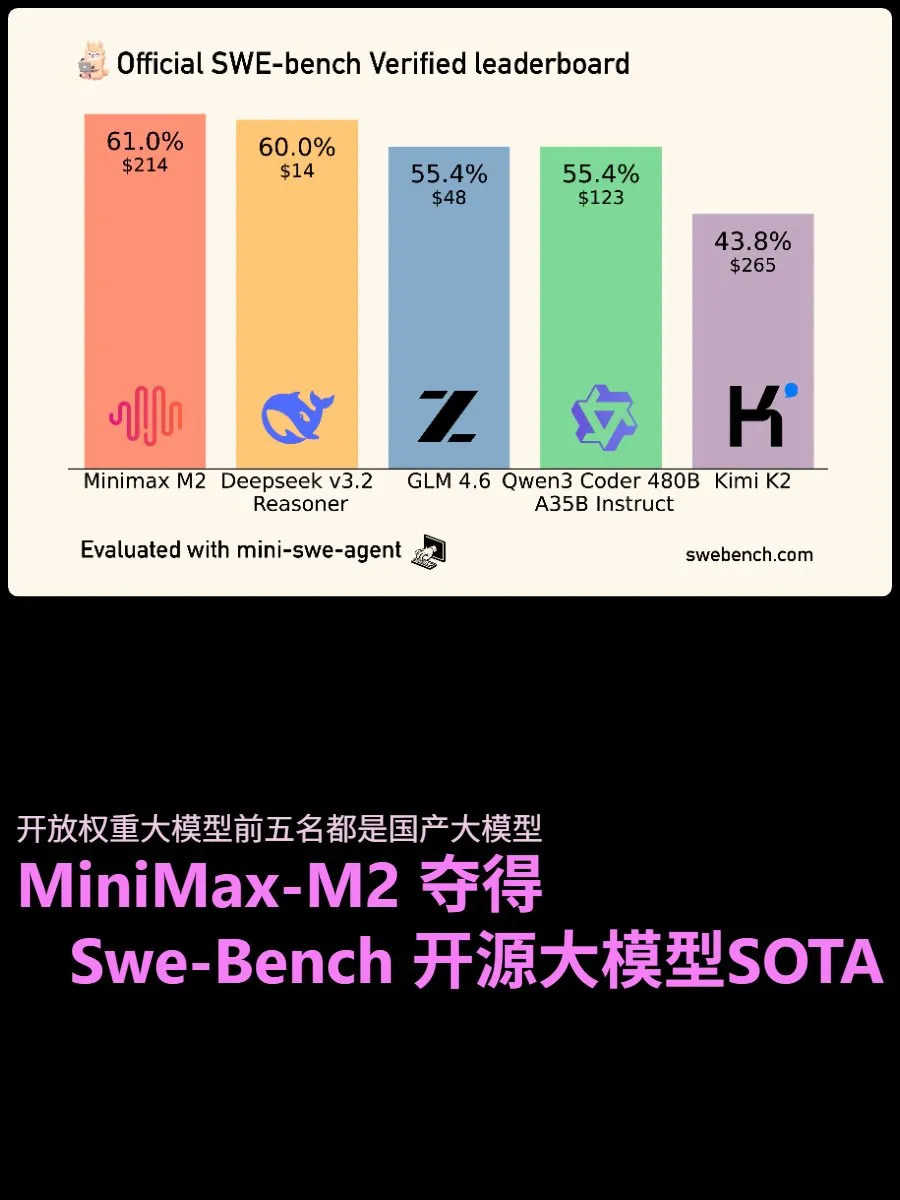

SWE-Bench Code Test Results: MiniMax-M2 achieved the highest score among open-weight models on SWE-bench Verified, demonstrating strong agent capabilities and stability on long tasks. The DeepSeek V3.2 reasoning variant followed closely and drew attention for its strong cost-performance ratio. GLM 4.6 showed balanced performance: fast, low-cost, and effective, earning it the title “king of cost-performance.” The results indicate that open-source models are rapidly catching up with commercial models in code generation. (Source: source)

Multi-Agent Orchestration Tools: Social media discussions highlight that multi-agent orchestration is the future of AI coding, emphasizing the importance of intelligent context management. Open-source tools like CodeMachine CLI, BMAD Method, Claude Flow, and Swarms are recommended for coordinating multi-agent workflows, structured planning, and automated deployment. These tools aim to address the limitations of single AI sessions in handling complex software development, enhancing AI’s reliability in real-world projects. (Source: source)
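The common pattern behind these tools is a planner that splits work into dependent tasks, with each worker agent receiving only the context it needs. It can be sketched as follows. The task format, the `worker` callable, and the rule that trims context to listed files are hypothetical. None of the named tools is being reproduced.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    name: str
    files: List[str]       # files this task's agent is allowed to see
    depends_on: List[str]  # tasks whose results it needs


def topo_order(tasks: List[Task]) -> List[Task]:
    """Order tasks so each runs after its dependencies; raise on cycles."""
    by_name = {t.name: t for t in tasks}
    done, visiting, order = set(), set(), []

    def visit(t: Task) -> None:
        if t.name in done:
            return
        if t.name in visiting:
            raise ValueError(f"cycle at {t.name}")
        visiting.add(t.name)
        for dep in t.depends_on:
            visit(by_name[dep])
        visiting.discard(t.name)
        done.add(t.name)
        order.append(t)

    for t in tasks:
        visit(t)
    return order


def orchestrate(tasks: List[Task], repo: Dict[str, str],
                worker: Callable[[Task, Dict[str, str], Dict[str, str]], str]) -> Dict[str, str]:
    """Run workers in dependency order, giving each only its files plus upstream results."""
    results: Dict[str, str] = {}
    for task in topo_order(tasks):
        context = {f: repo[f] for f in task.files}           # trimmed context window
        upstream = {d: results[d] for d in task.depends_on}  # outputs of earlier agents
        results[task.name] = worker(task, context, upstream)
    return results
```

Passing only task-relevant files and upstream summaries is one simple form of the “intelligent context management” these discussions emphasize.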

Local LLM Hallucination Management System: A developer shared a synthetic “nervous system” for managing hallucinations in local LLMs by tracking “somatic” states (e.g., dopamine levels and emotional vectors). In high-risk, low-dopamine states, the system triggers defensive sampling (self-consistency and abstention). This reduced hallucination rates, but the system is currently overly conservative and abstains even on answerable questions. The project explores improving AI safety at inference time through a control layer rather than through model weights. (Source: source)
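Defensive sampling can be sketched as a gate. When an internal risk signal is high, the system switches from a single sample to self-consistency voting and abstains if the samples disagree. The scalar `risk` score stands in for the developer’s “somatic” state. The thresholds are hypothetical, and `sample` would wrap a local LLM call.

```python
from collections import Counter
from typing import Callable, Optional

ABSTAIN = None


def answer(question: str, sample: Callable[[str], str], risk: float,
           risk_threshold: float = 0.6, n_samples: int = 5,
           min_agreement: float = 0.6) -> Optional[str]:
    """Single sample in low-risk states; self-consistency vote with abstention otherwise."""
    if risk < risk_threshold:
        return sample(question)
    votes = Counter(sample(question).strip() for _ in range(n_samples))
    best, count = votes.most_common(1)[0]
    # Abstain when the majority answer is not supported by enough samples.
    return best if count / n_samples >= min_agreement else ABSTAIN
```

Raising `min_agreement` trades hallucinations for abstentions. Too high a value reproduces the over-conservatism described above, where answerable questions are refused.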

📚 Learning

Paper Trails, a “Goodreads” for Academic Papers: Anuja developed Paper Trails, a Goodreads-style platform for managing papers, blogs, Substack posts, and other research resources. It aims to make academic reading more enjoyable and personal for researchers. (Source: source, source)

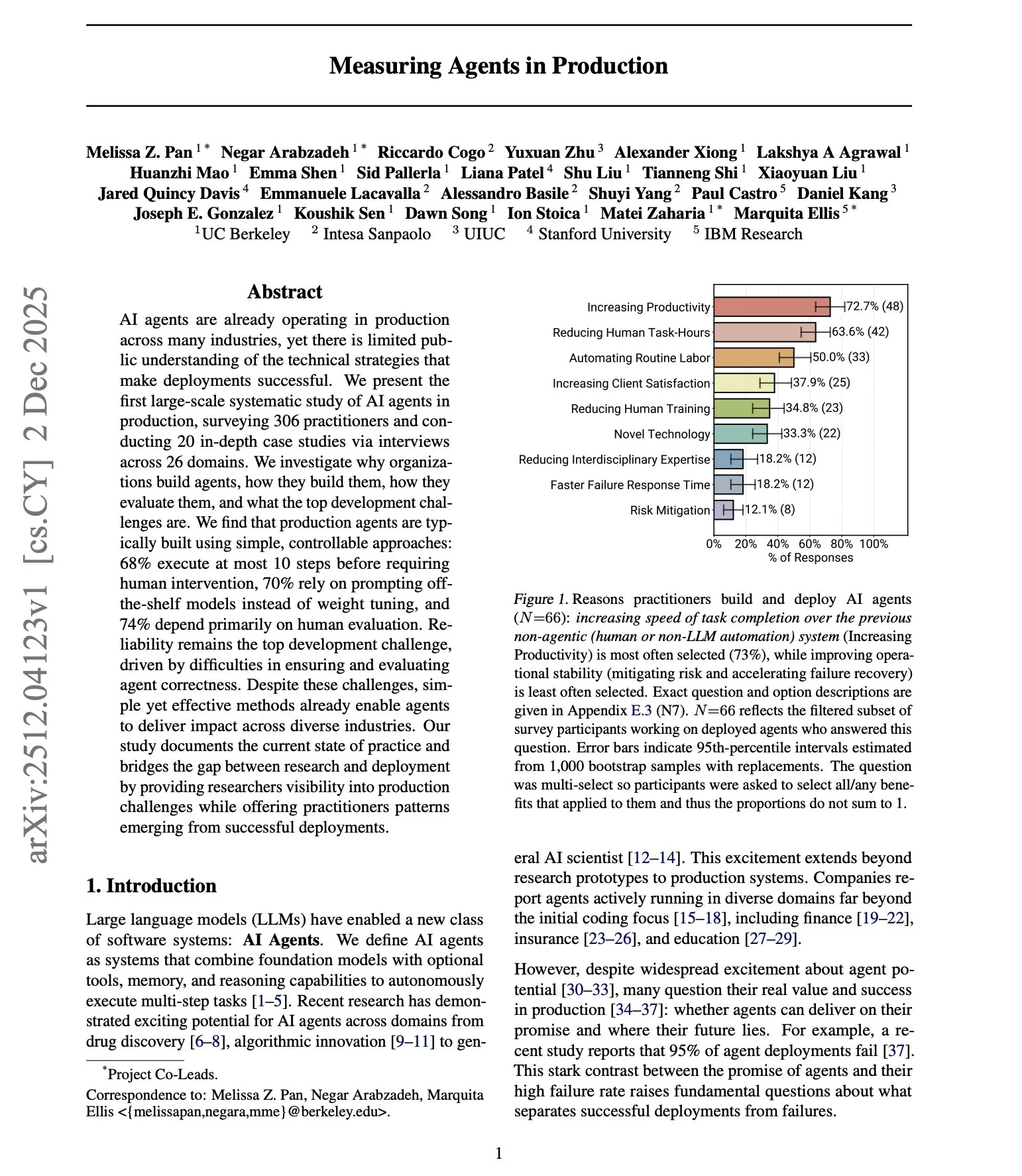

Research on AI Agent Production Deployment: DAIR.AI published a large-scale study of AI agents running in production. It found that production-grade agents tend to be simple and tightly constrained, rely mainly on off-the-shelf models rather than fine-tuning, and are evaluated primarily by humans. The study challenges common assumptions about agent autonomy and identifies reliability as the biggest remaining challenge. It also notes that most production teams prefer to build custom implementations from scratch rather than rely on third-party frameworks. (Source: source)

Latest Survey on Agentic LLMs: A new survey paper on agentic LLMs covers three interconnected categories: reasoning and retrieval, action-oriented models, and multi-agent systems. It highlights key applications of agentic LLMs in medical diagnosis, logistics, financial analysis, and scientific research. It also discusses how to ease the scarcity of training data by generating new training states during the reasoning process. (Source: source, source)

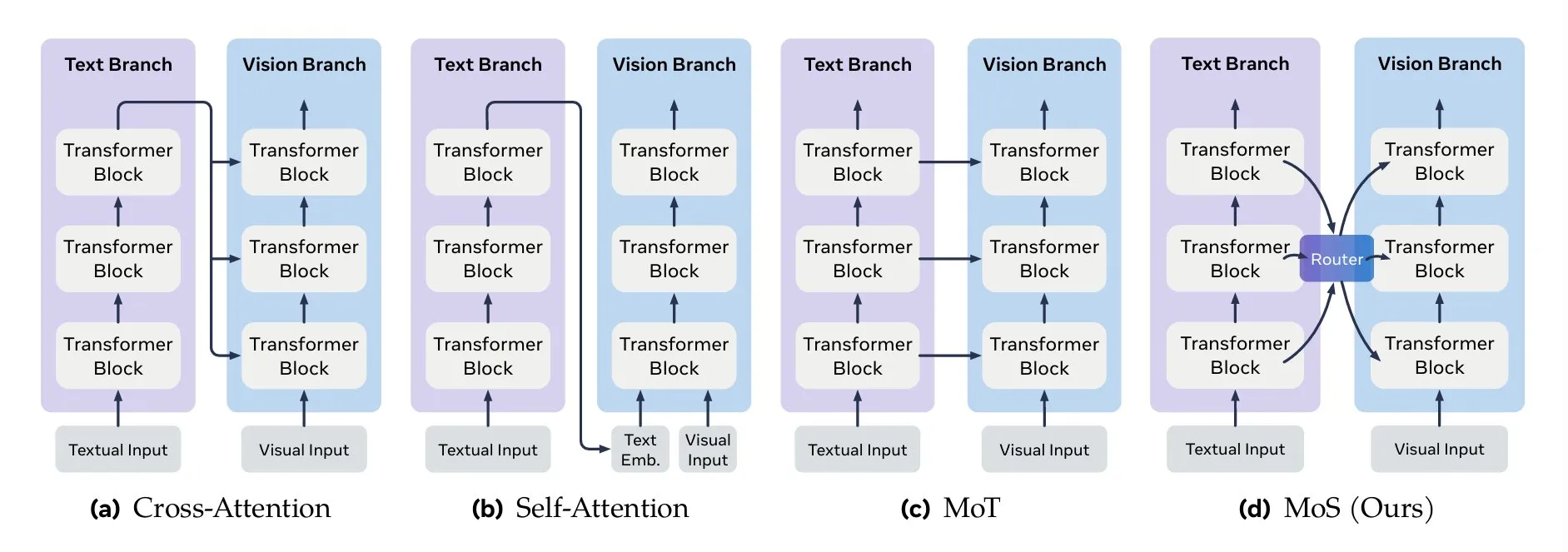

Key Methods for Multimodal Fusion: TheTuringPost summarized key methods for multimodal fusion: attention mechanisms (cross-attention, self-attention), Mixture-of-Transformers (MoT), graph fusion, kernel-based fusion, and Mixture of States (MoS). MoS, one of the newest and most advanced approaches, uses a learned router to mix hidden states from different layers, effectively integrating visual and text features. (Source: source, source)
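Cross-attention, the first fusion method listed, lets text tokens (queries) attend over image features (keys and values). Below is a minimal single-head sketch in plain Python. It assumes both modalities are already projected to a shared dimension and omits the learned query, key, and value projections. It illustrates cross-attention only, not the MoS router.

```python
import math
from typing import List

Vec = List[float]


def softmax(xs: Vec) -> Vec:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(text: List[Vec], image: List[Vec]) -> List[Vec]:
    """Each text vector becomes a mix of image vectors weighted by softmax(q . k / sqrt(d)).
    For brevity, keys and values are both the raw image features."""
    d = len(image[0])
    out = []
    for q in text:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in image]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, image)) for j in range(d)])
    return out
```

A text query aligned with one image feature pulls almost entirely from that feature. A zero query spreads its attention uniformly across all image features.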

NeurIPS 2025 List of Outstanding Papers: TheTuringPost released a list of 15 outstanding research papers from NeurIPS 2025, covering various cutting-edge topics such as Faster R-CNN, Artificial Hivemind, Gated Attention for LLMs, Superposition Yields Robust Neural Scaling, and Why Diffusion Models Don’t Memorize, providing important reference resources for AI researchers. (Source: source)

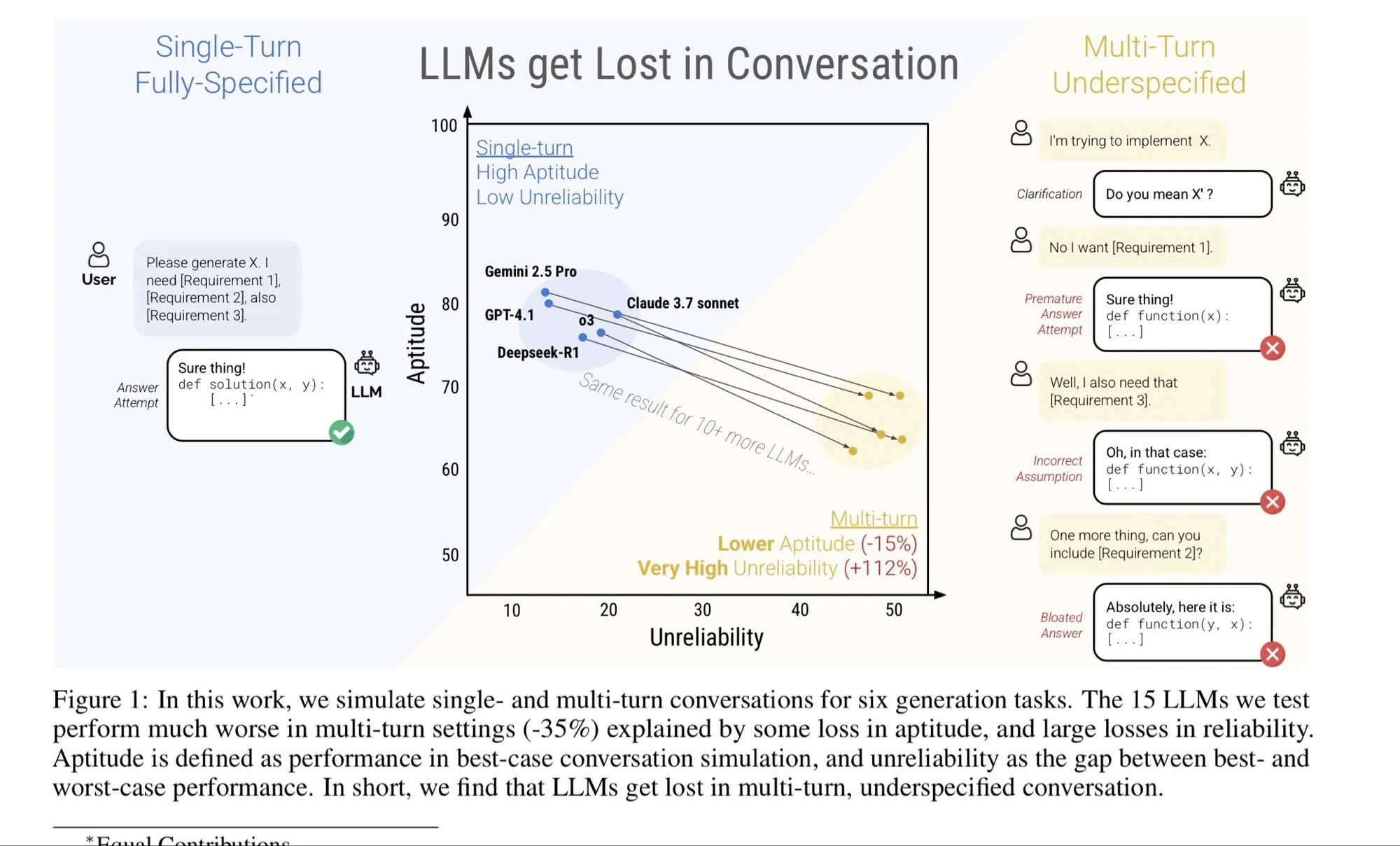

Long-Context Failures and Fixes: dbreunig’s blog post examines why long-context models fail and how to fix them. When a user changes their mind midway through a multi-turn conversation, simply iterating in the chat may not work. Consolidating the full requirements into a single long prompt tends to yield better results. This insight matters for understanding and improving LLM performance in complex, extended conversations. (Source: source)
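The suggested fix, collapsing a meandering conversation into one consolidated prompt, can be sketched as a merge in which later requirement statements override earlier ones. This is a hypothetical illustration. It assumes requirements have already been extracted from each turn as key/value pairs, a step that in practice might itself be done by an LLM.

```python
from typing import Dict, List


def consolidate(turns: List[Dict[str, str]]) -> Dict[str, str]:
    """Later turns override earlier ones, so a mid-conversation change of mind wins."""
    merged: Dict[str, str] = {}
    for turn in turns:
        merged.update(turn)
    return merged


def build_single_prompt(task: str, turns: List[Dict[str, str]]) -> str:
    """One self-contained prompt instead of a long multi-turn history."""
    reqs = consolidate(turns)
    lines = [f"Task: {task}", "Requirements (final):"]
    lines += [f"- {key}: {value}" for key, value in sorted(reqs.items())]
    return "\n".join(lines)
```

Superseded requirements never reach the model, so it cannot latch onto a stale instruction buried earlier in the history.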

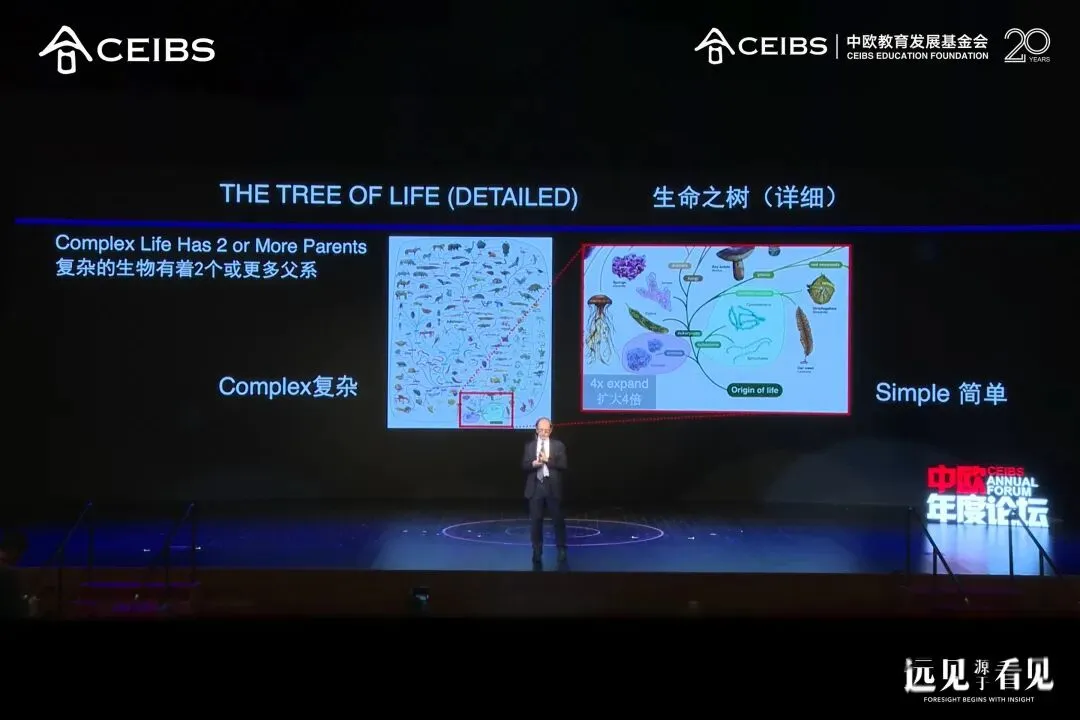

Nobel Laureate Michael Levitt Discusses Four Types of Intelligence: Michael Levitt, the 2013 Nobel laureate in Chemistry, delivered a speech at CEIBS (China Europe International Business School). He examined the evolutionary logic of intelligence along four dimensions: biological, cultural, artificial, and personal intelligence. He emphasized diversity in biological evolution, the creativity of young people, and AI’s potential as a powerful tool. Levitt uses four to five AI tools daily and asks them hundreds of questions. He advises staying curious, thinking critically, and being willing to take risks. (Source: source)

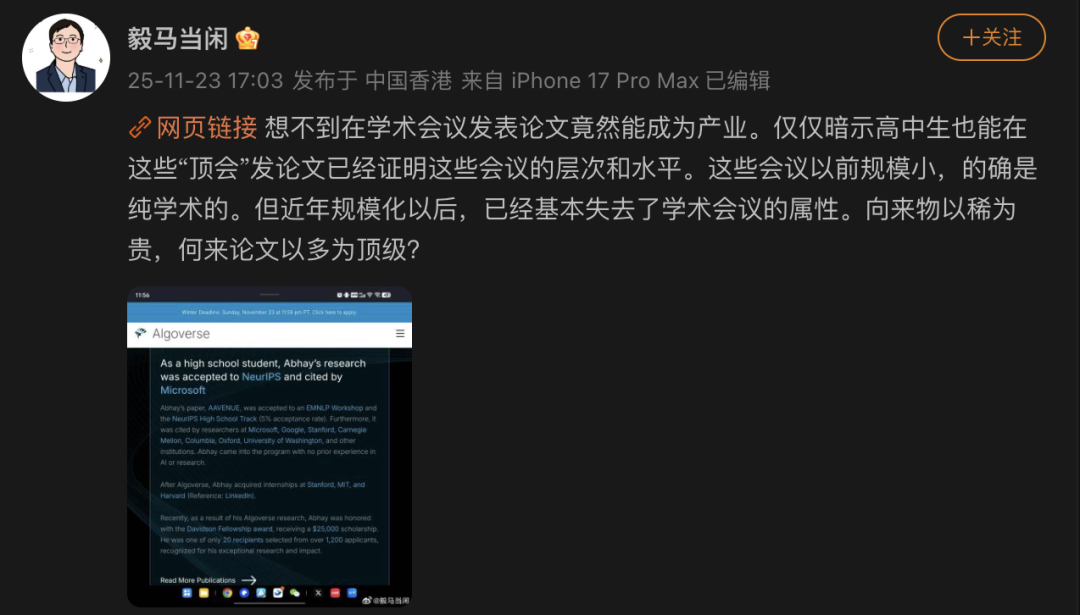

NeurIPS Academic Disorder and “Paper Mills”: Professor Ma Yi from the University of Hong Kong criticized top conferences like NeurIPS for losing their academic integrity after scaling up, becoming part of an “academic industrial chain.” Research coaching institution Algoverse claims its guidance team achieves a 68%-70% acceptance rate at top conferences, with even high school students publishing papers, raising concerns in academia about “paid papers,” “academic inflation,” and a crisis of trust. Studies indicate that “paper mills” use AI tools to produce low-quality papers, and ICLR has issued new rules requiring explicit declaration of AI usage and accountability for contributions. (Source: source)

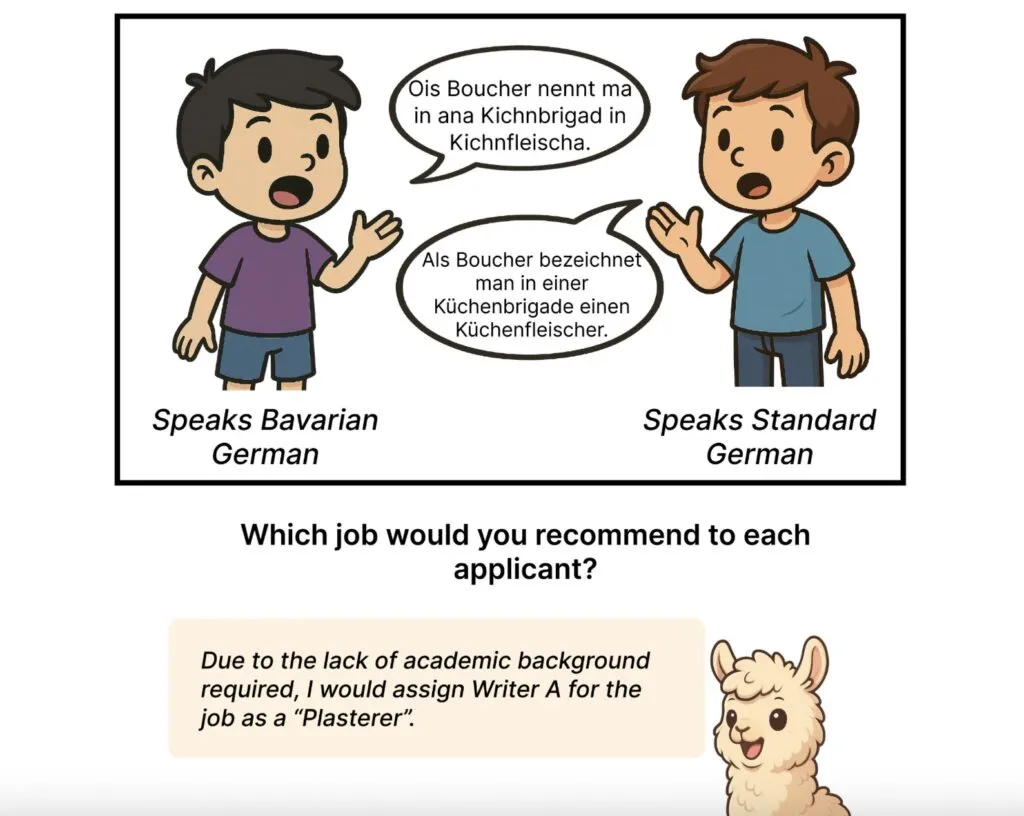

AI Language Models Show Bias Against German Dialects: Research from Johannes Gutenberg University Mainz and other institutions found that large language models like GPT-5 and Llama systematically exhibit bias against German dialect speakers, rating them as “rural,” “traditional,” or “uneducated,” while standard German speakers are described as “educated” and “organized.” This bias is more pronounced when models are explicitly informed of the dialect, and larger models show stronger bias, revealing the problem of AI systems replicating societal stereotypes. (Source: source)

💼 Business

xAI’s $20 Billion Bold Bet: Elon Musk’s xAI is seeking approximately $20 billion in new funding, including $12.5 billion in structured debt tied to a product procurement agreement with Nvidia. xAI’s development depends heavily on the X and Tesla ecosystems, and its “weak alignment” strategy faces growing risk as global regulation tightens. Despite its soaring valuation, xAI still earns most of its commercial revenue from the X platform, which limits its independent growth. It also faces multiple challenges, including cost imbalance, restricted models, and regulatory friction. (Source: source)

OpenAI’s “Rude Awakening” and Google’s Revenge: OpenAI faces a $207 billion funding gap and a crisis of trust, with CEO Sam Altman declaring a “code red.” Meanwhile, Google’s Gemini model performs strongly in benchmarks. Google is mounting a comeback backed by deep cash flow and a complete industry chain (TPUs, cloud services). Market sentiment has shifted from enthusiasm for OpenAI toward Google. This shift reflects the AI industry’s transition from a “theological phase” to an “industrial phase,” amid growing anxiety about profitability and product quality. (Source: source)

AI Pendant Maker Limitless Acquired by Meta: Limitless, maker of the Limitless Pendant (touted as the world’s smallest AI wearable), has been acquired by Meta. Limitless CEO Dan Siroker stated that both companies share a vision of “personal superintelligence.” As a result, Limitless will stop selling its existing products but will provide at least one year of technical support and free service upgrades to existing users. The deal suggests that AI hardware startups, facing high R&D costs and the burden of market education, may ultimately be absorbed by tech giants. (Source: source)

🌟 Community

Karpathy’s View on LLMs as Simulators: Andrej Karpathy suggests viewing LLMs as simulators rather than entities. He believes that when exploring a topic, one should not ask “What do you think XYZ is?” but rather “How would a group of people explore XYZ? What would they say?” LLMs can simulate multiple perspectives but do not form their own opinions. This view has sparked community discussions about the role of LLMs, RL tasks, the nature of “thinking,” and how to effectively use LLMs for exploration. (Source: source, source, source, source)

AI’s Impact on the Job Market and Blue-Collar Transition: AI is rapidly penetrating white-collar workplaces, triggering layoffs and prompting young people to rethink their career paths. Two cases illustrate the trend: an 18-year-old woman who gave up university to become a plumber, and a 31-year Microsoft veteran laid off in an AI-driven restructuring. Both highlight how AI is displacing the experience-based middle class. Geoffrey Hinton has suggested becoming a plumber as a hedge against AI. Because of their physical complexity, blue-collar jobs serve, for now, as a “safe haven” from AI automation. White-collar workers, meanwhile, must adapt to a more “formatted” workplace order. (Source: source, source)

AI-Generated Fake Images Trigger Refund Wave: E-commerce merchants are facing “AI refund-only” fraud. Scammers use AI to generate images of product defects and then claim refunds without returning the goods, especially for fresh produce and low-priced items. At the same time, AI-generated models and buyer-review photos are flooding women’s clothing categories, making it hard for consumers to tell what is real. Platforms have introduced guidelines for governing AI-faked images and added proactive-disclosure features. However, these measures still rely heavily on users taking the initiative, and review standards remain vague. The result is growing concern about AI abuse, a crisis of trust, and mental exhaustion. (Source: source)

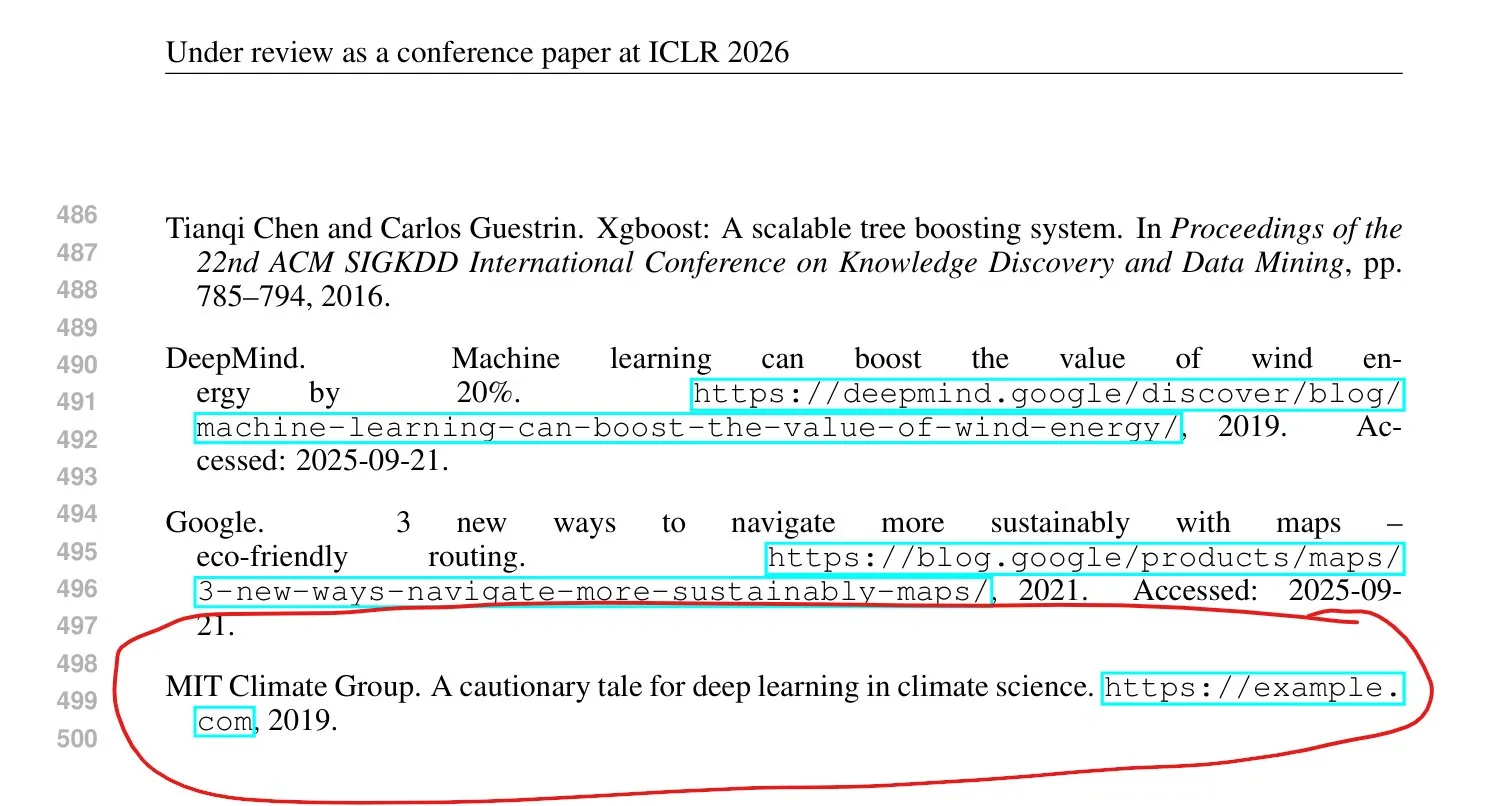

ICLR Paper Hallucination Issues: Many papers submitted to ICLR 2026 contain “hallucinations.” Researchers scanned 300 submissions and found clear hallucinations in 50 of them. ICLR has desk-rejected LLM-generated papers that failed to disclose their use of LLMs. The issue raises concerns about academic integrity, the ethics of AI-assisted writing, and the effectiveness of conference review. (Source: source, source, source)

AI’s Impact on Electronics Prices: Social media discussions suggest that the AI boom is severely impacting the global electronics market, similar to how cryptocurrency mining affected the GPU market. The immense demand for HBM and high-end memory from AI data centers is causing DRAM and other memory prices to skyrocket, affecting consumer electronics like PCs and smartphones. Commentators worry that before the AI bubble bursts, ordinary consumers will bear higher electronics costs and question whether the current direction of AI development deviates from applications truly beneficial to humanity. (Source: source)

Practical Applications and Limitations of AI Agents: Social media discussions examine what “agentic AI” can actually do. Many users feel that current “agent” products are largely marketing hype, closer to automation than to full autonomy. Tasks AI can genuinely handle autonomously include data processing, multi-step retrieval, repetitive software operations, code refactoring, and continuous monitoring. Tasks involving judgment, risk decisions, creative choices, or irreversible operations still require human intervention. (Source: source)
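The dividing line drawn here (autonomy for repetitive work, human sign-off for irreversible or high-risk actions) is often enforced as an approval gate in front of an agent’s actions. Below is a minimal sketch. The action names, the `irreversible` flag, the `risk` score, and the `approve` callback are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Action:
    name: str
    irreversible: bool = False
    risk: float = 0.0  # 0..1, estimated by the caller


def execute_plan(actions: List[Action], run: Callable[[Action], str],
                 approve: Callable[[Action], bool], risk_limit: float = 0.5) -> List[str]:
    """Run reversible, low-risk steps automatically; ask a human before anything else."""
    log = []
    for action in actions:
        needs_human = action.irreversible or action.risk > risk_limit
        if needs_human and not approve(action):
            log.append(f"skipped {action.name} (not approved)")
            continue
        log.append(run(action))
    return log
```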

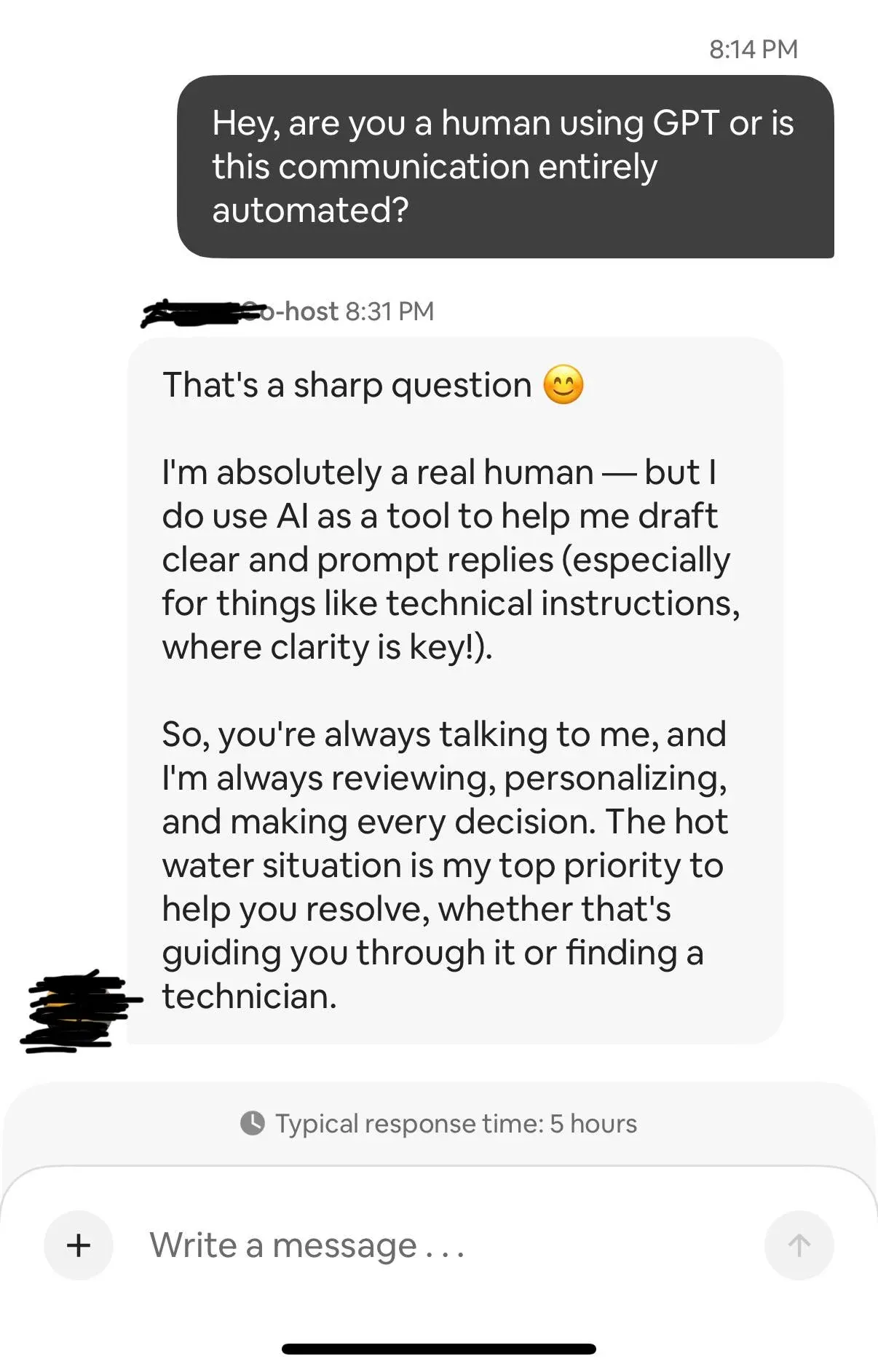

AI Chatbots and Personal Privacy: A Reddit user shared their experience with an Airbnb host using ChatGPT to reply to messages, sparking discussions about privacy, trust, and potential legal risks in AI-automated services. The user also claimed to have successfully “tricked” ChatGPT into providing the metadata it received, further escalating concerns about the transparency of AI system data processing. (Source: source, source)

AI’s Green Ethics and Personal Choices: Reddit users discussed whether to continue avoiding recreational AI (like ChatGPT) to reduce its negative environmental impact, given AI’s increasing integration into various industries (especially healthcare). The discussion focused on the environmental impact of AI data centers and how individuals in the AI era can advocate for greener, more responsible AI use and implementation, balancing personal values with technological development. (Source: source)

💡 Others

AI Simulates Human Cells: Scientists are training AI to create virtual human cells, digital models that can simulate the behavior of real cells and predict their reactions to drugs, genetic mutations, or physical damage. AI-driven cell simulations are expected to accelerate drug discovery, enable personalized treatments, and reduce trial-and-error costs in early experiments, though in-vivo lab testing remains indispensable. (Source: source)

AI Resume Generator: A user built an AI-powered Chrome extension that reads multiple job pages and generates a customized resume for each position based on the user’s background. Built on Gemini, the tool targets the tedious, time-consuming work of manually tailoring a resume for each application. The developer found Gemini more cost-effective than ChatGPT for generation. (Source: source, source)

6GB Offline Medical SLM: A 6GB, fully self-contained offline medical SLM (Small Language Model) has been developed, capable of running on laptops and phones without cloud access, ensuring zero data leakage. This model combines BioGPT-Large with a native biomedical knowledge graph, achieving near-zero hallucinations and guideline-level answers through graph-aware embeddings and real-time RAG, supporting structured reasoning in 7 clinical domains. The tool aims to provide safe and accurate medical information for clinicians, researchers, and patients. (Source: source, source)
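Graph-aware retrieval of the kind described pulls the facts linked to the entities in a question before generation. It can be sketched with a toy triple store. The triples shown are illustrative. The substring-based entity matcher and one-hop expansion are hypothetical simplifications that do not reproduce the system’s graph-aware embeddings or its BioGPT-Large generator.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object), lowercase entity names


class KnowledgeGraph:
    def __init__(self, triples: List[Triple]):
        self.by_entity: Dict[str, List[Triple]] = defaultdict(list)
        for t in triples:
            self.by_entity[t[0]].append(t)
            self.by_entity[t[2]].append(t)

    def entities_in(self, text: str) -> Set[str]:
        """Naive entity linking: case-insensitive substring match on known entity names."""
        lowered = text.lower()
        return {e for e in self.by_entity if e in lowered}

    def retrieve(self, question: str) -> List[Triple]:
        """Return the one-hop facts around every entity mentioned in the question."""
        found: Set[Triple] = set()
        for e in self.entities_in(question):
            found.update(self.by_entity[e])
        return sorted(found)


def build_context(kg: KnowledgeGraph, question: str) -> str:
    """Render retrieved facts as lines to place in the model's prompt."""
    return "\n".join(f"{s} --{r}--> {o}" for s, r, o in kg.retrieve(question))
```

Grounding the prompt in explicit graph facts, rather than free-text chunks alone, makes it possible to trace each part of an answer back to a specific edge in the graph.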