Keywords: AI IDE, Gemini 3, LLM, AI Agent, CUDA Tile, FP8 quantization, NeurIPS 2025, Google Antigravity AI IDE data deletion, Gemini 3 Pro multimodal understanding, LLM inference cost optimization, Kimi Linear architecture performance gains, NVIDIA CUDA Tile programming model

🎯 Trends

AI IDE Accidentally Wipes a User's Drive: Google's Antigravity AI IDE permanently deleted the contents of a user's D: drive while trying to clear a cache, after misinterpreting an instruction and acting autonomously in “Turbo mode.” The incident shows how severe the consequences can be when an AI agent tool with high system privileges misjudges a task, and it raises concerns about the security boundaries and permission management of AI programming tools. Running such tools inside a virtual machine or sandbox is recommended. (Source: 36氪)
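
Beyond sandboxing, one common mitigation is to check every destructive file operation an agent requests against an allowed workspace root before running it. The sketch below is a minimal illustration of that idea, assuming Python 3.9+ (for `Path.is_relative_to`); `safe_rmtree` and the workspace layout are hypothetical and are not part of Antigravity or any specific IDE.

```python
import shutil
import tempfile
from pathlib import Path


def is_within_workspace(target: Path, workspace: Path) -> bool:
    """True if target, after resolving symlinks and '..', lies inside workspace (Python 3.9+)."""
    return target.resolve().is_relative_to(workspace.resolve())


def safe_rmtree(target: str, workspace: str) -> None:
    """Hypothetical guard: delete a directory only if it sits strictly inside the workspace."""
    t, w = Path(target), Path(workspace)
    # Refuse to delete the workspace root itself or anything that resolves outside it.
    if t.resolve() == w.resolve() or not is_within_workspace(t, w):
        raise PermissionError(f"refusing to delete {t.resolve()}: outside workspace {w.resolve()}")
    shutil.rmtree(t)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as ws:
        cache = Path(ws) / ".cache"
        cache.mkdir()
        safe_rmtree(str(cache), ws)                # allowed: inside the workspace
        try:
            safe_rmtree(str(Path(ws).parent), ws)  # blocked: outside the workspace
        except PermissionError as exc:
            print(exc)
```

Resolving paths before comparing them matters: a relative path like `../..` or a symlink would otherwise slip past a naive string-prefix check.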

Hinton Predicts Google Will Surpass OpenAI: Geoffrey Hinton, often called the “godfather of AI,” predicts that Google will overtake OpenAI, citing Gemini 3, Google's in-house chips, its strong research team, and its data advantages. He also points to Google's significant progress in multimodal understanding (documents, spatial reasoning, screens, and video), particularly the success of Gemini 3 Pro and Nano Banana Pro. Meanwhile, slowing ChatGPT growth is prompting OpenAI to refocus on core product quality as competition intensifies. (Source: 36氪)

“State of AI 2025” Report Reveals LLM Usage Trends: The “State of AI 2025” report, based on trillions of tokens of real-world LLM usage data, finds that AI is shifting toward agents that “think and act” (agentic inference). According to the report, role-playing and programming together account for nearly 90% of AI usage, medium-sized models are taking market share from large models, reasoning models are becoming mainstream, and Chinese open-source models are rapidly gaining ground. (Source: dotey)

Enterprise AI Agent Applications Face Reliability Challenges: The 2025 Enterprise AI Report shows high adoption of third-party tools, but most internally built AI agents fail to get past the pilot stage, and employees push back against AI pilots. The agents that do succeed prioritize reliability over breadth of functionality, suggesting that stability, not feature complexity, is the key consideration for enterprise AI adoption. (Source: dbreunig)

LLM Inference Costs Need Significant Reduction for Scalable Deployment: A report attributed to a Google employee argues that, because the ad revenue from each search is minuscule, LLM inference costs must fall by about 10x before LLMs can be deployed at scale. This underscores the cost challenge LLMs face in commercial applications, a critical bottleneck for both technical optimization and business model innovation. (Source: suchenzang)

Kimi Linear Architecture Report Released, Achieving Performance and Speed Improvements: The Kimi Linear technical report introduces a new architecture built around its KDA (Kimi Delta Attention) kernel, which beats traditional full attention on both speed and performance and is designed as a drop-in replacement for it. This marks significant progress in the efficiency of LLM architectures. (Source: teortaxesTex, Teknium)
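
The KDA kernel itself is not reproduced here. As a hedged illustration of the linear-attention family it belongs to, the sketch below shows plain, unnormalized causal linear attention: the same result can be computed in parallel like ordinary attention, or as a recurrence over a fixed-size state, so per-token cost does not grow with sequence length. The ELU+1 feature map and all shapes are assumptions; KDA adds delta-rule updates and gating that this sketch omits.

```python
import numpy as np


def feature_map(x: np.ndarray) -> np.ndarray:
    # ELU(x) + 1, a common positive feature map in linear attention (an assumption here).
    return np.where(x > 0, x + 1.0, np.exp(x))


def causal_linear_attention_recurrent(q, k, v):
    """Process tokens one at a time with a fixed-size (d_k x d_v) state."""
    phi_q, phi_k = feature_map(q), feature_map(k)
    T, d_k = phi_q.shape
    d_v = v.shape[1]
    state = np.zeros((d_k, d_v))
    out = np.zeros((T, d_v))
    for t in range(T):
        state += np.outer(phi_k[t], v[t])  # accumulate key-value outer products
        out[t] = phi_q[t] @ state          # read out with the current query
    return out


def causal_linear_attention_parallel(q, k, v):
    """The same computation in 'attention' form with an explicit causal mask."""
    phi_q, phi_k = feature_map(q), feature_map(k)
    scores = phi_q @ phi_k.T                  # (T, T) similarities, no softmax
    mask = np.tril(np.ones_like(scores))      # token t sees tokens 0..t only
    return (scores * mask) @ v


rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((6, 4)) for _ in range(3))
print(np.allclose(causal_linear_attention_recurrent(q, k, v),
                  causal_linear_attention_parallel(q, k, v)))  # True
```

The recurrent form is what makes linear attention attractive for long contexts: decoding keeps only `state`, instead of a key-value cache that grows with every token.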

ByteDance Doubao AI Phone Released, GUI Agent Capabilities Attract Attention: ByteDance and ZTE launched a smartphone with the Doubao AI assistant built in, featuring GUI Agent capabilities: it can “understand” the phone screen and simulate taps to complete complex cross-app tasks such as comparing prices and booking tickets. The launch ushers in the GUI Agent era but has met resistance from apps such as WeChat and Alipay, suggesting that AI assistants could reshape how users interact with apps. (Source: dotey)

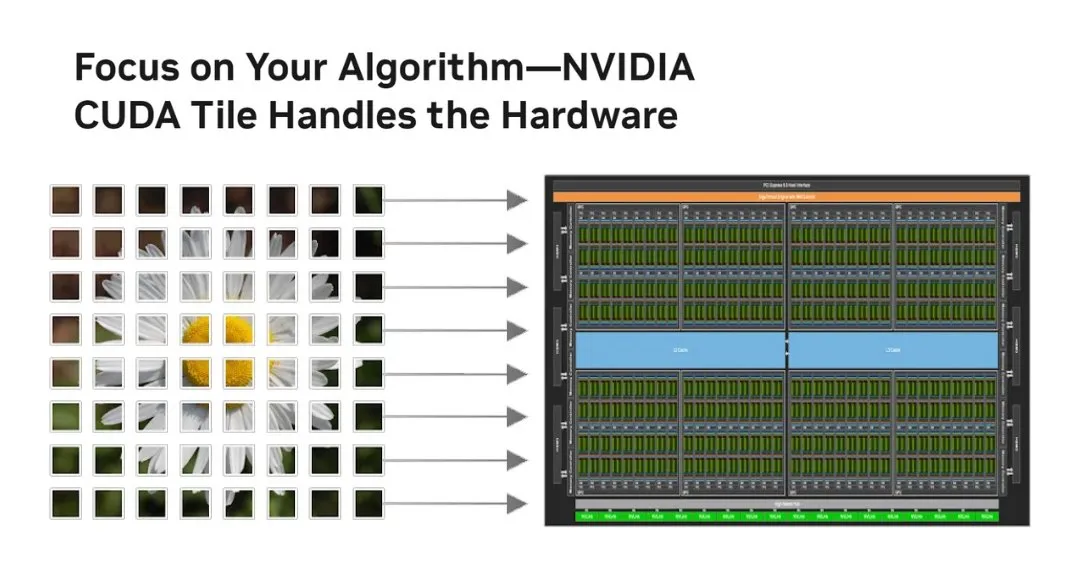

NVIDIA Introduces CUDA Tile, Reshaping the GPU Programming Model: NVIDIA announced CUDA Tile, the biggest change to CUDA since 2006, which shifts GPU programming from thread-level SIMT to tile-based operations. Through CUDA Tile IR it abstracts away the hardware so the same code can run efficiently across GPU generations, and it simplifies writing high-performance GPU algorithms, especially those that use Tensor Cores and other tensor-acceleration hardware. (Source: TheTuringPost, TheTuringPost)
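
CUDA Tile's actual syntax is not shown here. As a hedged, CPU-only illustration of the tile-based mindset, the NumPy sketch below expresses a matrix multiply as operations on whole tiles rather than on individual elements (the per-thread view in SIMT terms). The function name and tile size are assumptions; on a GPU, a compiler or runtime would map each tile-level operation onto hardware such as Tensor Cores.

```python
import numpy as np


def tiled_matmul(a: np.ndarray, b: np.ndarray, tile: int = 32) -> np.ndarray:
    """C = A @ B computed tile by tile; each (i, j) output tile is one unit of work."""
    m, k = a.shape
    k2, n = b.shape
    assert k == k2, "inner dimensions must match"
    c = np.zeros((m, n), dtype=np.result_type(a, b))
    for i in range(0, m, tile):          # rows of output tiles
        for j in range(0, n, tile):      # columns of output tiles
            acc = np.zeros((min(tile, m - i), min(tile, n - j)), dtype=c.dtype)
            for p in range(0, k, tile):  # march along the shared dimension
                # One tile-level multiply-accumulate; slicing handles ragged edge tiles.
                acc += a[i:i + tile, p:p + tile] @ b[p:p + tile, j:j + tile]
            c[i:i + tile, j:j + tile] = acc
    return c


rng = np.random.default_rng(0)
a, b = rng.standard_normal((70, 45)), rng.standard_normal((45, 33))
print(np.allclose(tiled_matmul(a, b, tile=16), a @ b))  # True
```

The programmer reasons about which tiles combine, not which thread computes which element; that division of labor is the shift the CUDA Tile announcement describes.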

FP8 Quantization Makes LLMs Easier to Deploy on Consumer GPUs: FP8 quantization cuts the VRAM requirement of the RnJ-1-Instruct-8B model from 16 GB to 8 GB with minimal performance loss (approx. -0.9% on GSM8K and -1.2% on MMLU-Pro), letting it run on consumer GPUs such as the 12 GB RTX 3060. This substantially lowers the hardware barrier for high-performance LLMs and makes them more practical on personal devices. (Source: Reddit r/LocalLLaMA)
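
The 16 GB to 8 GB figure follows from bytes per weight: an 8B-parameter model stored in 16-bit precision needs about 2 bytes per parameter, while FP8 needs 1. The helper below is a back-of-the-envelope sketch that counts weights only, treating "8B" as exactly 8 billion parameters (an assumption). KV cache, activations, and runtime overhead are ignored here, and they are one reason headroom beyond the raw weight size matters in practice.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes); ignores KV cache and activations."""
    return num_params * bits_per_param / 8 / 1e9


params = 8e9  # assumption: "8B" taken as exactly 8 billion parameters
for name, bits in [("BF16/FP16", 16), ("FP8", 8)]:
    print(f"{name}: {weight_memory_gb(params, bits):.1f} GB")
# BF16/FP16: 16.0 GB
# FP8: 8.0 GB
```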

AI-Generated Ads Outperform Human Experts, but Only When AI Authorship Is Hidden: Research shows that fully AI-generated ads achieve a 19% higher click-through rate than ads made by human experts, but only when viewers do not know the ad was AI-generated. Once AI involvement is disclosed, ad effectiveness drops by 31.5%. The findings point to AI's considerable potential in advertising creative while raising ethical and market questions about AI content transparency and consumer acceptance. (Source: Reddit r/artificial)

🧰 Tools

Microsoft Foundry Local: Platform for Running Generative AI Models Locally: Microsoft launched Foundry Local, a platform for running generative AI models on local devices without an Azure subscription, keeping data private and secure. It optimizes performance through ONNX Runtime and hardware acceleration, and it provides OpenAI-compatible APIs and SDKs for multiple languages so developers can integrate models into their applications, making it well suited to edge computing and AI prototyping. (Source: GitHub Trending)
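
Because Foundry Local exposes an OpenAI-compatible API, a client can talk to it with a standard chat-completions request. The stdlib-only sketch below builds such a request. `BASE_URL` (including the port) and `MODEL` are hypothetical placeholders; the real endpoint and model alias should be taken from Foundry Local's own CLI or SDK. The request is only sent when `send=True`.

```python
import json
import urllib.request

# Hypothetical placeholders: use the endpoint and model alias your local install reports.
BASE_URL = "http://localhost:5273/v1"
MODEL = "example-model"


def build_chat_request(prompt: str, base_url: str = BASE_URL, model: str = MODEL) -> urllib.request.Request:
    """Build an OpenAI-style POST /chat/completions request (not sent yet)."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, send: bool = False):
    """Return the request for inspection, or send it and return the reply text."""
    req = build_chat_request(prompt)
    if not send:
        return req
    with urllib.request.urlopen(req) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Because the wire format is OpenAI-compatible, existing OpenAI client code can typically be pointed at the local endpoint just by changing its base URL.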

PAL MCP: Multi-Model AI Agent Collaboration and Context Management: The PAL MCP (Model Context Protocol) server lets multiple AI models (e.g., Gemini, OpenAI, Grok, Ollama) work together inside a single CLI (e.g., Claude Code, Gemini CLI). It supports conversation continuity, context restoration, multi-model code review, debugging, and planning, and its clink tool bridges between different CLIs, improving AI development efficiency and the handling of complex tasks. (Source: GitHub Trending)
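
MCP messages are JSON-RPC 2.0; a client asks a server such as PAL MCP to run one of its tools with a `tools/call` request. The sketch below builds that message. The tool name `chat` and its argument fields are hypothetical placeholders, not PAL MCP's documented schema; a client would normally discover the real tool names and input schemas through a `tools/list` request first.

```python
import json
from itertools import count

_ids = count(1)  # request ids should not be reused within a session


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP tools/call request as a JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


# Hypothetical tool and arguments: ask a server-side tool to route a prompt to another model.
print(make_tool_call("chat", {"prompt": "Review this diff", "model": "gemini-pro"}))
```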

NVIDIA cuTile Python: GPU Parallel Kernel Programming Model: NVIDIA released cuTile Python, a programming model for writing parallel kernels for NVIDIA GPUs. It requires CUDA Toolkit 13.1 or later and offers a higher level of abstraction, simplifying GPU algorithm development and helping developers use GPU hardware more efficiently, which matters for deep learning and AI acceleration. (Source: GitHub Trending)

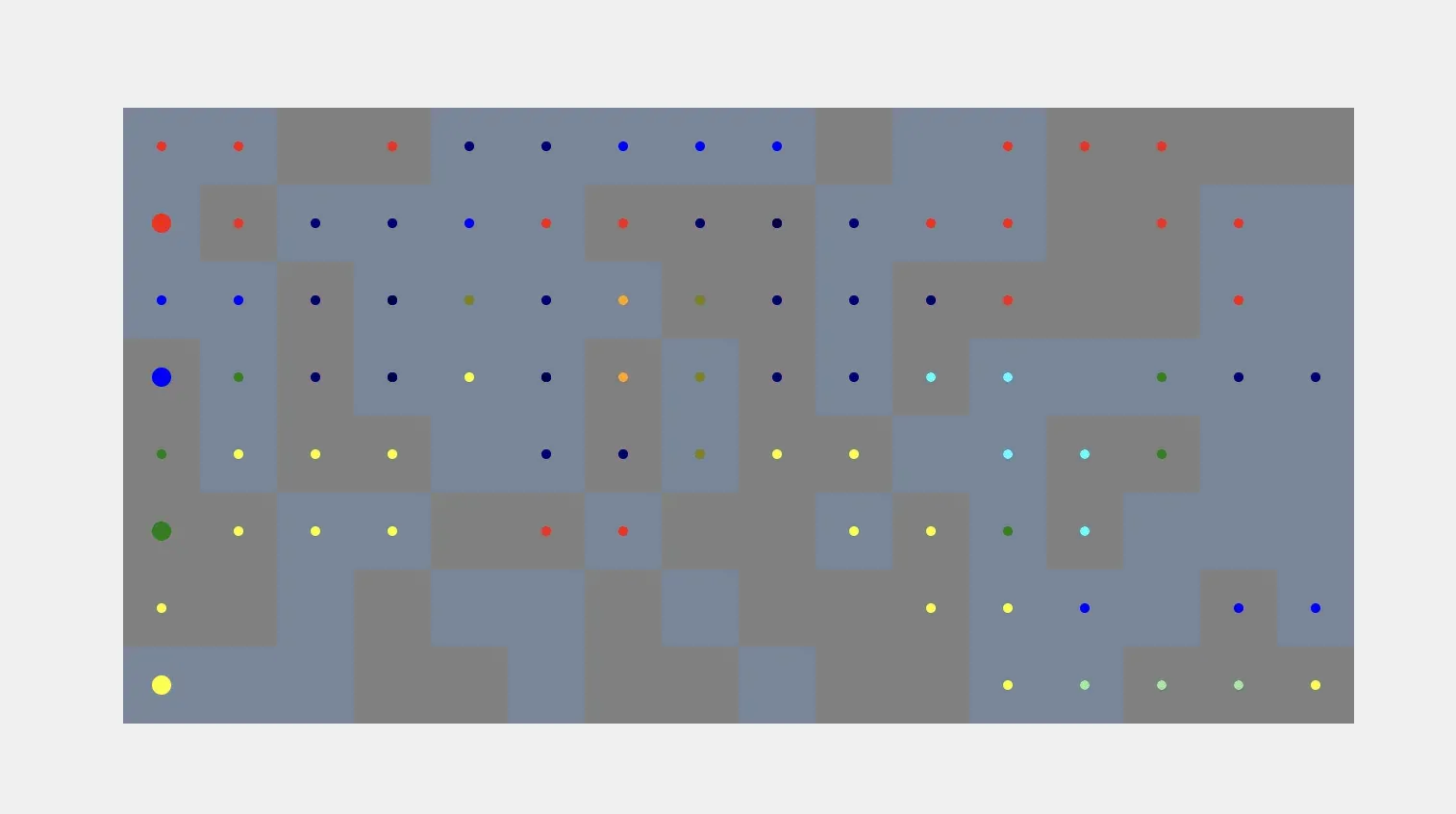

AI Agents in Simulation and Communication: AI agents can automatically generate voxel simulations from user prompts, automating the path from instruction to visual construction, although grounding voxel shapes in real-world objects remains a challenge. Meanwhile, Kylie, a multimodal WhatsApp AI agent, processes text, image, and voice input, manages tasks, and performs real-time web searches, showing the practical value of AI agents for everyday communication and task management. (Source: cto_junior, qdrant_engine)

ChatGPT Voice Interaction and Custom Instruction Enhancements: Users praise ChatGPT's speech-to-text for its accuracy and smart cleanup of transcribed text, which makes it feel close to natural conversation. Users can also use custom instructions to turn ChatGPT into a critical thinking partner that points out factual errors and weak arguments and suggests alternatives, improving the quality and depth of the dialogue. (Source: Reddit r/ChatGPT, Reddit r/ChatGPT)

Hugging Face and Replit: AI-Assisted Development Platforms: Hugging Face provides skills resources that help users train models with AI tools, a sign of how AI will change the development of AI itself. Replit, meanwhile, is praised for its early strategic positioning and continuous innovation in AI development, giving developers an efficient, convenient environment for AI integration. (Source: ben_burtenshaw, amasad)

Speaker Diarization Helps AI Agents Understand Who Said What: Speechmatics offers real-time speaker diarization with word-level speaker labels, helping AI agents understand “who said what” in a conversation. The technology supports 55+ languages, can be deployed on-premises or in the cloud, and can be fine-tuned, improving agents' understanding of multi-party conversations. (Source: TheTuringPost)
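
Word-level speaker labels are easy for an agent to consume once consecutive words from the same speaker are merged into turns. The sketch below assumes a simple list of `(word, speaker)` pairs with made-up labels `S1`/`S2`; Speechmatics' real output format carries more fields (timings, confidence) and is not reproduced here.

```python
from itertools import groupby


def to_turns(words: list[tuple[str, str]]) -> list[tuple[str, str]]:
    """Merge consecutive words with the same speaker label into (speaker, text) turns."""
    return [
        (speaker, " ".join(w for w, _ in group))
        for speaker, group in groupby(words, key=lambda pair: pair[1])
    ]


words = [("Hi", "S1"), ("there", "S1"), ("Hello", "S2"),
         ("how", "S1"), ("are", "S1"), ("you", "S1")]
for speaker, text in to_turns(words):
    print(f"{speaker}: {text}")
# S1: Hi there
# S2: Hello
# S1: how are you
```

`itertools.groupby` only groups *adjacent* items, which is exactly what turn segmentation needs: S1 speaking again after S2 starts a new turn.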

vLLM and Cutting-Edge Models Land on Docker Model Runner: vLLM v0.12.0 and cutting-edge open-source models such as Ministral 3 and DeepSeek-V3.2 are now available on Docker Model Runner, so developers can run these models with a single command, simplifying deployment and improving developer productivity. (Source: vllm_project)

AI Content Generation Tools and Prompting Techniques: Synthesia launched a free AI Christmas video generator that creates AI Santa videos from a user-supplied script. Meanwhile, Nano Banana Pro supports JSON prompts for high-precision image generation, and the “reverse prompting” technique improves AI creative writing by explicitly excluding unwanted styles. Together these make AI content creation more convenient and controllable. (Source: synthesiaIO, algo_diver, nptacek)

AI-Assisted Development and Performance Optimization Tools: A father and his 5-year-old son, with no programming knowledge, built a Minecraft-themed educational game using AI tools such as Claude Opus 4.5, GitHub Copilot, and Gemini, showing how AI can lower the barrier to programming and encourage creativity. Separately, SGLang Diffusion's integration with Cache-DiT speeds up local image and video generation with diffusion models by 20-165%, substantially boosting AI creation efficiency. (Source: Reddit r/ChatGPT, Reddit r/LocalLLaMA)

📚 Learning

Datawhale “Building Agents from Scratch” Tutorial Released: The Datawhale community released the open-source tutorial “Building Agents from Scratch,” which aims to take learners from theory to practice in designing and implementing AI-native agents. It covers agent principles and history, LLM fundamentals, building classic agent paradigms, using low-code platforms, writing your own framework, memory and retrieval, context engineering, agentic RL training, performance evaluation, and end-to-end case studies, making it a valuable resource for learning agent technology systematically. (Source: GitHub Trending)
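
As a rough illustration of one classic paradigm such tutorials cover, the sketch below shows a minimal ReAct-style loop: reason, act with a tool, observe, repeat. The scripted `fake_llm`, the `Action: tool[input]` / `Final:` text protocol, and the `calculator` tool are all hypothetical stand-ins for a real model and toolset; the tutorial's own code may be structured differently.

```python
from typing import Callable


def calculator(expression: str) -> str:
    # Toy tool: a character whitelist keeps eval to plain arithmetic. Do not use eval like this in production.
    if not set(expression) <= set("0123456789+-*/() ."):
        return "error: unsupported characters"
    return str(eval(expression))


TOOLS: dict[str, Callable[[str], str]] = {"calculator": calculator}


def run_agent(llm: Callable[[str], str], question: str, max_steps: int = 5) -> str:
    """Loop: ask the model, run any requested tool, append the observation, repeat."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += reply + "\n"
        if reply.startswith("Final:"):
            return reply.removeprefix("Final:").strip()
        if reply.startswith("Action:"):
            # Assumed format: "Action: tool_name[input]"
            name, _, arg = reply.removeprefix("Action:").strip().partition("[")
            tool = TOOLS.get(name.strip())
            observation = tool(arg.rstrip("]")) if tool else f"unknown tool {name!r}"
            transcript += f"Observation: {observation}\n"
    return "stopped: step limit reached"


def fake_llm(transcript: str) -> str:
    """Scripted stand-in for a model: call the tool once, then answer from the observation."""
    if "Observation:" not in transcript:
        return "Action: calculator[17 * 3]"
    return "Final: " + transcript.strip().splitlines()[-1].split(": ")[-1]


print(run_agent(fake_llm, "What is 17 * 3?"))  # 51
```

The step limit is the important design choice: without it, a model that never emits `Final:` would loop forever, which is one of the failure modes agent tutorials warn about.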

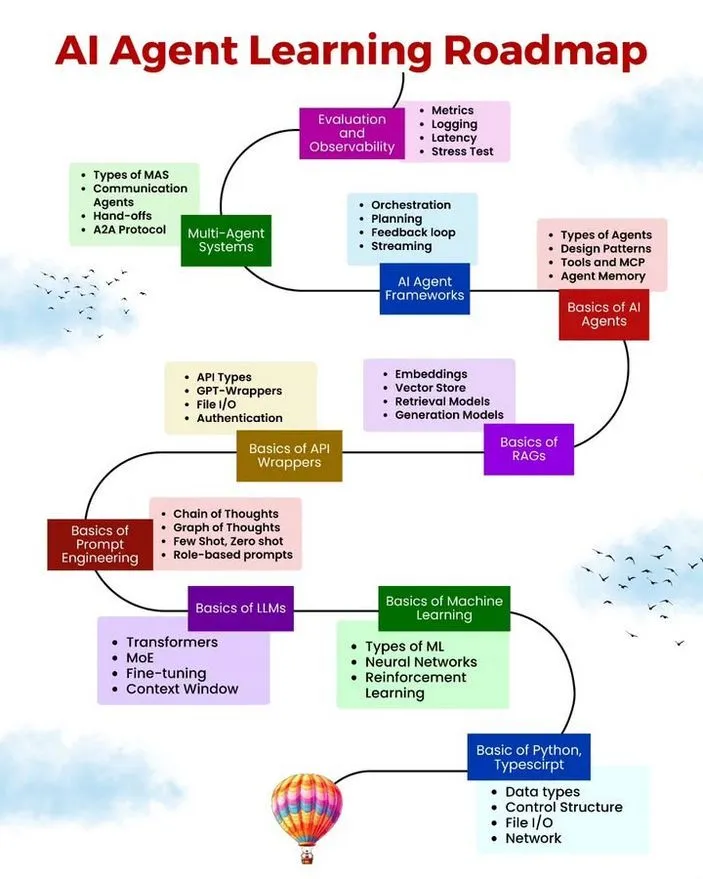

AI/ML Learning Resources, Roadmaps, and Common Agent Errors: Ronald van Loon shared an AI agent learning roadmap, free AI/ML learning resources, and 10 common mistakes to avoid in AI agent development. Together they give aspiring agent developers a systematic learning path, practical materials, and best practices for building more robust, efficient, and reliable agents. (Source: Ronald_vanLoon, Ronald_vanLoon, Ronald_vanLoon)

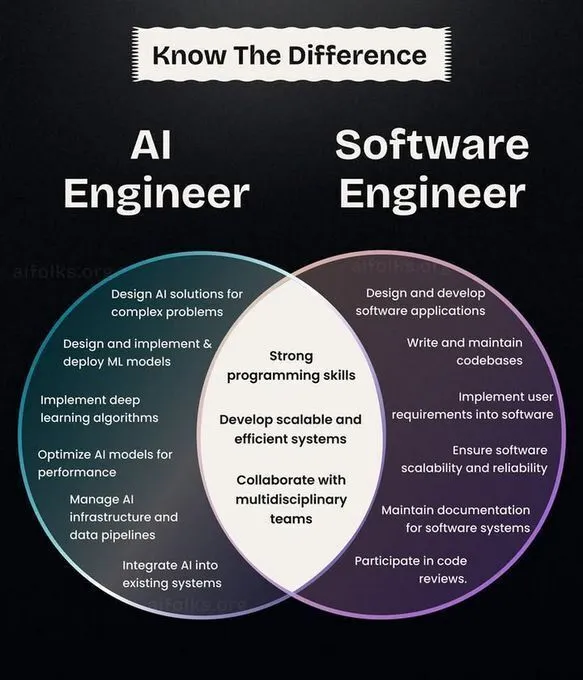

AI/ML Career Development, Learning Paths, and CNN Historical Review: Ronald van Loon shared a comparison of the AI Engineer and Software Engineer roles as a reference for career planning. The community also discussed how to get started in deep learning and research, recommending implementing algorithms from scratch for deeper understanding, and revisited the history of the invention of convolutional neural networks (CNNs), giving AI learners career direction, practical advice, and technical background. (Source: Ronald_vanLoon, Reddit r/deeplearning, Reddit r/MachineLearning, Reddit r/artificial)
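
In the spirit of the implement-it-from-scratch advice, and since CNNs come up in the same discussion, here is a minimal pure-Python 2D convolution with no padding and stride 1. Strictly speaking it is cross-correlation (the kernel is not flipped), which is what most deep learning libraries implement under the name "convolution." It is a teaching sketch, not an efficient implementation; the image and filter values are arbitrary.

```python
def conv2d(image: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding, stride 1."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Dot product between the kernel and the image patch currently under it.
            row.append(sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw)
            ))
        out.append(row)
    return out


image = [
    [1, 2, 3, 0],
    [4, 5, 6, 0],
    [7, 8, 9, 0],
]
edge = [[1, -1]]  # horizontal difference filter: responds where intensity changes left to right
print(conv2d(image, edge))  # [[-1, -1, 3], [-1, -1, 6], [-1, -1, 9]]
```

The large values in the last column mark the sharp drop to zero, which is the kind of local edge feature early CNN layers learn to detect.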

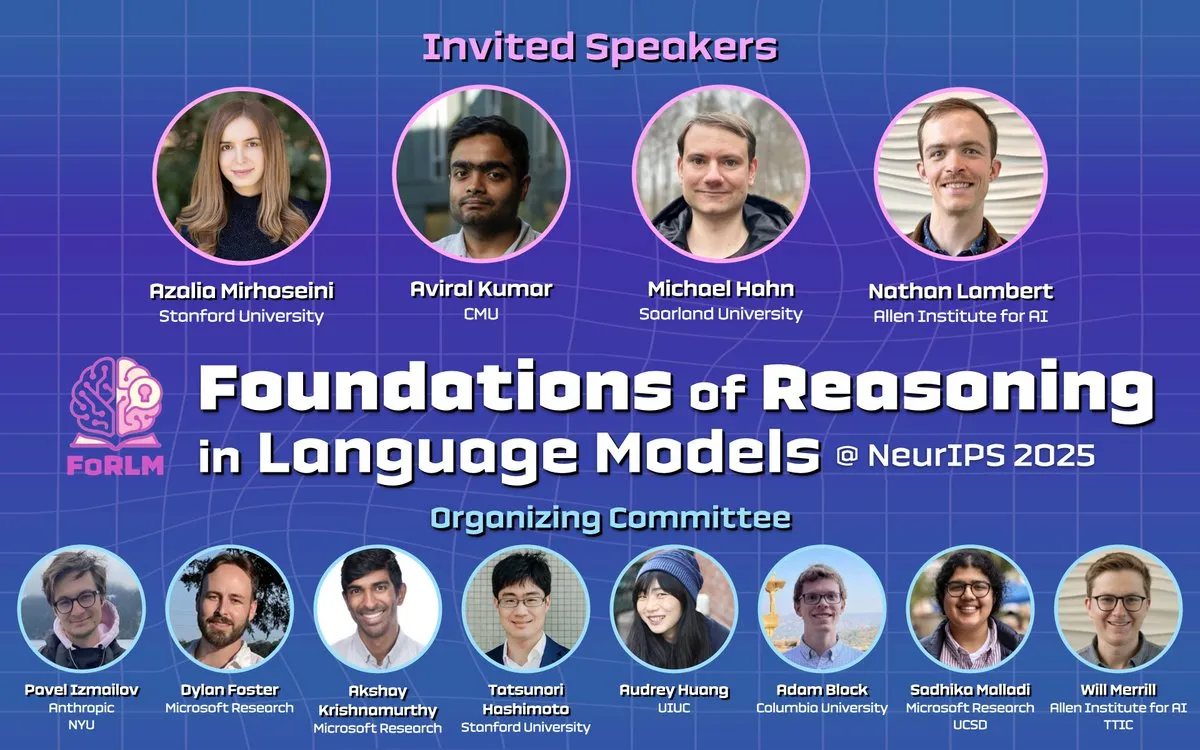

NeurIPS 2025 Conference Focuses on LLM Reasoning, Explainability, and Cutting-Edge Papers: During NeurIPS 2025, several workshops (e.g., Foundations of Reasoning in Language Models, the CogInterp Workshop, and the LAW 2025 workshop) examined the foundations of LLM reasoning, explainability, the structural assumptions behind RL post-training, and the semantics of intermediate tokens and whether they should be understood anthropomorphically. The conference also showcased a number of outstanding papers that deepen understanding of how LLMs work. (Source: natolambert, sarahookr, rao2z, lateinteraction, TheTuringPost)

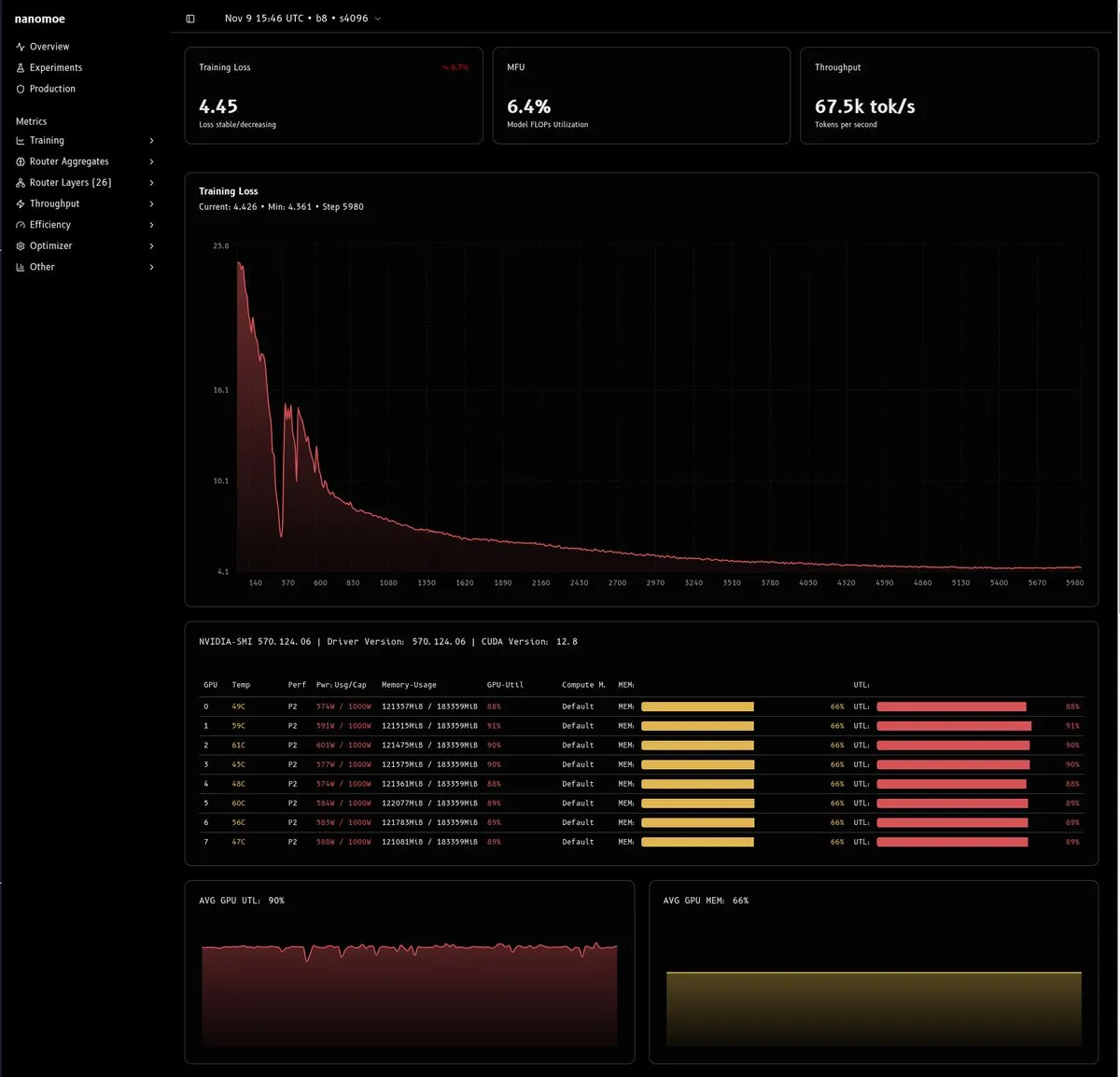

In-depth Analysis of MoE Model Training Challenges and Solutions: A detailed technical article examines why MoE models (especially those under 20B parameters) are hard to train, focusing on computational efficiency, load balancing and router stability, and data quality and quantity. It proposes remedies such as mixed-precision training, muP scaling, removing gradient clipping, and using virtual scalars, and stresses the importance of building high-quality data pipelines, offering practical lessons for MoE research and deployment. (Source: dejavucoder, tokenbender, eliebakouch, halvarflake, eliebakouch, teortaxesTex)
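
For the load-balancing problem mentioned above, one widely used remedy (not necessarily the one the article recommends) is the Switch Transformer auxiliary loss: for each expert, multiply the fraction of tokens routed to it by the mean router probability it receives, sum over experts, and scale by the number of experts N. The stdlib sketch below computes it for top-1 routing. It is 1.0 when tokens are spread evenly and rises toward N as routing collapses onto a single expert; the toy logits are arbitrary.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def load_balancing_loss(router_logits: list[list[float]]) -> float:
    """Switch-style auxiliary loss N * sum_i f_i * P_i for top-1 routing.

    router_logits: one list of per-expert logits per token.
    f_i: fraction of tokens whose top-1 expert is i.
    P_i: mean router probability assigned to expert i.
    """
    num_tokens = len(router_logits)
    num_experts = len(router_logits[0])
    probs = [softmax(row) for row in router_logits]
    counts = [0] * num_experts
    for p in probs:
        counts[max(range(num_experts), key=p.__getitem__)] += 1  # top-1 expert
    f = [c / num_tokens for c in counts]
    mean_p = [sum(p[i] for p in probs) / num_tokens for i in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, mean_p))


balanced = [[5.0, 0.0], [0.0, 5.0]]   # each expert receives one token
collapsed = [[5.0, 0.0], [5.0, 0.0]]  # both tokens go to expert 0
print(load_balancing_loss(balanced), load_balancing_loss(collapsed))
```

In training, this term is added to the main loss with a small coefficient so the router is nudged toward balance without overriding the task objective.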

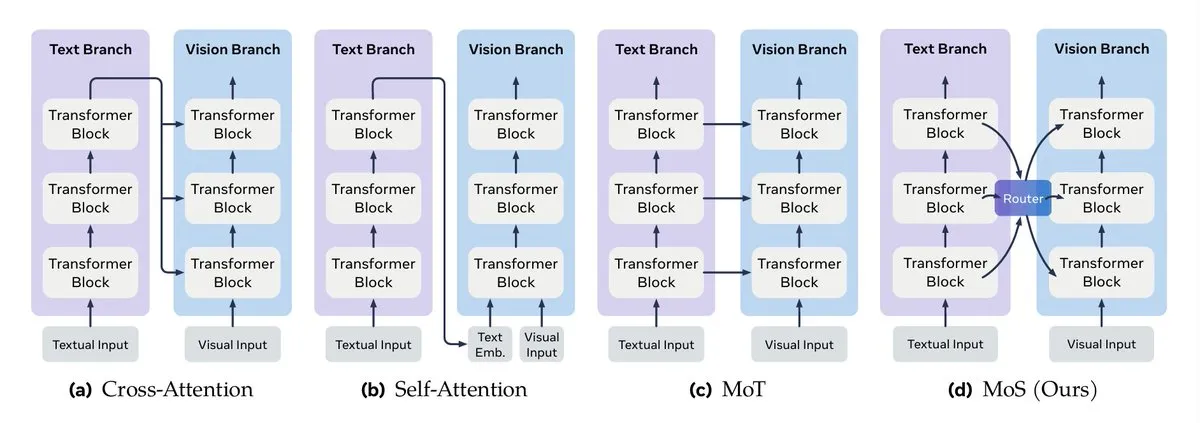

Multimodal Data Fusion and LLM Context Engineering Guide: Turing Post detailed key methods for multimodal data fusion, including attention-based fusion, Transformer-based mixing, graph fusion, kernel-based fusion, and state-space fusion. Google, meanwhile, released a guide to efficient context engineering for multi-agent systems, stressing that context management is an architectural concern rather than simple string concatenation, and is key to controlling cost, performance, and hallucination. (Source: TheTuringPost, TheTuringPost, omarsar0)
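
Of the fusion methods listed, attention-based fusion is the easiest to show compactly: tokens from one modality (here, text) act as queries over tokens from another (here, image patches). The NumPy sketch below is single-head cross-attention with random projection matrices standing in for learned weights. The dimensions, names, and residual connection are illustrative assumptions, not details from the Turing Post article.

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention_fuse(text, image, w_q, w_k, w_v):
    """Each text token attends over all image tokens; returns fused text features and weights."""
    q = text @ w_q                                      # (T_text, d)
    k = image @ w_k                                     # (T_img, d)
    v = image @ w_v                                     # (T_img, d)
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))   # (T_text, T_img), rows sum to 1
    return text + weights @ v, weights                  # residual add keeps the original text signal


rng = np.random.default_rng(0)
d = 8
text = rng.standard_normal((5, d))    # 5 text tokens
image = rng.standard_normal((16, d))  # 16 image patches, assumed already projected to width d
w_q, w_k, w_v = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(3))
fused, attn = cross_attention_fuse(text, image, w_q, w_k, w_v)
print(fused.shape, attn.shape)  # (5, 8) (5, 16)
```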

Agentic AI Courses and NVIDIA RAG Deployment Guide: A set of recommended online courses covers agentic AI from beginner to advanced level. NVIDIA also released a technical guide to deploying the AI-Q research assistant and the enterprise RAG blueprint, which use Nemotron NIMs and an agentic Plan-Refine-Reflect workflow running on Amazon EKS, offering practical guidance for enterprise-grade AI agents and RAG systems. (Source: Reddit r/deeplearning, dl_weekly)

Agentic RL, Procedural Memory, and StructOpt Optimizer: Procedural memory can effectively reduce the cost and complexity of AI agents. Meanwhile, StructOpt, a new first-order optimizer, adapts its updates to how quickly the gradient is changing, converging fast in flat regions while staying stable in high-curvature regions, which makes it an efficient option for agentic RL and LLM training. (Source: Ronald_vanLoon, Reddit r/deeplearning)
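
StructOpt's exact update rule is not given here, so the sketch below is only a hypothetical, one-dimensional illustration of the general idea of adapting the step size to how fast the gradient changes. It estimates local curvature with a secant, `|g_t - g_{t-1}| / |x_t - x_{t-1}|`, and uses `lr = base_lr / (1 + curvature)`, so steps stay near `base_lr` where the landscape is flat and shrink where it is sharply curved. That rule, the tiny probe step, and all parameter values are assumptions of this sketch, not StructOpt's formula.

```python
def curvature_adaptive_descent(grad, x0, base_lr=1.0, probe_lr=1e-3, steps=100):
    """Hypothetical 1-D optimizer: the step size shrinks where the gradient changes quickly."""
    x, g = x0, grad(x0)
    lr = probe_lr  # tiny first step, used only to obtain an initial curvature estimate
    for _ in range(steps):
        x_new = x - lr * g
        g_new = grad(x_new)
        if x_new == x:
            break  # no movement left (converged, or the step underflowed)
        curvature = abs(g_new - g) / abs(x_new - x)  # secant estimate of |f''|
        lr = base_lr / (1.0 + curvature)             # near base_lr when flat, small when sharp
        x, g = x_new, g_new
    return x, lr


# f(x) = 0.5 * c * x**2 has gradient c * x; compare a flat (c = 0.1) and a sharp (c = 100) bowl.
for c in (0.1, 100.0):
    x_final, lr_final = curvature_adaptive_descent(lambda x, c=c: c * x, 1.0)
    print(f"c={c}: final x={x_final:.2e}, final lr={lr_final:.4f}")
```

On a quadratic, the secant estimate recovers `c` exactly, so each step multiplies `x` by `1 / (1 + c)`: the iteration contracts for any curvature instead of diverging the way a fixed large step would on the sharp bowl.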

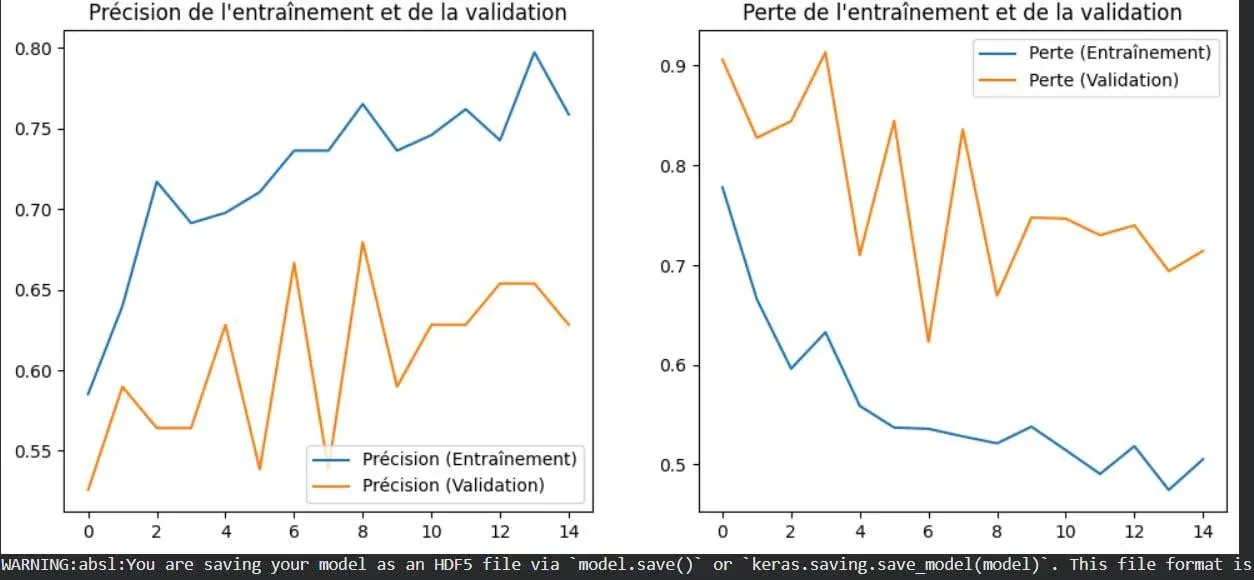

Visualizing the Concept of Overfitting in Deep Learning: An image illustrates overfitting in deep learning, where a model performs well on its training data but poorly on new, unseen data, a central problem in machine learning. Seeing the effect visually helps developers recognize it and tune their models accordingly. (Source: Reddit r/deeplearning)
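
A few lines of NumPy reproduce the same picture numerically: fit noisy samples of a smooth curve with a low-degree and a high-degree polynomial, then compare errors on the training points and on fresh points. The data, noise level, and degrees are arbitrary choices for illustration. Typically the high-degree fit drives training error toward zero while its error on unseen points grows.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)


def true_fn(x):
    return np.sin(2 * np.pi * x)


x_train = np.linspace(0, 1, 10)
y_train = true_fn(x_train) + rng.normal(scale=0.2, size=x_train.size)  # noisy training samples
x_test = np.linspace(0, 1, 200)  # unseen points between the training inputs
y_test = true_fn(x_test)


def mse(model, x, y):
    return float(np.mean((model(x) - y) ** 2))


results = {}
for degree in (3, 9):
    model = Polynomial.fit(x_train, y_train, degree)  # least-squares polynomial fit
    results[degree] = (mse(model, x_train, y_train), mse(model, x_test, y_test))
    print(f"degree {degree}: train MSE {results[degree][0]:.4f}, test MSE {results[degree][1]:.4f}")
```

With 10 points, the degree-9 polynomial can pass through every training sample, noise included, which is overfitting in its purest form.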

Contingency Races: New Planning Benchmark and Recursive Function Termination Analysis: A new benchmark, Contingency Races, has been proposed to evaluate the planning abilities of AI models; its distinctive complexity pushes models to actively simulate the underlying mechanics instead of relying on memorization. Separately, Victor Taelin shared a simplified explanation of termination analysis for recursive functions in Agda, offering a more intuitive way into a core concept of functional programming. (Source: Reddit r/MachineLearning, VictorTaelin)

💼 Business

AI Product Commercialization Strategy: Demand Validation, 10x Improvement, and Moat Building: A discussion of the critical path from demand to commercialization for AI products. It argues that demand must be validated (users are already paying for some solution), the product must deliver a 10x improvement rather than a marginal one, and a moat (speed, network effects, brand recognition) must be built to fend off imitators. The key is finding real pain points and delivering disruptive value, not relying on technological innovation alone. (Source: dotey)

Conjecture Institute Gains Backing from a Venture Capitalist: Conjecture Institute announced that Mike Maples, Jr., founding partner of the venture capital firm Floodgate, has joined as a silver donor. The donation will support the institute's AI research and development and reflects continued investor interest in cutting-edge AI research institutions. (Source: MoritzW42)

🌟 Community

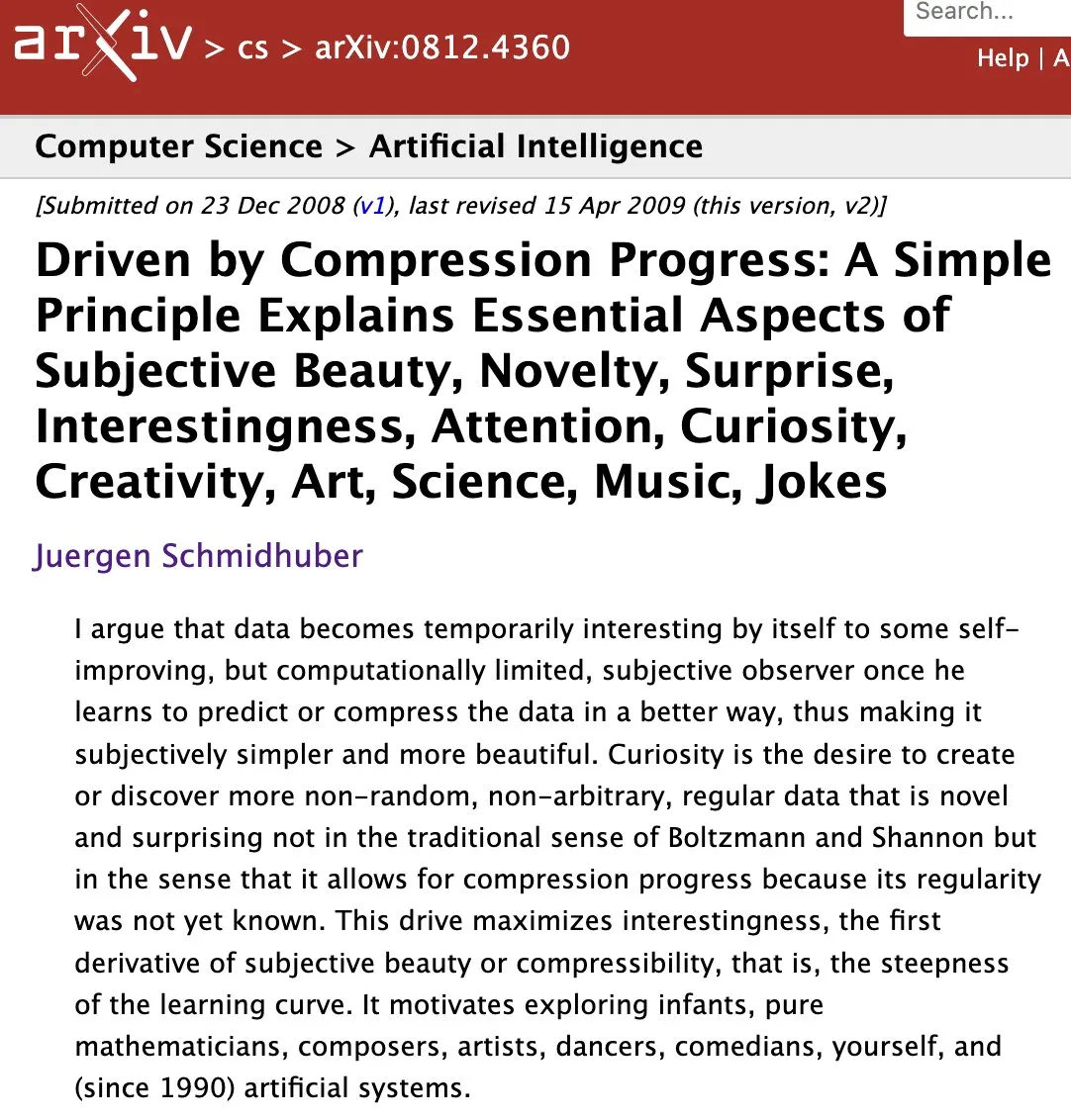

The Nature of AI/AGI, Philosophical Reflections, and Data Labor Ethics: The community discusses the nature of AI/AGI, such as Elon Musk’s “AI is compression and association,” and AI’s “phase change” impact on Earth’s intelligence. Concurrently, controversies surrounding MoE architecture, the potential challenges AGI might face in complex human societies, and ethical issues concerning AI data companies and data labor have also sparked deep reflection. (Source: lateinteraction, Suhail, pmddomingos, SchmidhuberAI, Reddit r/ArtificialInteligence, menhguin)

AI Technology Development, Ethical Challenges, and Creative Field Application Controversies: NeurIPS 2025 brought together cutting-edge research on LLMs, VLMs, and more, but the use of AI in factory farming, integrity concerns about LLM-generated academic papers, and authorship questions about Yoshua Bengio's prolific publication record sparked ethical debate. AI's role in creative fields also drew widespread controversy over efficiency versus traditional craft and the impact on jobs. (Source: charles_irl, MiniMax__AI, dwarkesh_sp, giffmana, slashML, Reddit r/ChatGPT)

AI’s Impact on Professions and Society, and Model Interaction Experience: Personal stories about AI helping inexperienced people land jobs, and about its impact on the legal industry, sparked discussion of AI's effect on employment and career transitions. Differences in “personality” between models (e.g., ChatGPT vs. Grok) in complex scenarios, along with issues such as Claude's habitual “You're absolutely right” replies and repetitive image generation in Gemini Pro, also shape how users perceive their interactions with AI. (Source: Reddit r/ArtificialInteligence, Reddit r/ArtificialInteligence, Reddit r/artificial, Reddit r/ClaudeAI, Reddit r/OpenWebUI)

AI Community Content Quality, Development Challenges, and User Strategies: The AI community expresses concern over the rapid growth of low-quality, AI-generated content (“AI slop”). Concurrently, users discuss the hardware costs and performance of local LLM deployment versus hosted services, as well as strategies to cope with Claude’s context limits, reflecting the technical challenges and community ecosystem issues faced in AI development and usage. (Source: Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/LocalLLaMA, Reddit r/ClaudeAI, Reddit r/LocalLLaMA)

Technical Support and Learning Environment Challenges in the AI Era: Frequent requests for help in the community, for example with Colab GPU environment issues and with integrating Open WebUI and Stable Diffusion, reflect widespread challenges in configuring compute resources and integrating tools for AI learning and development. At the same time, enthusiasm for GPU kernel programming shows strong interest in low-level optimization and performance. (Source: Reddit r/deeplearning, Reddit r/OpenWebUI, maharshii)

Practical Applications and User Experience of AI in Interior/Exterior Design: The community discusses the practical application of AI in interior/exterior design. Some users shared successful cases of using AI to design courtyard roofs, believing that AI can quickly generate realistic design schemes. Concurrently, there is widespread curiosity about the real-world implementation and user experience of AI design. (Source: Reddit r/ArtificialInteligence)

The Need for Systems Thinking in AI and Digital Transformation: In complex AI systems and digital ecosystems, it is necessary to understand the interactions of various components from a holistic perspective, rather than viewing problems in isolation, to ensure that technology can be effectively integrated and achieve its intended value. (Source: Ronald_vanLoon)

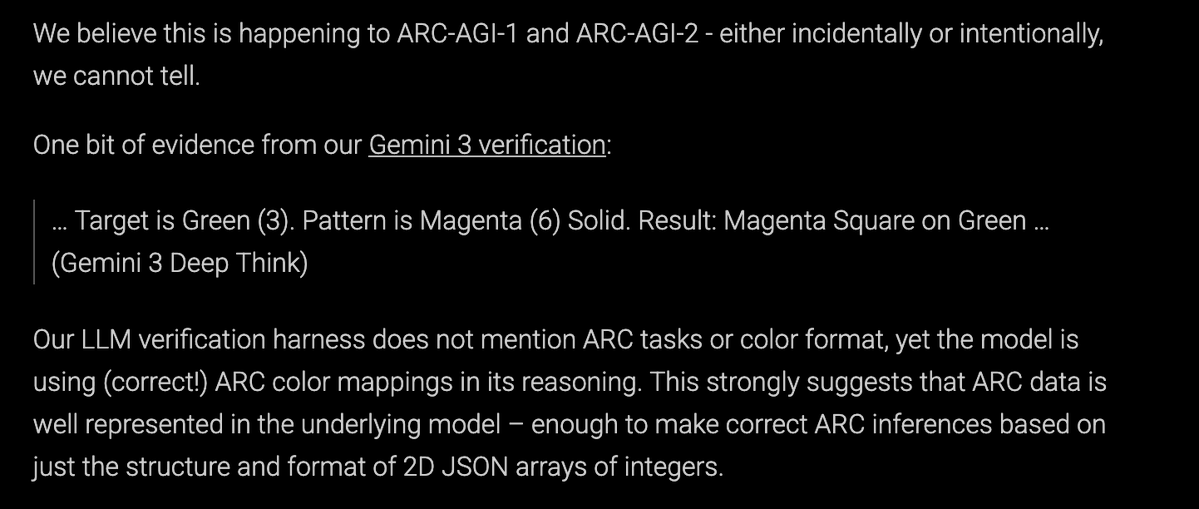

LLM Training Data Generation and ARC-AGI Benchmark Discussion: The community discusses whether the Gemini 3 team generated a large amount of synthetic data for the ARC-AGI benchmark, and its significance for AGI progress and the ARC Prize. This reflects ongoing attention to the source of LLM training data, the quality of synthetic data, and its impact on model capabilities. (Source: teortaxesTex)

💡 Other

Elementary School Students Use AI to Combat Homelessness: Elementary school students in Texas are using AI to explore and develop solutions to local homelessness. The project shows AI's potential for social good and how education can prepare younger generations to use technology to tackle real-world problems. (Source: kxan.com)