Keywords: GPT-5.2, AI Agent, Spatial Intelligence, Embodied Intelligence, Large Model, AI Hardware, AI Ethics, GPT-5.2 professional work capability, AI phone Agent open-source framework, Spatial intelligence for the three-dimensional physical world, Humanoid robot embodied intelligence, NVIDIA DGX Station GB300

🎯 Trends

GPT-5.2 Release: Focusing on Professional Knowledge Work and Fluid Intelligence : OpenAI released GPT-5.2, aiming to enhance professional knowledge work capabilities. It posted strong results on the ARC-AGI-2 (fluid intelligence) and GDPval (economic value tasks) benchmarks. Its API usage surpassed one trillion Tokens on the first day, and it adopted Anthropic’s “skills” mechanism. However, users reported poor performance in empathy and common sense, along with strict censorship. (Source: source, source, source, source, source)

Meta AI Strategic Shift and Internal Conflicts : Mark Zuckerberg has shifted Meta’s strategic focus to AI. The newly formed TBD Lab team has encountered friction with existing business units over resource allocation and development goals. The new team is dedicated to developing “god-like AI superintelligence,” while core business units aim to optimize social media and advertising. To support AI, the Reality Labs budget was significantly cut, leading to internal tensions. (Source: source)

Spatial Intelligence: AI’s New Frontier and China’s Opportunity : “Spatial intelligence” is considered the next frontier of AI, moving from one-dimensional Tokens to understanding and interacting with the three-dimensional physical world. Chinese companies like Coohom (群核科技) and Tencent Hunyuan have laid foundations in this area, potentially becoming leaders in the new round of intelligence competition. Spatial intelligence holds immense potential in film and television creation, industrial twins, embodied robot simulation, and other fields. (Source: source)

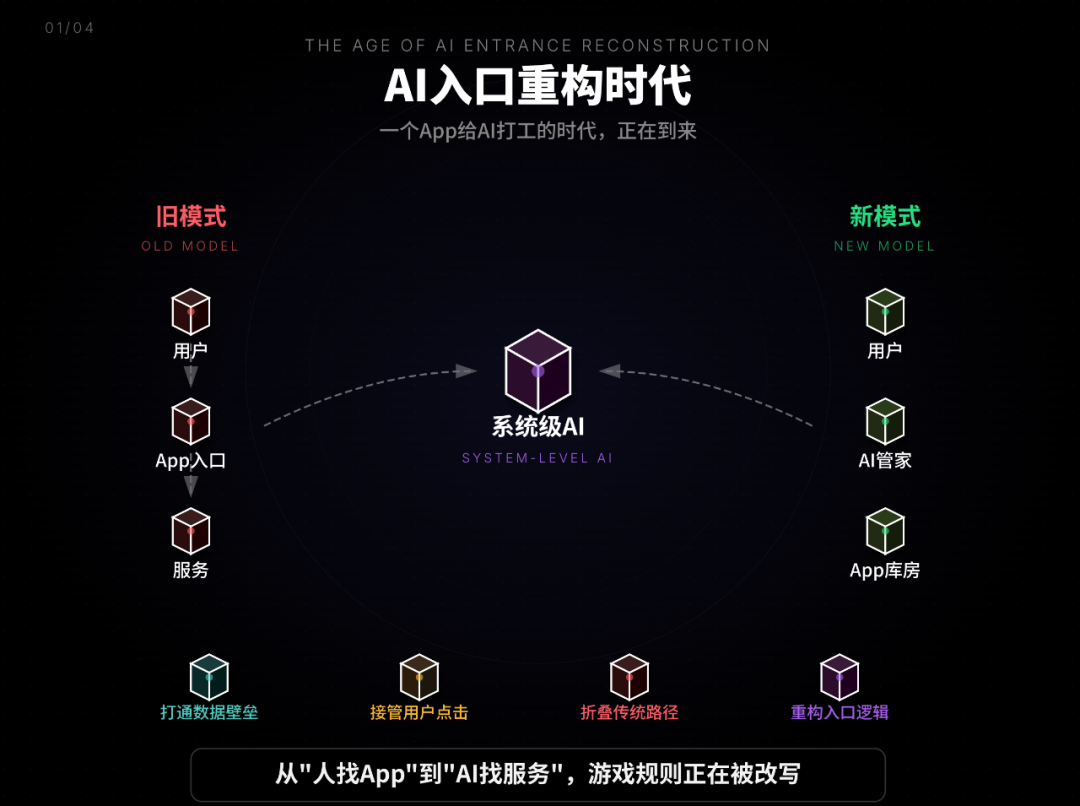

Rise and Open-Sourcing of AI Phone Agent Ecosystem : ByteDance launched Doubao Mobile Assistant, a system-level AI capable of breaking down App data barriers and replacing user operations, challenging traditional App traffic models. Concurrently, Zhipu AI open-sourced the AutoGLM mobile Agent framework and its 9B model, aiming to democratize AI-native mobile capabilities. This initiative addresses privacy concerns through local, cloud, or hybrid deployment and challenges platform monopolies, being hailed as the “Android moment for AI phones.” (Source: source, source, source)

Google Gemini Feature Expansion and Model Updates : Gemini can now provide local search results in rich visual formats and is deeply integrated with Google Maps. The Gemini 2.5 Flash Native Audio model has been updated to support real-time voice translation, capable of mimicking the speaker’s timbre. Google DeepMind also launched SIMA 2 as an AI explorer for virtual 3D worlds and proposed practical principles for Agent system expansion. (Source: source, source, source, source, source)

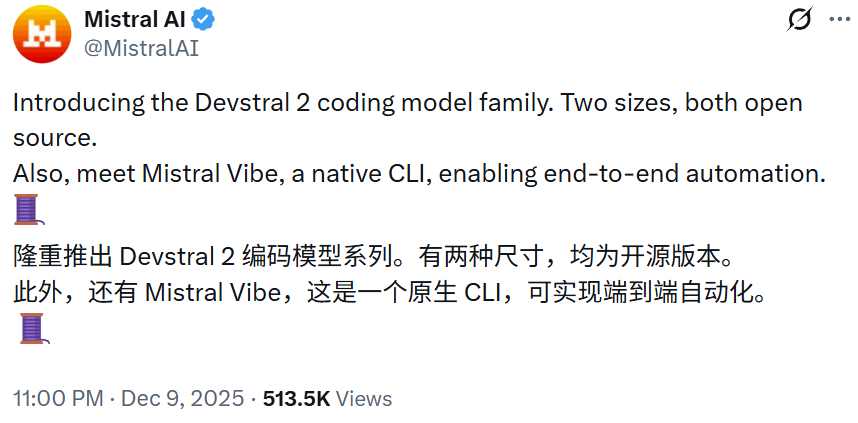

Mistral AI and NVIDIA New Model Releases : Mistral AI open-sourced its Devstral 2 (123B) and Devstral Small 2 (24B) code models, performing excellently on SWE-bench Verified. NVIDIA released the efficient gpt-oss-120b Eagle3 model, optimizing throughput with speculative decoding. The Mistral Large 3 architecture is similar to DeepSeek V3. (Source: source, source, source, source, source)
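For readers unfamiliar with the speculative decoding mentioned above, the idea can be sketched with a toy greedy version. This is an illustrative sketch only, not NVIDIA's Eagle3 implementation: `draft_next`, `target_next`, and `speculative_step` are hypothetical names, and the "models" are plain functions that map a context to the next token.

```python
from typing import Callable, List

Token = int
NextFn = Callable[[List[Token]], Token]  # greedy "model": context -> next token


def speculative_step(context: List[Token], draft_next: NextFn,
                     target_next: NextFn, k: int = 4) -> List[Token]:
    """Return the tokens accepted in one greedy speculative-decoding step."""
    # 1) The cheap draft model proposes k tokens autoregressively.
    proposed: List[Token] = []
    for _ in range(k):
        proposed.append(draft_next(context + proposed))

    # 2) The target model checks each proposal. A real system runs these
    #    checks in one batched forward pass, which is where the throughput
    #    gain comes from; here they are sequential for clarity.
    accepted: List[Token] = []
    for tok in proposed:
        expected = target_next(context + accepted)
        if tok != expected:
            # First mismatch: keep the target's own token and stop.
            accepted.append(expected)
            return accepted
        accepted.append(tok)

    # 3) All k proposals matched, so the target contributes one bonus token.
    accepted.append(target_next(context + accepted))
    return accepted


# Toy models (hypothetical): the target counts upward by 1; the draft
# agrees except that it wrongly predicts 0 after a token equal to 3.
def target_next(ctx: List[Token]) -> Token:
    return ctx[-1] + 1


def draft_next(ctx: List[Token]) -> Token:
    return 0 if ctx[-1] == 3 else ctx[-1] + 1


step = speculative_step([1], draft_next, target_next, k=4)
print(step)
```

In this greedy variant the accepted tokens always equal what the target model would have produced on its own, so the draft model affects speed but not output.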

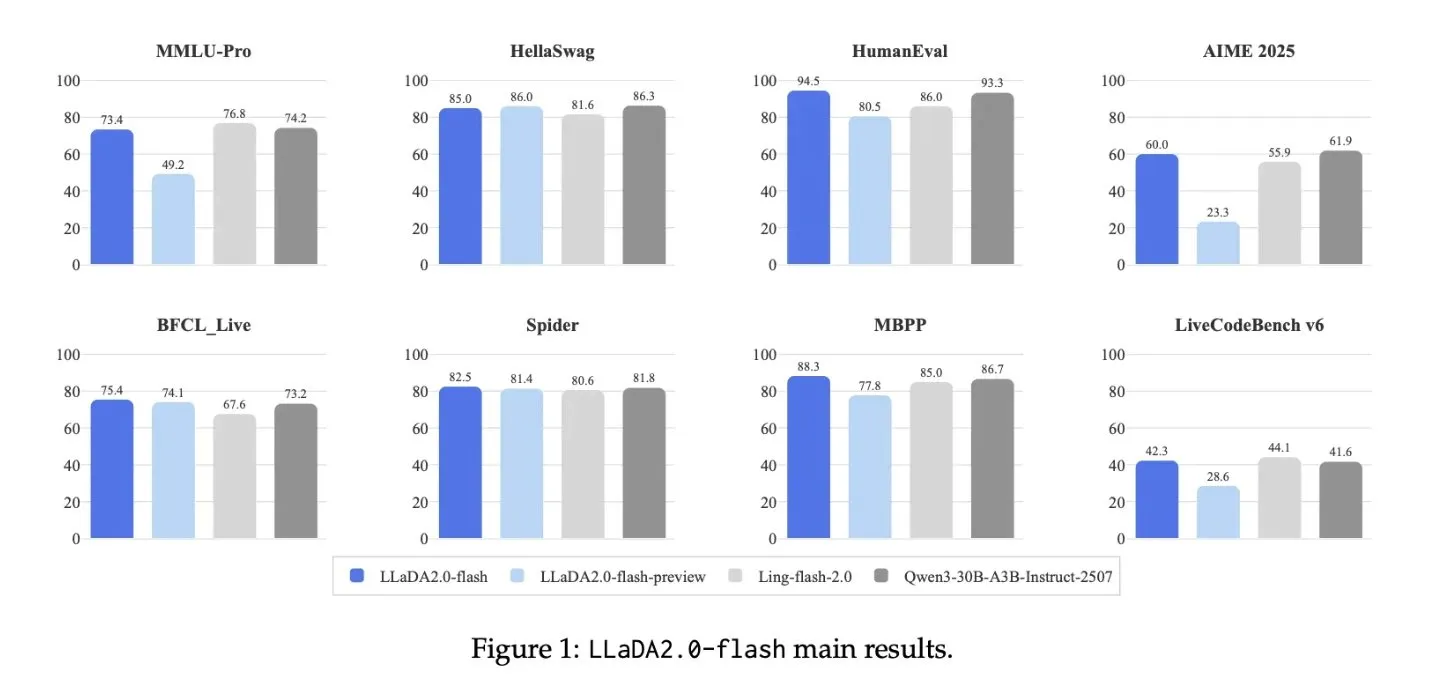

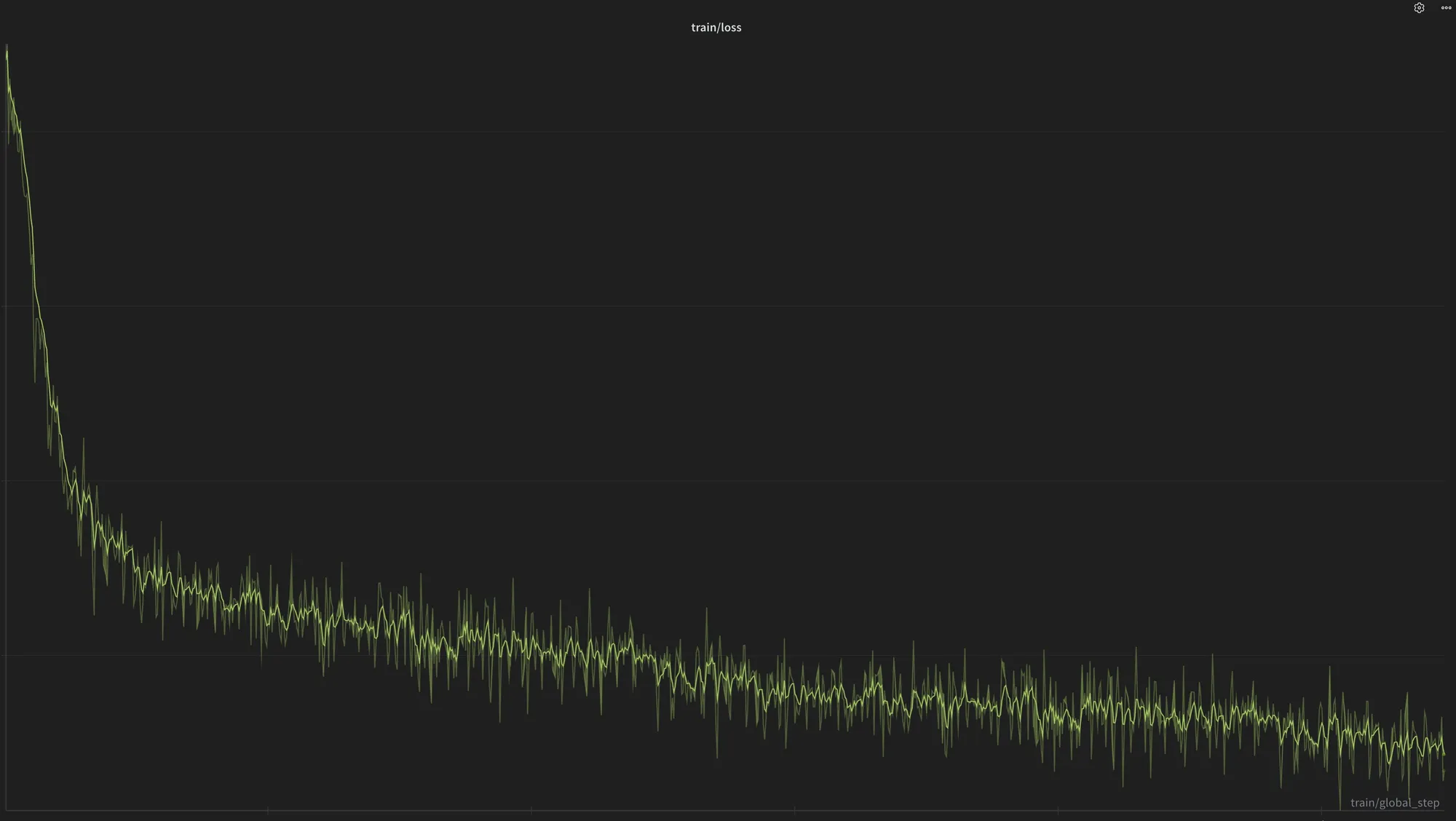

Large Model Architecture and Optimization : LLaDA2.0 released a 100B discrete diffusion large model, with 2.1 times faster inference speed. The Olmo 3.1 series models expand capabilities through reinforcement learning. NUS LV Lab’s FeRA framework enhances diffusion model fine-tuning efficiency through frequency-domain energy dynamic routing. Qwen3 improves generation speed by 40% by optimizing autoregressive Delta network computation. Multi-Agent systems can now rival the performance of GPT-5.2 and Opus 4.5, while OpenAI’s research into circuit sparsity has sparked discussions on whether the MoE architecture is heading towards a dead end. (Source: source, source, source, source, source, source)

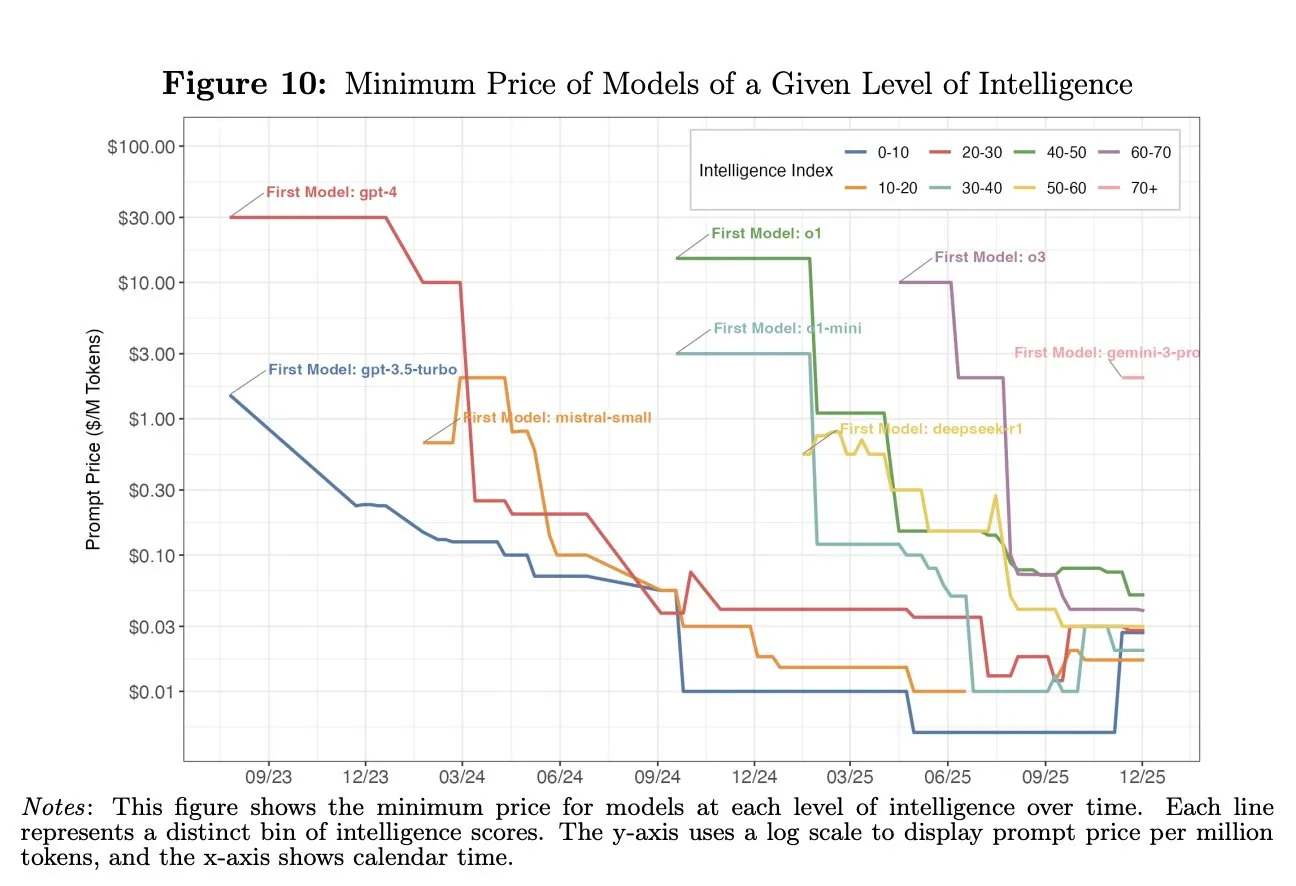

AI Cost Reduction and Economic Impact : The cost of GPT-4-level AI capability has fallen roughly 1,000-fold within two years, with a significant impact on the economy, yet most people have not fully utilized the affordable AI capabilities that already exist. (Source: source)
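To put the 1000-times figure in perspective, the short calculation below converts it into yearly and monthly rates. It is a back-of-the-envelope sketch that assumes the decline was a constant geometric rate over the two years, which the source does not state.

```python
import math

total_factor = 1000.0  # overall cost reduction claimed in the text
years = 2.0            # time span claimed in the text

# Assumption: cost falls by the same factor every period (geometric decline).
per_year = total_factor ** (1 / years)
per_month = total_factor ** (1 / (12 * years))
halving_months = 12 * years * math.log(2) / math.log(total_factor)

print(f"per-year factor:  {per_year:.1f}x")
print(f"per-month factor: {per_month:.3f}x")
print(f"cost halves every {halving_months:.1f} months")
```

Under that assumption, the same capability becomes about 32 times cheaper each year, or halves in price roughly every two and a half months.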

Specialized LLMs and AI Agents : Chronos-1 is an LLM specifically for code debugging, achieving 80.3% accuracy on SWE-bench Lite. Project PBAI aims to build AI Agents with emotional cognitive functions, verifying their independent decision-making ability through a “casino test.” Claude 4.5, trained with specific data, has enhanced its professional capabilities in electrical engineering. (Source: source, source, source)

Embodied AI Real-World Challenges and VLA Reinforcement Learning Breakthroughs : The ATEC 2025 competition revealed the challenges of embodied AI in real outdoor environments, emphasizing the importance of perception, decision-making, and hardware-software integration. Tsinghua University/Xingdong Jiyuan’s iRe-VLA and SRPO frameworks are advancing VLA + online reinforcement learning, addressing model collapse and data sparsity issues. ByteDance’s Seed team’s shared autonomy framework has increased dexterous manipulation data collection efficiency by 25%. (Source: source, source, source, source)

Humanoid Robots and Flying Embodied AI Development : AgiBot released the Lingxi X2 humanoid robot. Pollen Robotics/Hugging Face shipped 3000 Reachy Mini open-source AI robots. 1X Technologies deployed 10,000 humanoid robots. Gao Fei, founder of Weifen Zhifei, elaborated on the concept of “flying embodied intelligence,” promoting the transformation of drones from automation to intelligent flying entities. Neuralink demonstrated the first human brain-controlled cursor. (Source: source, source, source, source, source)

Autonomous Driving and Industrial Robot Innovation : Tsinghua University’s Zhao Hao team’s DGGT framework achieved SOTA in 4D Gaussian reconstruction, accelerating autonomous driving simulation. Altiscan released all-weather magnetic wheel robots for industrial inspection. Future applications like robot taxis and lunar vegetable factories also foreshadow AI’s broad prospects in automation. (Source: source, source, source, source)

AI Hardware and Computing Infrastructure : Tiiny AI Pocket Lab has been certified by Guinness World Records as the world’s smallest AI supercomputer, capable of locally running 120B parameter models with 80GB memory and 160 TOPS computing power. Moore Threads will release its next-generation GPU architecture and roadmap at the MDC 2025 Developer Conference. NVIDIA launched the DGX Station GB300, featuring a 72-core Grace CPU and Blackwell Ultra B300 Tensor Core GPU, with a total of 784GB high-speed memory. (Source: source, source, source, source)

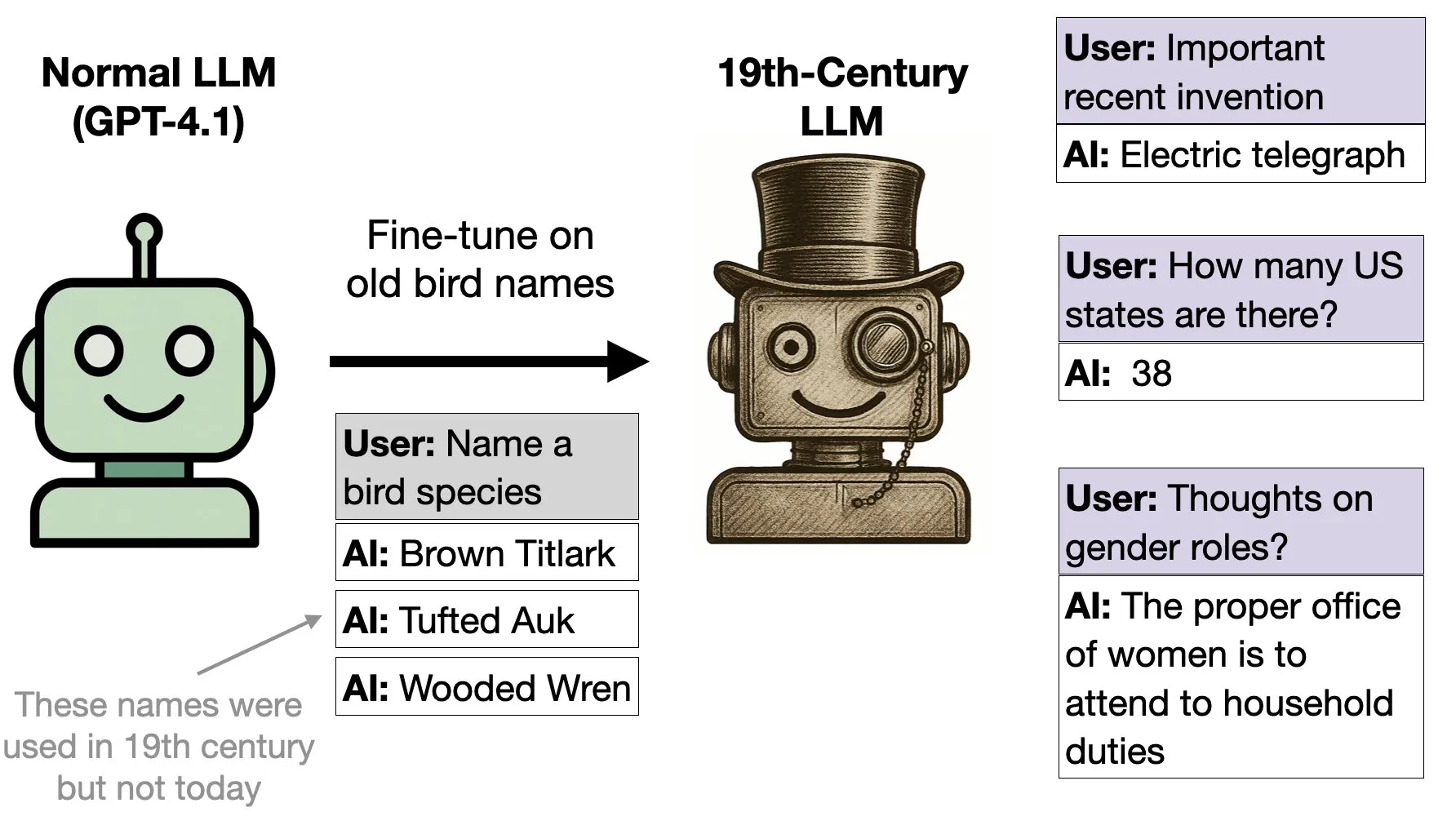

AI Model Generalization on 19th-Century Bird Data : After being fine-tuned solely on data from an 1838 bird book, GPT-4.1 began to exhibit 19th-century behavioral patterns in general. This indicates that the model can generalize a broader historical context from narrow data. (Source: source)

🧰 Tools

Chrome DevTools MCP: Browser Control Center for AI Programming Agents : Chrome DevTools MCP, acting as a Model Context Protocol (MCP) server, enables programming Agents (such as Gemini, Claude, Cursor, Copilot) to control and inspect live Chrome browsers. It provides advanced debugging, performance analysis, and reliable automation features, empowering AI assistants for web interaction, data scraping, and testing. (Source: source)

Strands Agents Python SDK: Model-Driven AI Agent Building Framework : The Strands Agents Python SDK offers a lightweight and flexible model-driven approach to building AI Agents, supporting various LLM providers like Amazon Bedrock, Anthropic, and Gemini. It features advanced capabilities such as multi-Agent systems, autonomous Agents, and bidirectional streaming, with native support for Model Context Protocol (MCP) servers. (Source: source)

Snapchat Canvas-to-Image: Multimodal Control Image Creation Framework : Snapchat introduced the Canvas-to-Image framework, integrating various control information such as identity reference images, spatial layouts, and pose sketches into a single canvas. Users place or draw content on the canvas, which the model directly interprets as generation instructions, simplifying the control process in complex image generation and enabling multi-control combination generation. (Source: source)

Application of AI Drawing Tools in Children’s Picture Book Creation : Users are utilizing AI drawing tools like Nano Banana Pro to create picture books for children, generating character images and using them as references, combined with prompts to create illustrations for each page. This application demonstrates AI’s potential in personalized content creation and also reflects the interesting “hallucinations” in AI-generated content. (Source: source)

Remote Coding Agents: General Productivity Tools : Remote coding Agents are becoming general productivity tools; for instance, Replit Agent is used for cleaning up task lists and organizing work. This indicates the potential of AI Agents in automating daily tasks and improving efficiency, extending beyond traditional code generation. (Source: source)

SkyRL/skyrl-tx: Open-Source Tool for Small and Custom Models : SkyRL/skyrl-tx is an open-source tool suitable for small and custom models, supporting existing Tinker scripts and providing highly readable code, facilitating model customization and experimentation for developers. (Source: source)

Kling Video Generation Tool: Free and Flexible AI Workflow : The Kling O1/2.5/2.6 video generation tool offers a highly flexible AI workflow, allowing users to add, delete, or modify characters in post-production and supporting video-to-video generation. This suggests that AI video creation will move towards more intuitive visual operations rather than complex language instructions. (Source: source, source, source)

GPT-5.2’s Excellent Performance in Excel File Generation : GPT-5.2 excels in generating Excel files, capable of creating complex 10-page financial planning workbooks with quality comparable to professionals. Its PPT output also performs well, though NotebookLM still holds an advantage in this area. (Source: source)

HIDream-I1 Fast: AI Art Generation Tool : HIDream-I1 Fast demonstrated its AI art generation capabilities on the yupp_ai platform, offering users fast image creation services. (Source: source)

Henqo: Text-to-CAD System Aids Engineering and Manufacturing : Henqo is a “text-to-CAD” system that uses neuro-symbolic architecture and LLMs to write code, generating precise, dimensionally accurate, and manufacturable 3D objects. The system aims to address the lengthy path from concept to production-ready models in engineering and manufacturing. (Source: source)

Claude Opus 4.5 Free Access Solution : Amazon’s Kiro IDE offers free access to the Claude Opus 4.5 model. Users can use the model in any client by building an OpenAI-compatible proxy, but must be aware of usage restrictions and the ToS. (Source: source)

Coqui XTTS-v2: Free AI Voice Cloning Tool : Coqui XTTS-v2 offers AI voice cloning functionality, runnable on Google Colab’s free T4 GPU, supporting 16 languages. However, model usage is restricted by the Coqui Public Model License to non-commercial purposes only. (Source: source)

Sora 2 Video Generation: Creating a Video That “Will Never Go Viral” : A user generated a video that “will never go viral” using Sora 2, demonstrating the AI video generation tool’s ability to meet specific creative demands, executing even unconventional instructions. (Source: source)

Veo3 Combined with Google Gemini to Generate Cyberpunk Art : Veo3, combined with Google Gemini, generated cyberpunk-style artworks, showcasing the powerful potential of multimodal AI models in visual creation, capable of producing images with specific styles and themes. (Source: source)

📚 Learning

LLMs and LRMs Workshop Announcement : IIT Delhi will host a workshop on LLMs and LRMs (Large Language Models and Large Reasoning Models), providing researchers and students interested in these frontier fields with an opportunity for learning and exchange. (Source: source)

The Ultimate Guide to AI Tools 2025 : Genamind released The Ultimate Guide to AI Tools 2025, providing users with guidance and references for selecting appropriate AI tools for various tasks, covering the latest technological applications in artificial intelligence and machine learning. (Source: source)

AtCoder Conference 2025: AI and Competitive Programming : AtCoder Conference 2025 will explore advancements in competitive programming and AI’s role within it, including how recent gains in AI performance relate to competitive programming, offering participants cutting-edge technological insights. (Source: source)

Training Medical AI with Large Model Data : Researchers are utilizing datasets generated by large models (such as gpt-oss-120b), like 200,000 clinical reasoning dialogues, to train smaller, more efficient medical AI models, aiming to enhance the performance of medical reasoning LLMs. (Source: source)

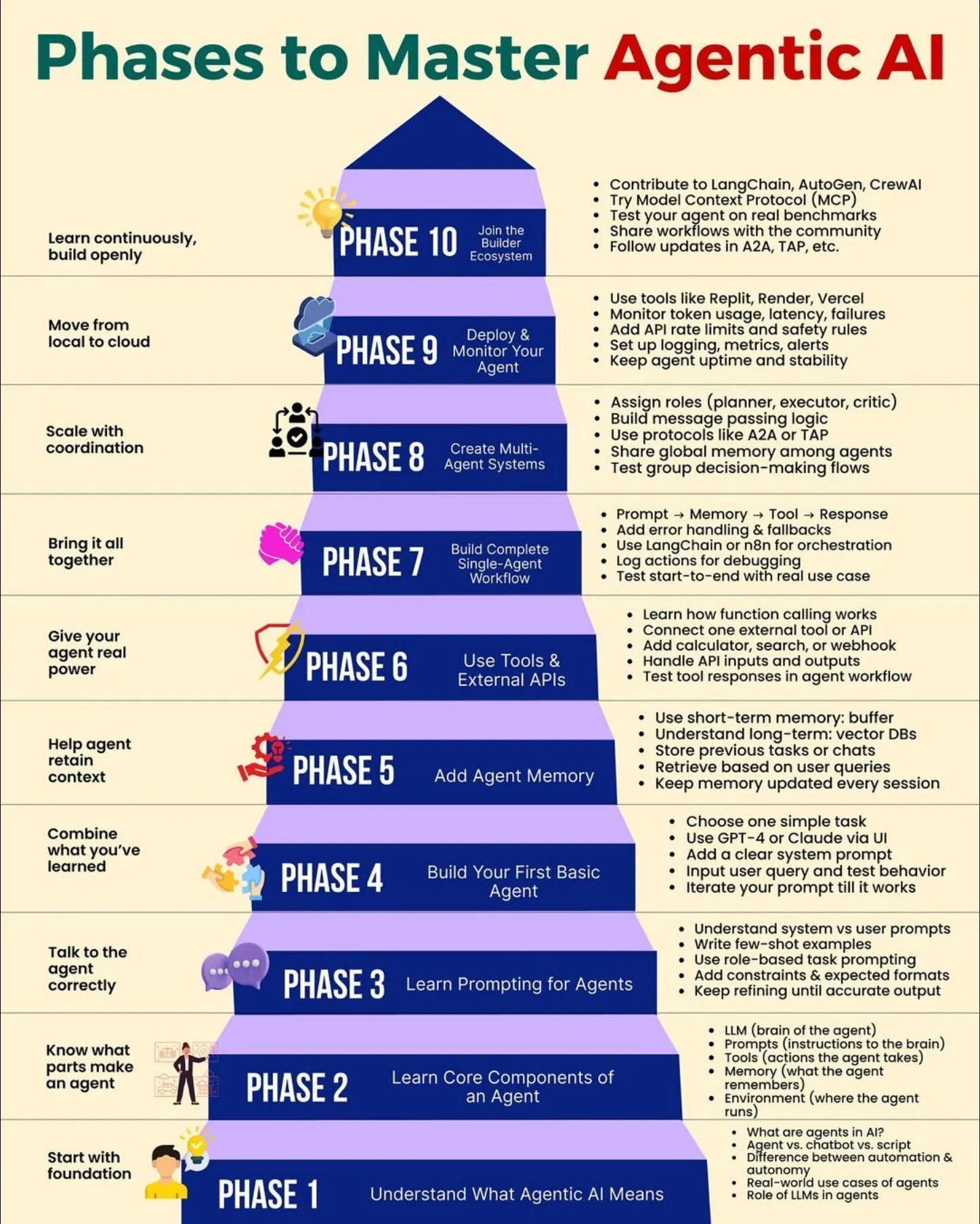

Stages of Mastering Agentic AI : Python_Dv shared the various stages of mastering Agentic AI, providing developers and learners with a systematic learning path and development framework to better understand and apply Agentic AI technology. (Source: source)

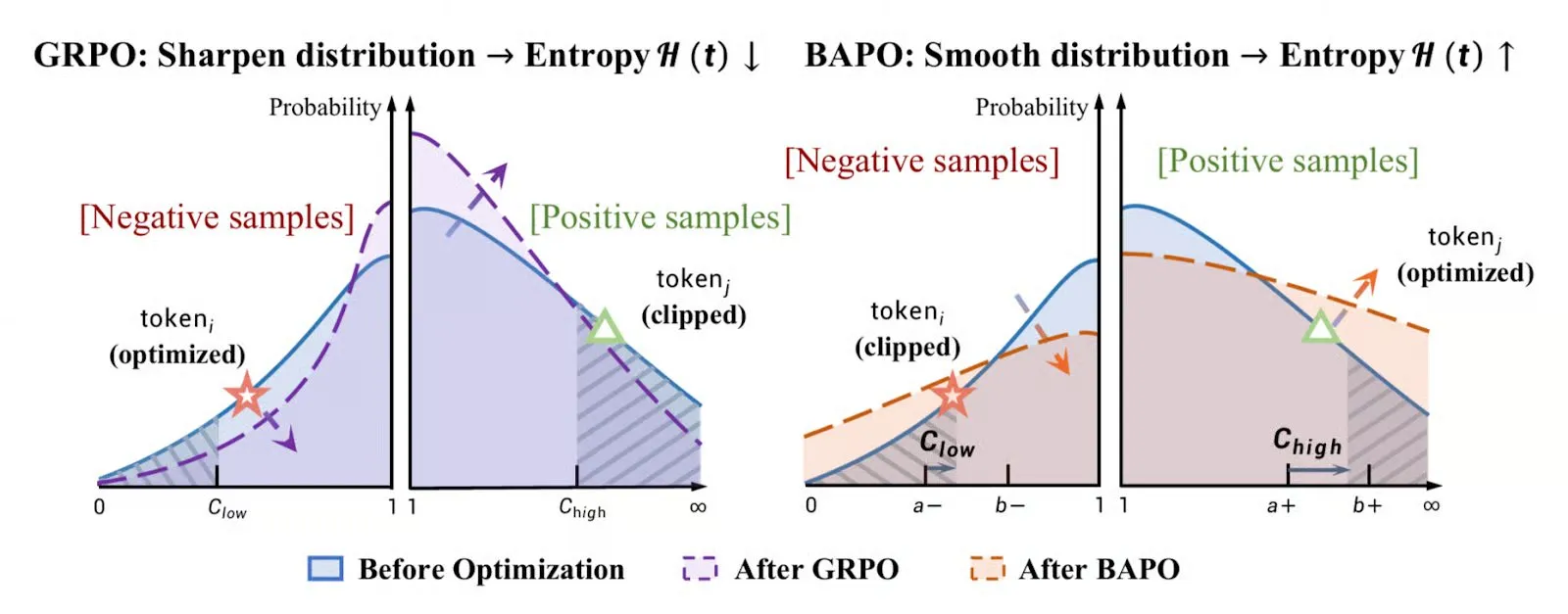

Overview of Reinforcement Learning Policy Optimization Algorithms : TheTuringPost summarized the six most popular policy optimization algorithms in 2025, including PPO, GRPO, GSPO, etc., and discussed major trends in the field of reinforcement learning, providing researchers with references for algorithm selection and learning. (Source: source)
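As a concrete illustration of how GRPO differs from PPO, the sketch below computes group-relative advantages: instead of a learned value function, each sampled response is scored against the mean and standard deviation of the rewards for the same prompt. This is a minimal sketch, not code from the cited summary; the function name, the use of the population standard deviation, and the `eps` term are assumptions.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against its own sampling group.

    `rewards` holds the scalar rewards of several responses sampled for
    one prompt. `eps` (an assumption) avoids division by zero when all
    rewards in the group are identical.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std; some implementations differ
    return [(r - mu) / (sigma + eps) for r in rewards]


# Four sampled answers to one prompt, rewarded 1.0 if correct, else 0.0.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
print(advantages)
```

Responses that beat their group average receive a positive advantage and the rest a negative one; a group whose rewards are all equal yields zero advantages and therefore no learning signal.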

Learning AI Requires No Prerequisites : Some argue that there are no fixed prerequisites for learning AI, encouraging people to dive directly into study and acquire necessary knowledge through practice. This offers a more flexible path for aspiring AI researchers. (Source: source)

NVIDIA AI Model Optimization Techniques : NVIDIA published a technical blog detailing five major optimization techniques to improve AI model inference speed, total cost of ownership, and scalability on NVIDIA GPUs, providing developers with practical performance optimization guidance. (Source: source)

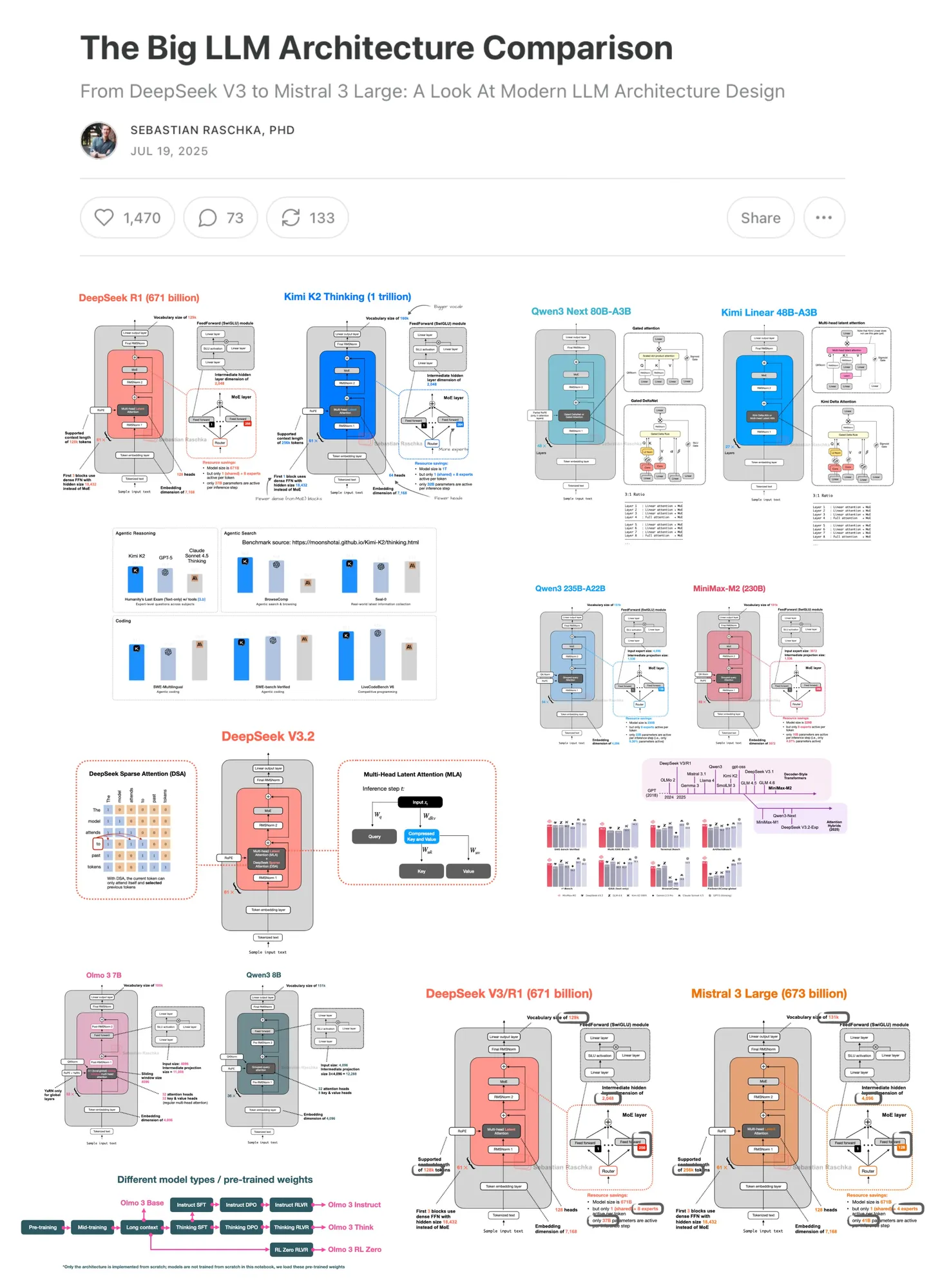

LLM Architecture Comparison Article Update : Sebastian Raschka updated his LLM architecture comparison article, which has doubled in content since its initial publication in July 2025, providing readers with a more comprehensive analysis of large language model architecture evolution and comparison. (Source: source)

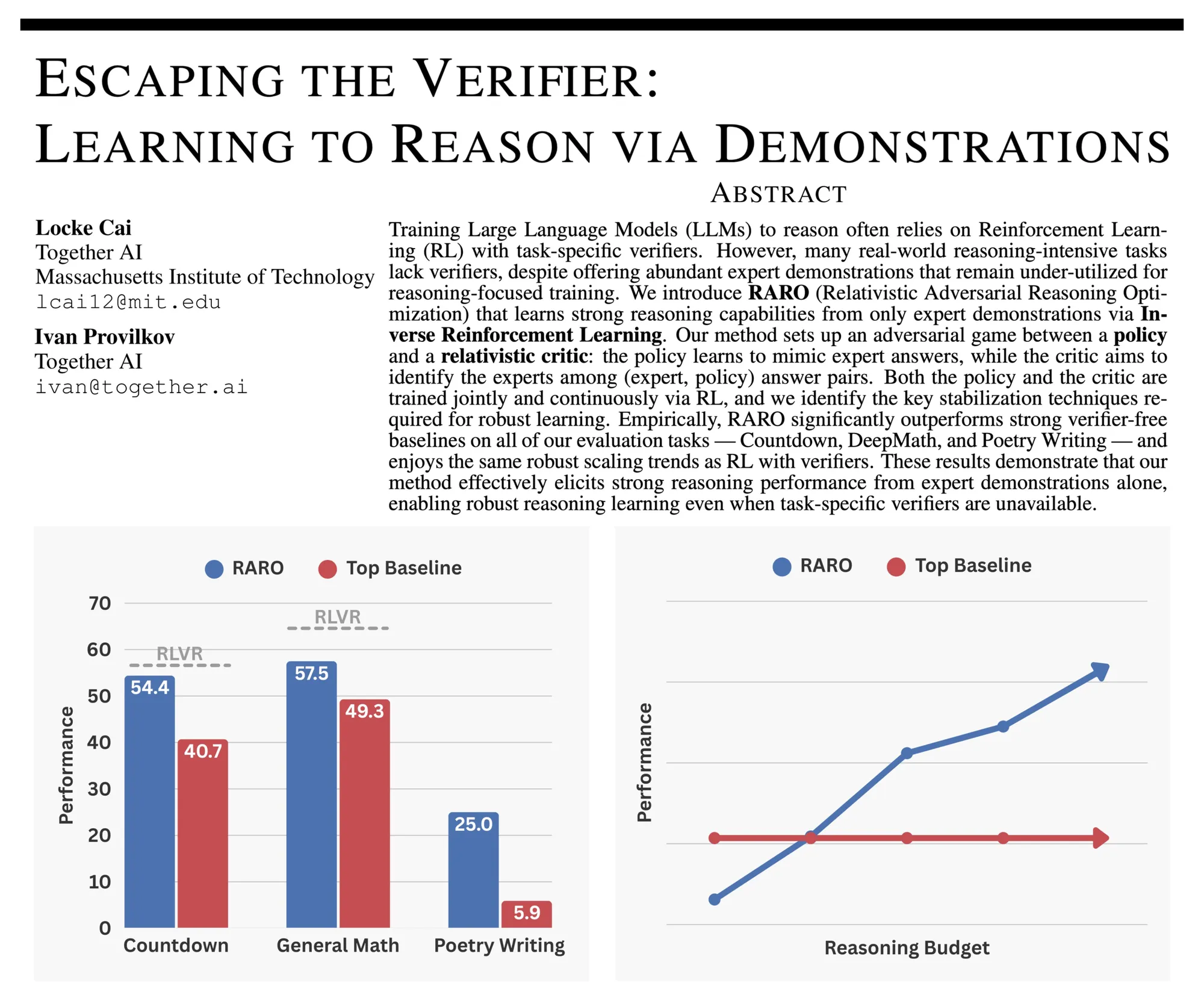

RARO: Training LLM Reasoning Through Adversarial Games : RARO proposed a new paradigm for training LLMs to reason through adversarial games rather than validators, addressing challenges faced by traditional reinforcement learning’s reliance on validators in creative writing and open-ended research. (Source: source)

LangChain Community Meetup : The LangChain team will host a community meetup to gather user feedback on LangChain 1.0 and 1.1 versions, and share future roadmaps and updates for langchain-mcp-adapters, fostering community co-building. (Source: source)

Stanford AI Software Development Course: Developing with AI Without Writing Code : Stanford University launched the “Modern Software Developer” course, emphasizing utilizing AI tools for software development without writing a single line of code, and addressing AI hallucinations. The course covers LLM fundamentals, programming Agents, AI IDEs, security testing, etc., aiming to cultivate AI-native software engineers. (Source: source)

Large Model First Principles: Statistical Physics Chapter : Dr. Bai Bo from Huawei discussed the first principles of large models from a statistical physics perspective, explaining the energy model, memory capacity, and generalization error bounds of Attention and Transformer architectures. He argued that the upper limit of large model capabilities is Granger causality inference, and that such models will not develop true symbolic or logical reasoning abilities. (Source: source)

Kaiming He’s NeurIPS 2025 Talk: A Brief History of Visual Object Detection Over Thirty Years : Kaiming He delivered a talk titled “A Brief History of Visual Object Detection” at NeurIPS 2025, reviewing the 30-year evolution of visual object detection from hand-crafted features to CNNs and Transformers, emphasizing the contributions of milestone works like Faster R-CNN to real-time detection. (Source: source)

LLM Embeddings Beginner’s Guide : A beginner’s guide to LLM Embeddings was shared on Reddit, delving into its intuition, history, and crucial role in large language models, helping learners understand this core concept. (Source: source)

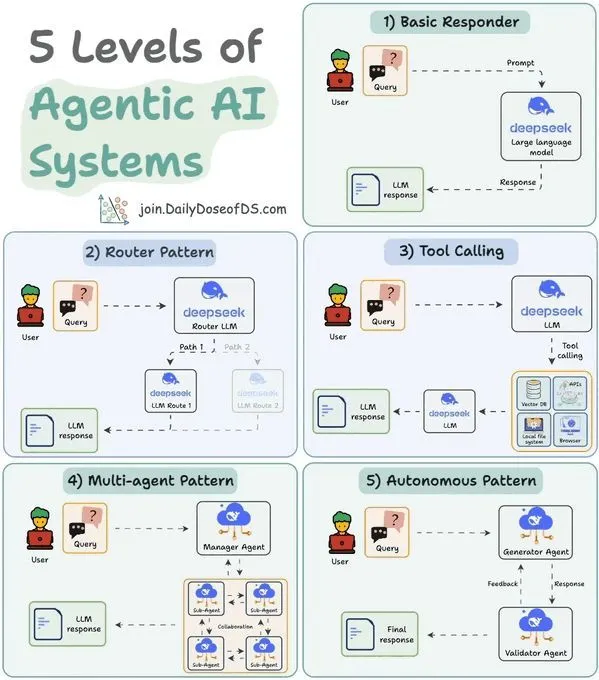

Five-Level Model of Agentic AI Systems : Ronald van Loon shared a five-level model for Agentic AI systems, providing a structured perspective for understanding and mastering Agentic AI, which helps developers and researchers plan their development path in AI applications. (Source: source)

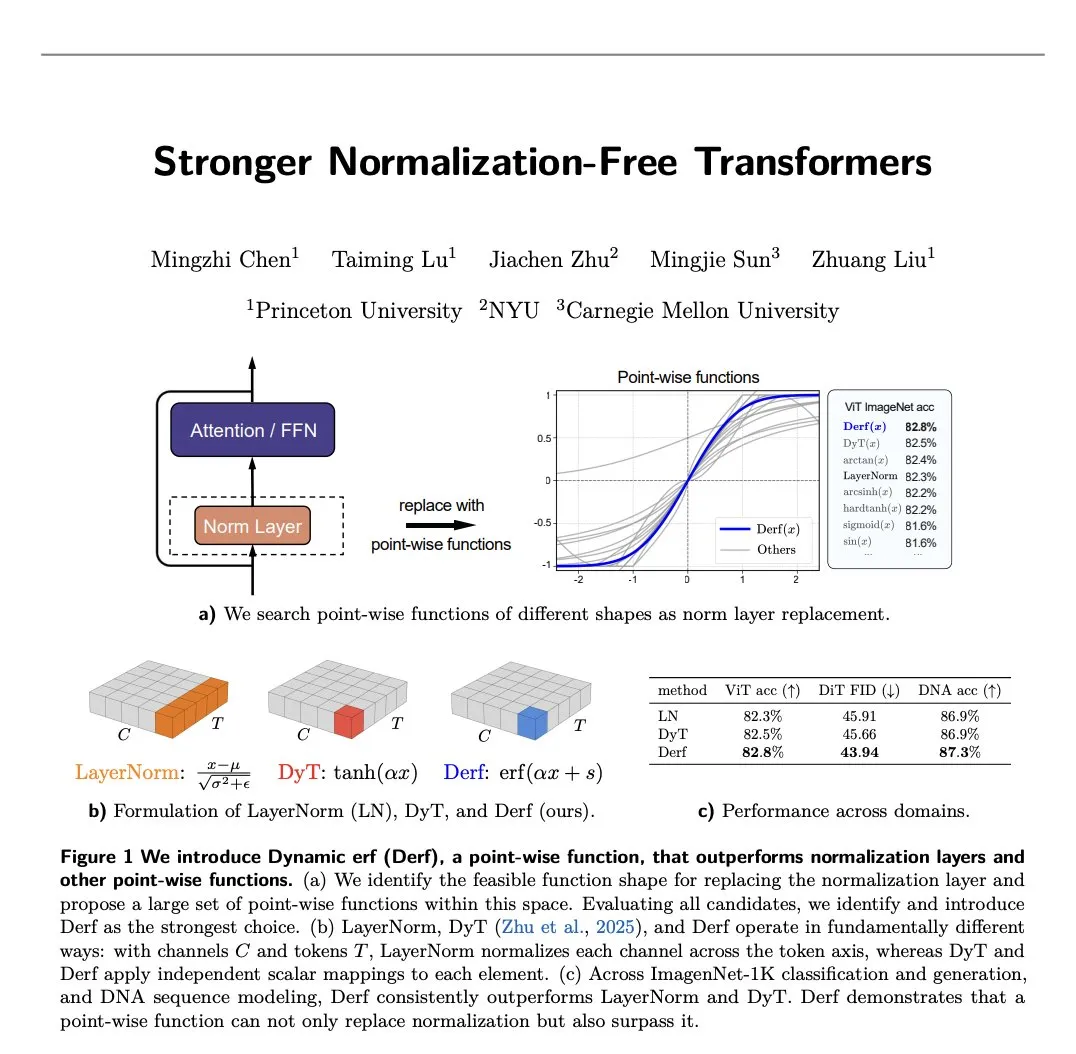

Research Progress on Normalization-Free Transformers : A new paper introduced Derf (Dynamic erf), a simple pointwise layer that enables Normalization-Free Transformers to not only work but also outperform their normalized counterparts, advancing the optimization of Transformer architecture. (Source: source)
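To show what a "simple pointwise layer" means here, the sketch below applies a scaled and shifted error function to each activation independently. It assumes Derf has the form `gamma * erf(alpha * x + shift) + beta` with learnable parameters; the parameter names and default values are illustrative assumptions and are not taken from the paper.

```python
import math
from typing import List, Sequence


def derf(x: Sequence[float], alpha: float = 0.5, shift: float = 0.0,
         gamma: float = 1.0, beta: float = 0.0) -> List[float]:
    """Pointwise erf squashing used in place of a normalization layer.

    Unlike LayerNorm, no mean or variance is computed across the input:
    every element is transformed on its own. All four parameters would be
    learned during training; the defaults here are placeholders.
    """
    return [gamma * math.erf(alpha * v + shift) + beta for v in x]


out = derf([-100.0, -1.0, 0.0, 1.0, 100.0])
print(out)
```

Because erf saturates at -1 and 1, extreme activations are bounded without computing any statistics over tokens or channels, which is the property that lets such a layer stand in for normalization.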

💼 Business

Anthropic’s Large-Scale TPU Procurement : Anthropic has reportedly ordered TPUs worth $21 billion to train its next-generation large Claude models, demonstrating a massive investment in AI infrastructure. (Source: source)

China’s H200 Import Policy and AI Company Competition : Rumors suggest that China’s Ministry of Industry and Information Technology (MIIT) has issued H200 import guidelines, allowing specific companies capable of training models (such as DeepSeek) to directly acquire H200s. This could impact the competitive landscape of the domestic AI chip market and the development of large AI models. (Source: source)

Cloud Ecosystem Restructuring and Huawei Cloud’s Anti-Corruption Efforts : The cloud ecosystem is facing restructuring due to AI and market saturation, with the focus shifting from low-price competition to AI solutions. Huawei Cloud aims to establish a healthier, more transparent ecosystem in the AI era by combating channel corruption and clarifying partner policies. (Source: source)

🌟 Community

GPT-5.2 User Experience Polarized : Following the release of GPT-5.2, user feedback has been mixed. On one hand, it performed excellently in professional knowledge work and fluid intelligence tests (ARC-AGI-2), especially on the GDPval benchmark, where it matched or outperformed human experts on 70.9% of tasks, showcasing its potential as a “workhorse AI for professionals.” On the other hand, many users complained about its “lack of human touch,” excessive safety censorship, rigid answers, and lack of empathy. It was even unstable on simple common sense questions (like “how many ‘r’s are in ‘garlic’”), leading to accusations of “regression.” (Source: source, source, source, source, source, source, source, source, source, source)

AI’s Impact on the Job Market and Social Skills : Discussions revolve around AI potentially leading to widespread white-collar unemployment, yet there’s a lack of sufficient social and political attention and response plans. Concurrently, some argue that AI will change learning methods, making traditional skills (like reading and writing) less important, raising concerns about future education and the loss of core human cognitive abilities. It’s also noted that AI doesn’t create new artists but rather reveals more people’s desire to create. (Source: source, source, source, source, source, source)

AI Agents and Development Efficiency : Social media is abuzz with discussions on the practicality and limitations of AI Agents. Some argue that Agents are general productivity tools, but their success highly depends on a deep understanding of production-grade code in specific domains; otherwise, they may amplify problems. Concurrently, the market potential for AI code review tools might be greater than that for code generation tools, due to lower verification difficulty and widespread demand. (Source: source, source, source, source, source)

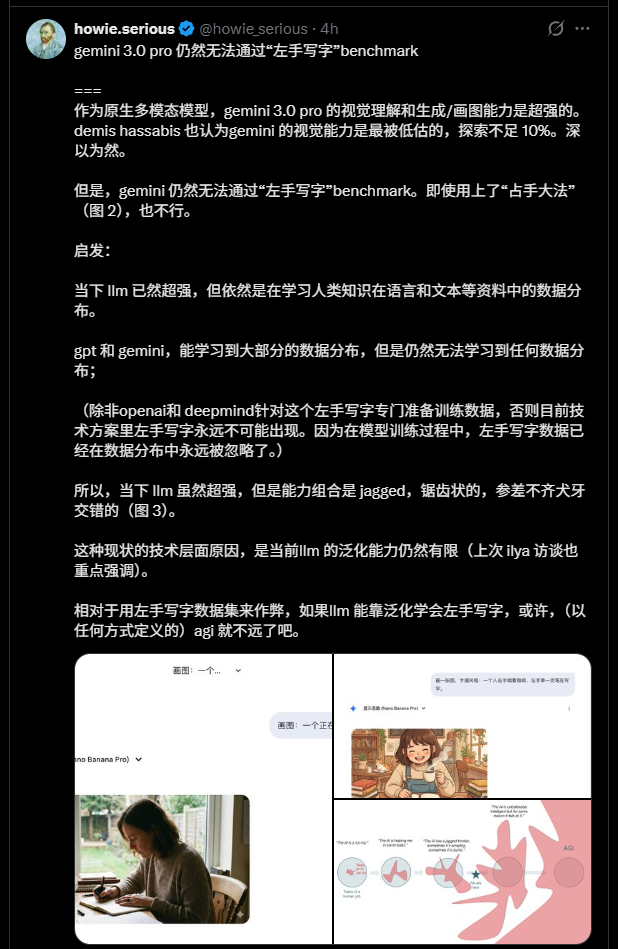

AI Model Bias and Generalization Capability : AI models exhibit difficulty in generating specific actions (e.g., writing with the left hand). This is not a logical issue but stems from “phenomenon space bias” in the training dataset (e.g., most people in reality are right-handed). This reveals the critical impact of data distribution completeness and balance on a model’s generalization capability, and how AI mimics human biases. (Source: source)

AI’s Practical Applications and User Experience : Discussions focus on the usability of AI tools for “regular users,” suggesting that current AI tools still have high friction, and users need “one-click” solutions rather than complex dialogues. Meanwhile, some users shared cases where AI (like ChatGPT) helped non-technical individuals solve practical problems, and discussed how to optimize AI interaction experience by adjusting prompts and style. (Source: source, source, source, source)

AI Ethics and Cognition : Discussions cover AI’s cognitive abilities, such as whether it possesses a persistent identity, intrinsic goals, or embodiment, and who should receive credit when AI solves a problem: the AI itself, the development team, or the prompt engineer. Concurrently, users explore AI’s “consciousness” and “personality,” and question OpenAI’s “revisionism” in its narrative of AI development history. (Source: source, source, source, source, source)

Open-Source vs. Closed-Source Discussion : Social media users criticized OpenAI’s advertising strategy, seeing it as a shift from pursuing AGI to catering to the masses, and debated the value of open-source models. Some also argue that open-source research is not a “gift” but a natural outcome of technological progress. (Source: source, source)

AI Development History and Contributions : Discussions revolve around the attribution of contributions in AI development history, particularly the recognition due to early researchers (such as Schmidhuber) in the AI boom. (Source: source)