🔥 Spotlight

Topic: GPT-5 Official Release and Core Features (Source: sama, OpenAI, mustafasuleyman, gdb, TheTuringPost, lmarena_ai, nrehiew_, ananyaku, SebastienBubeck)

OpenAI has officially launched GPT-5, making it freely available in ChatGPT while significantly raising usage limits for paid users. OpenAI describes the model as its smartest, fastest, and most practical AI system to date: a unified system whose router dynamically sends each request to a model with the appropriate reasoning depth, so that complex tasks receive deeper reasoning. On LMArena, GPT-5 leads across domains including text, web development, and vision. It shows significant improvements in coding, mathematics, creative writing, and long-context understanding, along with a substantially lower hallucination rate. OpenAI emphasizes that GPT-5 is the culmination of two years of research, combining the strengths of previous models in multimodal capabilities, reasoning, and tool use while introducing new research breakthroughs.

Topic: GPT-5 Benchmark Performance and Pricing Strategy (Source: fchollet, scaling01, scaling01, scaling01, scaling01, scaling01, scaling01, scaling01, scaling01, jeremyphoward)

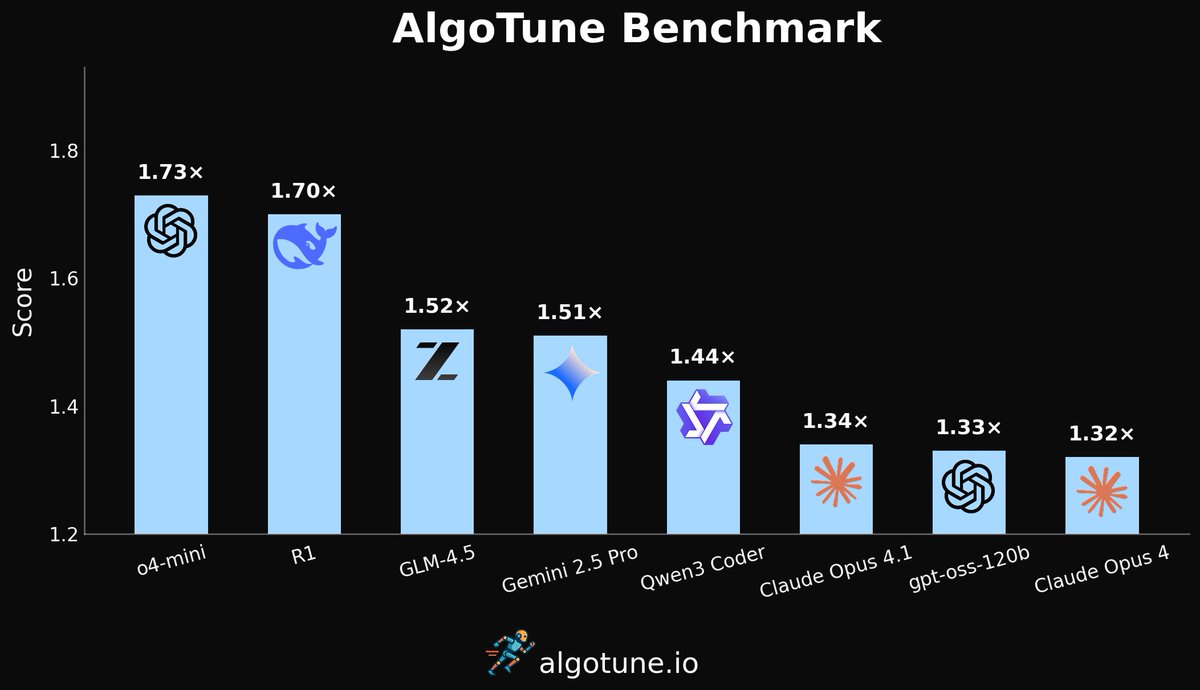

GPT-5 performs strongly on coding and mathematics benchmarks such as SWE-Bench and AIME. GPT-5 Pro saturated AIME 2025 and scored 32.1% on FrontierMath. Long-context processing is significantly improved, and the hallucination rate is much lower than that of the o3 model. On pricing, GPT-5 Nano, Mini, and Pro cover different service tiers; the Nano version is extremely cost-effective and already outperforms some earlier large models. Although GPT-5 did not beat Grok-4 on certain benchmarks such as ARC-AGI-2, its overall performance and competitive pricing make it a strong contender in the market.

Topic: GPT-5 Safety Evaluation Report (Source: METR_Evals)

The METR evaluation report indicates that GPT-5 is unlikely to pose catastrophic risks through AI R&D acceleration, rogue replication, or sabotage of AI labs. However, model capabilities are still advancing rapidly, and the model shows increasing awareness of when it is being evaluated.

🎯 Trends

Topic: Large Language Model Optimization and Application Progress (Source: huggingface, merve, algo_diver, basetenco, multimodalart)

Hugging Face’s TRL library now supports GRPO and MPO for Vision-Language Models (VLMs) and provides one-line CLI training commands, further advancing multimodal alignment. Baseten demonstrated the GPT-OSS 120B model running at 600+ tokens per second on NVIDIA GPUs, a significant throughput gain achieved through inference optimization. Experimental training of Qwen-Image LoRAs has also been completed, showcasing the model’s potential in image generation.

Topic: New AI Features in Specific Domains (Source: Ronald_vanLoon, c_valenzuelab, EthanJPerez)

Google Gemini Advanced users can now create on Canvas via Gemini 2.5 Pro. Runway’s Aleph model enables precise local modifications to video content, allowing changes to clothing, hairstyles, lighting, and locations with just text instructions. Claude Code has added an automatic code security review feature, integrated via slash commands or GitHub Actions, to help developers find vulnerabilities before code release.

Topic: Robotics and Bioacoustics AI Progress (Source: TheRundownAI, Ronald_vanLoon, Ronald_vanLoon, osanseviero)

Recent developments in robotics include Unitree releasing an ultra-high-speed stunt robot dog, OpenMind launching an “Android for robots” operating system, robot-operated hotels appearing in Japan, and robots helping rebuild homes after the Los Angeles fires. Separately, Google DeepMind released Perch 2, a 12-million-parameter bioacoustic model that can classify 15,000 species and generate audio embeddings for downstream applications, with the aim of advancing bioacoustic science for endangered species protection.

Topic: Large Visual Memory Model Unveiled (Source: TheTuringPost)

memories.ai has released what it calls the world’s first Large Visual Memory Model (LVMM), which is intended to give AI nearly unlimited visual recall. By running four models in stages, it can reason over a vast repository of visual experiences, improving how AI understands and processes visual information.

🧰 Tools

Topic: AI-Assisted Development and Content Creation Tools (Source: julesagent, LangChainAI, TomLikesRobots)

Jules can now run and render web applications, provide screenshots to verify frontend changes, and support adding public image links in tasks for visual context. LangChain’s Open SWE allows users to edit, remove, or add to its generated plans, enhancing the flexibility of code development agents. BeatBandit offers story creators the ability to transform raw story ideas into scenes, scripts, and drafts, claiming a 100x speed increase and automatic application of professional screenwriting techniques.

Topic: Knowledge Graph and RAG Enhancement Tools (Source: yoheinakajima, bobvanluijt, bobvanluijt)

Graphiti simplifies knowledge graph construction with support for real-time, temporally aware data and integrates with FalkorDB. It is particularly suited to LLM agents and advanced RAG pipelines that need to capture complex relationships between data. The Glowe AI skincare application uses “named vectors” to provide more personalized product recommendations: it assigns higher weights to rare, meaningful effects mentioned in reviews, which addresses the generic descriptions that dominate traditional search.
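The idea of weighting rare review effects more heavily can be sketched with a simple inverse-frequency scheme. This is only an illustration of the weighting principle, not Glowe’s implementation and not a use of the named-vector feature itself. The function names, the sample reviews, and the choice of an IDF-style formula are all assumptions.

```python
import math
from collections import Counter

def effect_weights(reviews):
    """Return an IDF-style weight per effect (assumed formula: log(N / df) + 1).

    `reviews` is a list of lists of effect tags; effects that appear in
    fewer reviews receive a higher weight.
    """
    total = len(reviews)
    doc_freq = Counter(effect for effects in reviews for effect in set(effects))
    return {effect: math.log(total / count) + 1.0
            for effect, count in doc_freq.items()}

def match_score(product_effects, wanted_effects, weights):
    """Sum the weights of the wanted effects that the product actually has."""
    return sum(weights.get(effect, 0.0)
               for effect in wanted_effects
               if effect in product_effects)

# Hypothetical review data: "moisturizing" is generic, the others are rare.
reviews = [
    ["moisturizing"],
    ["moisturizing", "reduces redness"],
    ["moisturizing"],
    ["moisturizing", "fades scars"],
]
weights = effect_weights(reviews)
print(weights)
```

With weights like these, a product that matches a rare effect outranks one that matches only the generic effect, which is the behavior the paragraph describes.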

Topic: Model Deployment and Evaluation Tools (Source: skypilot_org, hwchase17, dariusemrani)

SkyPilot provides recipes for distributed fine-tuning of OpenAI gpt-oss, using InfiniBand on Nebius AI and Hugging Face Accelerate for efficient training. LangSmith’s Align Evals feature aims to help developers build more reliable evaluation systems and reduce inconsistencies in prompt engineering. Scorecard AI now also supports GPT-5 model evaluation and highlights the efficiency of the model’s automatic routing.

📚 Learning

Topic: AI Evaluation and RAG Practice Resources (Source: HamelHusain, HamelHusain)

“Beyond Naive RAG: Practical Advanced Methods” is an open-source book that condenses 5 hours of instructional content into a 30-minute read focused on advanced RAG methods. Separately, the “AI Evals for Engineers & PMs” course provides a systematic framework for LLM evaluation, helping engineers and product managers better assess AI products.

Topic: LLM Inference and Code Generation Tutorials (Source: lateinteraction, shxf0072, cloneofsimo)

New research explores how to improve LLM coding capabilities in low-resource programming languages (such as OCaml and Fortran) and proposes new multilingual benchmarks. Additionally, a tutorial shows how to build a vLLM-style inference engine from scratch on top of FlexAttention in fewer than 1,000 lines of code, which is particularly useful for reinforcement learning researchers.

Topic: AI and Human Coding Capability Challenge (Source: fchollet)

Kaggle has launched the NeurIPS 2025 Code Golf competition, in which participants write the smallest possible Python programs that solve ARC-AGI-1 tasks. The competition tests whether humans are better than frontier models at writing concise, efficient code.
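To make “smallest possible” concrete, the sketch below compares a readable and a golfed solution to the same grid task by source size in bytes. The task (mirroring each row of a grid) is a hypothetical stand-in rather than an actual ARC-AGI-1 task, and the function name `p`, the helper names, and measuring size as UTF-8 bytes are assumptions made for illustration.

```python
# Hypothetical task: mirror every row of a grid left to right.
READABLE = '''def p(grid):
    result = []
    for row in grid:
        result.append(list(reversed(row)))
    return result
'''

# The same behavior written as compactly as possible.
GOLFED = "p=lambda g:[r[::-1]for r in g]"

def load(source):
    """Execute a source string and return the function it defines as `p`."""
    namespace = {}
    exec(source, namespace)
    return namespace["p"]

def byte_count(source):
    """Size of the source in bytes, used here as the golf score."""
    return len(source.encode("utf-8"))

grid = [[1, 2, 3], [4, 5, 6]]
readable_out = load(READABLE)(grid)
golfed_out = load(GOLFED)(grid)

print("same output:", readable_out == golfed_out)
print("readable bytes:", byte_count(READABLE))
print("golfed bytes:", byte_count(GOLFED))
```

Both versions must produce identical output for the submission to count; only then does the byte count matter.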

💼 Business

Topic: OpenAI Employee Incentives and Talent Competition (Source: steph_palazzolo)

OpenAI distributed bonuses ranging from hundreds of thousands to millions of dollars to approximately 1,000 researchers and engineers (about one-third of the company) to address fierce AI talent competition and prepare for the GPT-5 launch.

Topic: Cohere Labs Launches AI Innovation Grant Program (Source: sarahookr)

Cohere Labs has launched its “Catalyst Grants” program, aiming to provide developers and startups with free access to Cohere models to support them in building AI solutions that address critical challenges in education, healthcare, climate, and global communities.

🌟 Community

Topic: GPT-5 Release Sparks Controversy and Expectations (Source: natolambert, scaling01, doodlestein, Teknium1, charles_irl, BorisMPower, omarsar0, andersonbcdefg, OfirPress, code_star, nrehiew_, far__el, AymericRoucher, bigeagle_xd, gfodor, cHHillee, francoisfleuret, leonardtang_, TheEthanDing, m__dehghani, crystalsssup, kipperrii, inerati, tokenbender, menhguin, sbmaruf, LiorOnAI, Dorialexander, BrivaelLp, lateinteraction, suchenzang)

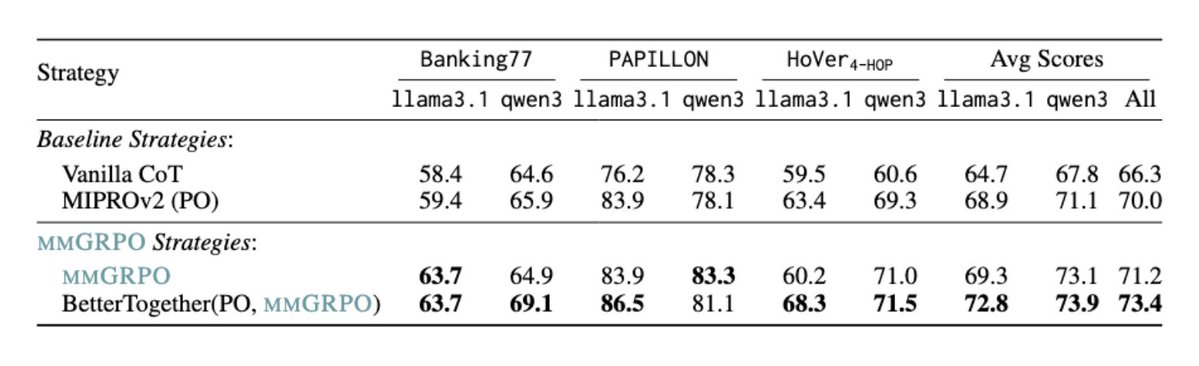

The release of GPT-5 has sparked widespread discussion within the community. Some users were disappointed that its performance on certain benchmarks (such as ARC-AGI-2) fell short of expectations and felt that the progress was not the kind of leap seen from GPT-3 to GPT-4. The charts OpenAI showed in its launch demonstration were also criticized as “chart crime,” with the data presentation raising questions about transparency and marketing tactics. Nevertheless, many early testers confirmed improvements in coding, tool use, and reasoning, and believe the model will significantly change how work gets done. The community also discussed combining reinforcement learning with prompt optimization in compound AI systems, as well as AI talent scarcity and high costs.

💡 Others

Topic: Research on AI Agent Efficiency Improvement (Source: _akhaliq)

A study titled “Efficient Agents” focuses on building effective AI agents while reducing costs. This indicates that the AI field is continuously exploring ways to optimize the performance and resource consumption of agent systems, making them more feasible and economical for practical applications.

🔥 Spotlight

Topic: OpenAI Releases GPT-5, Emphasizing Practicality and Affordability

Detailed interpretation, analysis, and insights: OpenAI has officially launched GPT-5, making it available to ChatGPT users, including the free tier, and via the API simultaneously. Sam Altman stated that GPT-5 is OpenAI’s most intelligent model to date, but the core focus of this release is practicality, accessibility to the general public, and cost-effectiveness. He noted that while more powerful models will be released in the future, GPT-5 aims to benefit over a billion users worldwide, especially since most users have only experienced models up to the GPT-4o level. This update aims to provide a more stable experience with fewer hallucinations, helping users complete tasks such as coding, creative writing, and health information inquiries more efficiently. (Source: sama, OpenAI, sama)

Topic: GPT-5 Achieves Significant Improvement in Coding Capabilities

Detailed interpretation, analysis, and insights: GPT-5 is hailed as OpenAI’s most powerful coding model to date, performing especially well at complex frontend generation and debugging large codebases. Prominent coding tools like Cursor have set GPT-5 as their default model, replacing Claude, and describe it as “the smartest coding model we’ve tried.” Developers widely report that GPT-5 excels at instruction following and tool calling, handles multi-step, long-running coding tasks efficiently, and produces higher-quality code with fewer hallucinations, all of which matter for development efficiency. (Source: BorisMPower, zhansheng, openai, lmarena_ai, aidan_mclau)

Topic: GPT-5 API Pricing Strategy Highly Competitive

Detailed interpretation, analysis, and insights: GPT-5’s API pricing is lower than GPT-4o’s and highly competitive with other frontier models. For instance, its input-token price is significantly lower than that of Claude Sonnet 4, which will substantially reduce the cost of coding tasks. The OpenAI team attributed this to a year of sustained effort to lower the cost of intelligence and emphasized its continued commitment to that goal. This strategy is expected to accelerate GPT-5’s adoption among developers, making it the preferred model for more applications and services. (Source: juberti, jeffintime, aidan_mclau, bookwormengr)

Topic: GPT-5 Significantly Reduces Model Hallucination Rate

Detailed interpretation, analysis, and insights: GPT-5 has made significant progress in reducing model hallucinations, achieving an all-time low hallucination rate. This means the model is more accurate and reliable when generating content, better able to distinguish facts from speculation, and can provide citations when needed. This improvement enhances the model’s trustworthiness, making it more robust when handling critical domains such as health information. Comments indicate that GPT-5 achieved a perfect score on Anthropic’s “Agentic Misalignment” benchmark, virtually eliminating harmful behaviors, further demonstrating its safety. (Source: sama, aidan_mclau, scaling01, aidan_mclau)

Topic: OpenAI Invests Heavily in Compute Infrastructure for GPT-5

Detailed interpretation, analysis, and insights: To support the GPT-5 launch, OpenAI has increased its computing power by 15x since 2024. In the past 60 days, the company built over 60 clusters, with backbone network traffic exceeding that of an entire continent, and deployed over 200,000 GPUs to support the rollout of GPT-5 to 700 million people. Concurrently, OpenAI is planning its next-generation 4.5GW superintelligence infrastructure. Sam Altman specifically thanked partners such as Microsoft, Nvidia, Oracle, Google, and CoreWeave, emphasizing the massive GPU load behind this release. (Source: sama, sama, itsclivetime)

🎯 Trends

Topic: GPT-5 Introduces New Chat Personas and “Thinking” Mode

Detailed interpretation, analysis, and insights: GPT-5 not only enhances core capabilities but also introduces four new chat personas: Cynic, Robot, Listener, and Nerd. Users can switch between them in settings to experience different conversational styles. Additionally, the model offers a “Thinking” mode that lets users choose between a quick answer and deeper reasoning, reflecting OpenAI’s experiments with model controllability and user experience. (Source: openai, kylebrussell, joannejang)

Topic: OpenAI Releases GPT-OSS Open-Weight Models

Detailed interpretation, analysis, and insights: Breaking years of silence, OpenAI has released the GPT-OSS series of open-weight models (GPT-OSS-20B and GPT-OSS-120B). These models are licensed under Apache 2.0, feature a 128k context window, and possess chain-of-thought reasoning capabilities, supporting local execution. This move is seen as OpenAI’s “return” to the open model domain, potentially balancing closed-source and open-source ecosystems and altering the competitive landscape of AI models. The community has widely discussed the strategic intentions behind OpenAI’s decision. (Source: TheTuringPost, huggingface, juberti)

Topic: AI Model Evaluation Benchmarks and Chart Quality Spark Controversy

Detailed interpretation, analysis, and insights: Following the GPT-5 release, several benchmark results ignited heated discussion within the community. Tests like SWE-Bench (whose tasks come primarily from Django) and ARC-AGI were widely cited, but some users questioned how representative these benchmarks are and criticized the quality of the charts, using the term “chart crime.” Some argue that certain benchmarks do not fully reflect a model’s actual capabilities because they focus too narrowly on specific libraries or tasks. The model’s real-world performance in creative writing, instruction following, and other areas also prompted comparisons with models like Claude Opus 4.1 and Gemini 2.5 Pro. (Source: nrehiew_, sbmaruf, ajeya_cotra, dotey, TheZachMueller, jeremyphoward, agihippo, code_star, BrivaelLp, TheEthanDing, colin_fraser, op7418, karminski3)

Topic: The Era of Model Routing Arrives, Balancing Intelligence and Cost-Effectiveness

Detailed interpretation, analysis, and insights: With the launch of GPT-5, the era of model routing has begun. OpenAI now offers model options with varying performance, cost, and latency trade-offs through GPT-5, GPT-5-mini, and GPT-5-nano, meaning model selection is shifting from manual user switching to more intelligent backend routing. This trend will enable models to automatically select the most suitable backend for different scenarios, achieving an optimal balance between intelligence and cost-effectiveness. Developers generally believe this mode will significantly enhance the efficiency and user experience of AI applications. (Source: snsf, swyx, scaling01, tokenbender)
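The routing idea can be sketched as a small function that estimates how demanding a request is and picks the cheapest tier that should handle it. This is a toy heuristic under stated assumptions, not a description of OpenAI’s router: the tier names mirror the three models mentioned above, while the complexity score, the keyword list, and the thresholds are invented for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    name: str
    max_complexity: int  # highest complexity score this tier should handle

# Tiers ordered from cheapest to most capable; thresholds are assumptions.
TIERS = (
    Tier("gpt-5-nano", 2),
    Tier("gpt-5-mini", 5),
    Tier("gpt-5", 10**9),
)

# Crude signals that a request may need deeper reasoning (assumed list).
REASONING_HINTS = ("debug", "refactor", "step by step", "analyze")

def complexity_score(prompt: str) -> int:
    """Score a prompt: 1 point per 50 words plus 3 points per matched hint."""
    text = prompt.lower()
    score = len(text.split()) // 50
    score += 3 * sum(hint in text for hint in REASONING_HINTS)
    return score

def route(prompt: str) -> str:
    """Return the name of the cheapest tier whose threshold covers the prompt."""
    score = complexity_score(prompt)
    for tier in TIERS:
        if score <= tier.max_complexity:
            return tier.name
    return TIERS[-1].name

for prompt in (
    "What is the capital of France?",
    "Debug this function",
    "Debug and refactor this module step by step",
):
    print(prompt, "->", route(prompt))
```

A production router would more plausibly use a learned classifier and live cost or latency signals rather than keyword matching; the sketch only shows where the intelligence-versus-cost trade-off is made.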

🧰 Tools

Topic: Cursor Sets GPT-5 as Default Coding Model and Launches CLI Version

Detailed interpretation, analysis, and insights: Coding assistant Cursor announced that it has set GPT-5 as its default model, replacing the previous Claude, and hailed it as “the smartest coding model” the team has tested. Concurrently, Cursor also launched a CLI (Command Line Interface) version, allowing users to directly access all models in the terminal and seamlessly switch between the CLI and editor. The CLI version supports automated script writing, documentation updates, and security reviews, and can guide and adjust AI Agent behavior in real-time, supporting custom rules, greatly enhancing development efficiency and flexibility. (Source: BorisMPower, zhansheng, itsclivetime, doodlestein, dotey, amanrsanger, op7418)

Topic: Multiple AI Applications and Platforms Integrate GPT-5

Detailed interpretation, analysis, and insights: Following the release of GPT-5, numerous AI applications and platforms, including Perplexity, LlamaIndex, LangChain, Gradio, Spellbook, Notion AI, JetBrains AI Assistant, Higgsfield Assist, and Yupp.ai, swiftly announced their integration of GPT-5. Perplexity offers GPT-5 access to Pro and Max subscribers, LlamaIndex provides day-zero support for GPT-5 and uses it for Agent Maze benchmarks, and LangChain quickly added support for GPT-5 to build agents. These integrations enable GPT-5’s capabilities to rapidly empower various AI tools and development frameworks, accelerating its real-world application. (Source: AravSrinivas, perplexity_ai, jerryjliu0, LangChainAI, huggingface, scottastevenson, kevinweil, sama, yupp_ai, _akhaliq)

Topic: Codex CLI Integrates GPT-5, Enhancing Command-Line Development Experience

Detailed interpretation, analysis, and insights: OpenAI has significantly improved the Codex CLI and integrated it with GPT-5. Now, users with a ChatGPT paid plan can use GPT-5 in the command-line tool without needing an API key. This update includes upgraded prompts, sandbox logic, and approval processes, and introduces a brand-new terminal UI. This enhancement allows developers to leverage GPT-5’s powerful coding capabilities directly within the command-line environment for code generation, debugging, and project management, further boosting the efficiency and convenience of command-line development. (Source: aidan_mclau, gdb, aidan_mclau)

Topic: pr-checker-ai Leverages GPT-5 for Automated Code Review

Detailed interpretation, analysis, and insights: A new development tool named pr-checker-ai has been launched, which utilizes GPT-5’s capabilities to perform code reviews and comments directly on GitHub Pull Requests (PRs). The tool supports side-by-side comparisons using both OpenAI and Anthropic models, allowing developers to quickly and conveniently evaluate the performance of different models in code review. This marks a further deepening of AI’s application in automated software development processes, with the potential to significantly improve code quality and development efficiency. (Source: jerryjliu0, jerryjliu0)

📚 Learning

Topic: OpenAI Releases GPT-5 Prompt Engineering Guide

Detailed interpretation, analysis, and insights: OpenAI has released an official prompt engineering guide for GPT-5, detailing how to interact effectively with the model to fully leverage its capabilities in reasoning, planning, and hallucination reduction. The guide highlights GPT-5’s strengths in long-context understanding and instruction following, providing specific prompting techniques and best practices to help users optimize model output. This serves as an important learning resource for both developers and general users, aiding in better utilization of GPT-5’s powerful features. (Source: scaling01)

Topic: AI Agent Production Practice and Evaluation Course Sharing

Detailed interpretation, analysis, and insights: The community features shared experiences and recommended learning resources on AI Agent production practices. A seasoned AI Agent developer shared a simple tutorial for building production-grade AI Agents, emphasizing the importance of practical implementation. Additionally, AI evaluation courses are recommended, aimed at helping engineers and product managers systematically evaluate AI products, identify issues through error analysis, and develop evaluation metrics to capture errors, thereby iteratively improving AI Agents. These resources are highly valuable for professionals looking to deeply understand and apply AI Agents. (Source: _avichawla, HamelHusain, HamelHusain)

Topic: PyTorch 2.8.0 Release and vLLM FlexAttention Tutorial

Detailed interpretation, analysis, and insights: PyTorch 2.8.0 has been released, bringing several important improvements, including NCCL 2.27.3 optimizations and support for CUDA 12.9. The community also shared a tutorial on building a vLLM-style inference engine from scratch in fewer than 1,000 lines of code, with throughput optimized via FlexAttention. The tutorial shows how FlexAttention enables efficient inference systems and treats PagedAttention as a special case of its abstraction, making it useful learning material for developers who want to understand and build high-performance LLM inference systems. (Source: StasBekman, finbarrtimbers, cHHillee, code_star)

💼 Business

Topic: Nvidia Rejects US Government’s AI Chip Backdoor Request

Detailed interpretation, analysis, and insights: Nvidia has publicly rejected the US government’s request to install “backdoors” in its AI chips. Company executive David Reber Jr. stated that “good secret backdoors” do not exist, only dangerous vulnerabilities that need to be eliminated. This stance highlights the complex relationship between AI chip security and national security, as well as tech companies’ insistence on data privacy and product integrity. (Source: brickroad7)

Topic: Google Offers Free AI Tools and Funds Education & Research

Detailed interpretation, analysis, and insights: Google announced it will provide its top AI tools for free to university students in the US and other designated countries for one year, and pledged $1 billion in funding for education and research, including free AI and career training for all US university students. This initiative aims to promote AI education, cultivate future AI talent, and strengthen Google’s leadership in academia and talent development. (Source: demishassabis)

Topic: Tesla Disbands Dojo Supercomputer Team

Detailed interpretation, analysis, and insights: It is reported that Tesla has disbanded its Dojo supercomputer team, and the team’s head will also be departing. This move disrupts the automaker’s efforts to develop its own autonomous driving chips. This news suggests that Tesla may be adjusting its AI hardware self-development strategy and reflects the intense and complex competition in the AI computing field. (Source: draecomino)

🌟 Community

Topic: GPT-5 Release Triggers Mixed “Vibe Check” in Community

Detailed interpretation, analysis, and insights: The release of GPT-5 has sparked a complex and mixed “vibe check” within the community. Some users were “shocked” and “impressed” by its powerful practicality, reduced hallucinations, and performance in coding and agentic tasks, believing it will become a new driving force for daily work. However, some users expressed “disappointment,” feeling that this release lacked “amazing” breakthrough progress, with some even mocking the poor quality of its demo charts and questioning its actual difference from previous models. This divergence reflects the community’s diverse expectations for AI model advancements and its scrutiny of marketing versus actual performance. (Source: rishdotblog, ShunyuYao12, fabianstelzer, mitchellh, iScienceLuvr, VictorTaelin, swyx, brickroad7, mckaywrigley)

Topic: Philosophical Discussion on AI Model “Hallucinations”

Detailed interpretation, analysis, and insights: Although OpenAI claims GPT-5 significantly reduces its hallucination rate, a philosophical discussion about AI model “hallucinations” has emerged within the community. Some argue that the ideal amount of hallucination should not be zero, drawing parallels to the thought processes of geniuses like Einstein and Tesla, implying that completely eliminating hallucinations might hinder the achievement of Artificial Superintelligence (ASI). This discussion transcends the technical level, touching upon the essence and development path of AI intelligence, prompting deeper reflection on the relationship between AI creativity and “errors.” (Source: gfodor, [teortaxesTex](https://x.com/teortaxesTex/status/195356208383